SharePoint Clustering Techniques for High Availability SharePoint

A keyword that's often thrown around when discussing SharePoint architecture is "clustering". The problem is that it's often not very clear what it means in the context of SharePoint, so here's a quick article on what it might mean, so we can all be on the same page when we use the term.

Clustering Techniques Available for High Availability SharePoint

So here's how we can "cluster SharePoint" to make it more highly available. There are several options; in no particular order:

SQL Server Clustering for SharePoint

One of the first meanings I assume for clustering is SQL Server clustering for the SharePoint databases back-end, of which there are two types – failover and AlwaysOn. Believe it or not, both can be used at the same time.

Failover clustering just provides a single logical SQL Server instance over one active server and X passive servers (1 active and 3 passive, for example). The idea is that when the active server dies for any reason whatsoever, another server will pick up where the previously active server left off. Automatic failover is key to the whole idea, and data is shared between all nodes. This is the more traditional kind of SQL Server clustering, and it's quite common to see in fact.
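
To make the active/passive idea concrete, here's a minimal sketch in Python. It's a toy model only, not how Windows failover clustering is implemented; the class and the node names (`sql-a` and so on) are made up for illustration.

```python
class FailoverCluster:
    """Toy model of an active/passive cluster behind one logical instance name."""

    def __init__(self, nodes):
        # The first healthy node is treated as the active one; the rest are passive.
        self.healthy = list(nodes)

    @property
    def active(self):
        if not self.healthy:
            raise RuntimeError("no healthy nodes left: the instance is offline")
        return self.healthy[0]

    def node_failed(self, node):
        """Remove a dead node; if it was active, the next passive node takes over."""
        if node in self.healthy:
            self.healthy.remove(node)


cluster = FailoverCluster(["sql-a", "sql-b", "sql-c", "sql-d"])  # 1 active, 3 passive
cluster.node_failed("sql-a")   # the active node dies
print(cluster.active)          # clients still talk to the same logical instance
```

The point of the model is that callers only ever ask for `cluster.active`; which physical node answers is the cluster's problem, not theirs.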

SQL Server AlwaysOn clustering is a bit more complicated. It's X logical SQL Server instances for which a single logical interface (the listener) may or may not exist, and the primary node may or may not automatically fail over between the instances. Some people use it like failover clustering – a single instance for automatic failover; other SharePoint admins use it to back up data to a separate site (for disaster-recovery for example), and you can even combine uses. It's sort of failover-clustering + mirroring rolled into one.

So that's SQL Server clustering. SharePoint has been able to use any or all of these setups since SharePoint Server 2013, but ultimately SharePoint still just connects to a single logical data-source.

SharePoint Web-Front-End Clustering

This is otherwise known as network-load-balancing (NLB). SharePoint is very commonly used in this configuration; x2 or more servers will be dedicated to just serving web-pages to users, the requests of which come through a network load-balancer.
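
As a rough illustration of what a load balancer does for the web-front-ends, here's a minimal round-robin sketch in Python. It's an assumption-laden toy (real NLBs also do health probes, affinity and so on), and the `wfe1`/`wfe2`/`wfe3` names are hypothetical. It shows one endpoint spreading requests over several servers, and carrying on when one server is taken out of rotation.

```python
class LoadBalancer:
    """Toy round-robin balancer: one endpoint, many web-front-ends."""

    def __init__(self, nodes):
        self.nodes = list(nodes)   # every WFE registered with the balancer
        self.drained = set()       # WFEs temporarily taken out of rotation
        self._next = 0             # position of the next node to try

    def drain(self, node):
        """Take a node out of rotation, e.g. before rebooting it."""
        self.drained.add(node)

    def restore(self, node):
        """Put a node back into rotation."""
        self.drained.discard(node)

    def route(self):
        """Return the next in-rotation node, skipping drained ones."""
        for _ in range(len(self.nodes)):
            node = self.nodes[self._next % len(self.nodes)]
            self._next += 1
            if node not in self.drained:
                return node
        raise RuntimeError("no web-front-ends available")


lb = LoadBalancer(["wfe1", "wfe2", "wfe3"])
lb.drain("wfe2")                           # reboot wfe2
print([lb.route() for _ in range(4)])      # only wfe1 and wfe3 get traffic
```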

"But an NLB isn't a true cluster!" I hear you exclaim. Well actually it is, albeit outside the core functionality of SharePoint itself. A cluster is just a collection of servers to service a single end-point, and an NLB is a perfect example of that. You can take a server out of the NLB (to reboot the server for example) and outside traffic will carry on quite happily, albeit now with more load to the remaining active nodes.

SharePoint Application Server Clustering

SharePoint needs application roles to handle service-application requests. As that statement is both obvious and slightly dry, here's a real-life example.

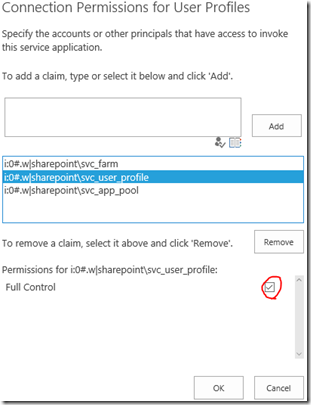

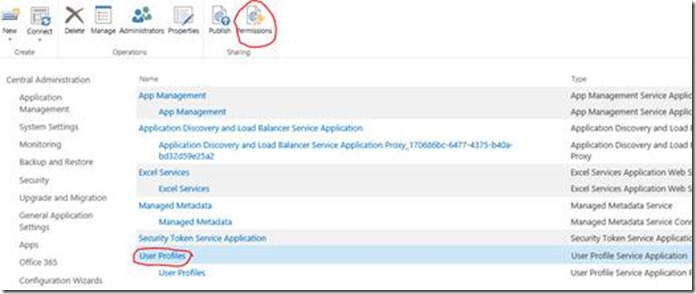

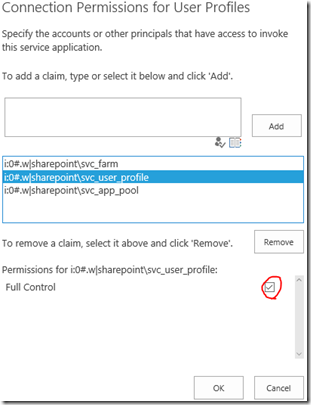

Web-part audience filters; they use user-profile data to know if the audience setting applies to the current user or not and therefore whether to show the web-part or not. This type of query requires the user profile service-application, which in turn needs & will send requests to application servers running the "user profile" service. These calls will work fine (and therefore the page loading with the web-part too) as long as just one of the servers responds to the user profile service request, so always have at least x2 for each type of service. If there's only one server in the list of "user profile servers" and it doesn't respond, then that's a fatal error for the page rendering.
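
The "works as long as one server responds" behaviour can be sketched like this in Python. This is not SharePoint's actual service-application load balancer, just a minimal model of the idea; the servers are plain callables invented for the example.

```python
def call_service(servers, request):
    """Try each application server in turn; succeed on the first response."""
    errors = []
    for server in servers:
        try:
            return server(request)
        except ConnectionError as exc:
            errors.append(exc)   # remember the failure, try the next server
    raise ConnectionError(f"all {len(servers)} server(s) failed: {errors}")


def dead_server(request):
    raise ConnectionError("server not responding")


def live_server(request):
    return f"profile data for {request}"


# Two servers running the service: the page still renders.
print(call_service([dead_server, live_server], "sam"))
```

With only `dead_server` in the list, the same call raises, which is the "fatal error for the page rendering" case described above.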

Again, this type of invisible failover ability is technically "clustering", and actually is very handy at dealing with unexpected outages. More on this architecture here.

Related to application-server clustering are search clustering and AppFabric clustering.

Virtual Machine (VM) Clustering

Some people like to cluster the machines that run SharePoint Server (or some other dependent server). If the machine host running the VM dies, the machine is failed-over to another host, sometimes in another data-centre even. Hyper-V can do this quite nicely and so can other hypervisors too.

SharePoint keeps running and life goes on blissfully unaware of the disaster that just happened.

Important: This isn't supported with any version of SharePoint as there's no guarantee of data parity between VM replicas. The only thing that we do support for SharePoint is failing over to Azure via Azure Site Recovery.

SharePoint Farm Clustering/Disaster Recovery (DR)

SharePoint DR is pretty simple; it's simply having x2 SharePoint farms that share the same content. The primary farm is in read/write mode until we move users to a passive farm, which then becomes the new primary. Every farm shares the same content so should be identical to the user, albeit with different configuration & service application databases in parallel.

The idea is that an entire SharePoint farm can die or go offline for some reason and SharePoint users will still be able to use SharePoint. More on SharePoint disaster-recovery here.

Again, this is also clustering because we've doubled-up the SharePoint servers to provide the same service – running a whole SharePoint farm. It's unlikely anyone will mean SharePoint DR when they say "SharePoint clustering" but it's worth knowing about just in case.

Which Clustering/High-Availability Techniques to Use for SharePoint?

Good question. All of them if there's enough budget for it :)

If I had to prioritise though, having a disaster-recovery farm is pretty high on the list in my opinion. After that, SQL Server AlwaysOn gives you x2 replicas of the same data, and having the SharePoint WFEs & app-servers clustered too will give you a pretty resilient SharePoint farm.

Hyper-V clustering is probably last on my list of priorities because it's not easy to set up and it's just for guaranteeing uptime for virtual machine instances. On the other hand, my preferred designs assume failures will occur at a virtual-machine level and just make sure SharePoint isn't affected by them. Failures will always happen; it's how we handle them that counts for high-availability.

Wrap-Up

So I hope that's cleared-up what might be meant by the term "SharePoint clustering". In short, it can mean all sorts of things so hopefully this will help clarify exactly what's meant, and at what level. They're all useful for making SharePoint highly available so should all be considered if you care about SharePoint uptime.

Cheers,

// Sam Betts

SharePoint Disaster Recovery Failover Techniques

So you've got or are interested in two SharePoint farms running in parallel and you want to know how to switch users between the two farms for when you need to. There's a few options to do it; choosing the right one largely depends on how quickly you need to be able to failover everyone, and to what extent you can invest in an architecture to allow said instant failovers.

I say "disaster recovery" but you can also call this "passive/active" SharePoint farm failover too; either way you have users on one farm and you want them to go on the other farm for whatever reason. Here are your options.

SharePoint Farm Failover Methods

There's a few ways of performing your failover, depending on how much effort you can spare to setting it all up beforehand.

Content Database Failovers 1st?

Something you'll want to think about is what order to failover users & databases, given there's no clean way of doing both perfectly at the same time.

Generally speaking, for nice & planned failovers in a maintenance window the order for failover should be:

- Failover SQL Server; make the up-to-now primary SQL instance the now-to-be (readable) secondary, and the previously-read-only passive SharePoint farm instance the now-to-be primary.

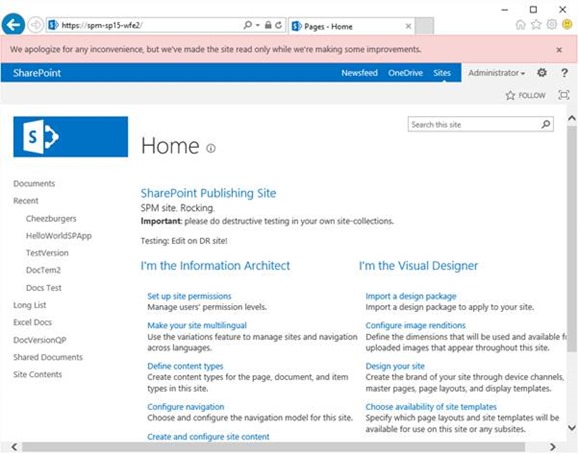

- Once done, the primary SharePoint site will now be in read/only mode for users. Hopefully they'll be expecting this.

- Failover users via one of these methods below.

- Users will start using the secondary farm immediately or not, depending on which way you failover users.

It's impossible to guarantee nobody will see a read-only SharePoint until everyone + databases are moved over to the secondary farm, so personally I'd have a "maintenance hour" if this is a planned failover so users can expect a couple of speed-bumps as everything switches.
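
The read-only window can be seen in a tiny Python model of the planned order above. Everything here (the `Site` class, the farm names) is a hypothetical illustration, not SharePoint or SQL Server code: the databases move first, then the users, and in between users are sitting on a read-only farm.

```python
from dataclasses import dataclass


@dataclass
class Site:
    writable_farm: str   # farm whose SQL replica is currently primary (read/write)
    users_on: str        # farm that users are currently routed to

    def users_see_read_only(self):
        return self.users_on != self.writable_farm


def planned_failover(site, target):
    """Yield a snapshot of the state after each step of the planned order."""
    site.writable_farm = target                    # step 1: fail over SQL Server
    yield Site(site.writable_farm, site.users_on)
    site.users_on = target                         # step 2: fail over the users
    yield Site(site.writable_farm, site.users_on)


for state in planned_failover(Site("farm1", "farm1"), "farm2"):
    print(state, "read-only for users:", state.users_see_read_only())
```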

If it's an emergency then, well just failover ASAP of course; we're way past caring about perfect user transitions if it's a firefight.

DNS Updates

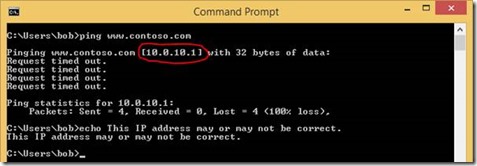

This is a pretty standard way of redirecting; you have DNS "A" record www.contoso.com pointing at a network-load-balancer on farm 1. You just update the record and wait for the clients to notice.

Nothing really that magical here; we just depend on the time-to-live value of the "www.contoso.com" A-record. That's to say, once we update the record the changeover won't be instant for clients that have the DNS info cached already, which may be an issue.
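
To put a number on that delay, here's a small Python sketch of the arithmetic. It assumes a client simply honours the record's TTL from the moment it cached it; the function name and the example timings are made up.

```python
def seconds_until_switch(ttl, cached_at, updated_at):
    """Seconds after the DNS update that a client keeps using the old address.

    ttl        -- time-to-live of the A-record, in seconds
    cached_at  -- when the client cached the old record (seconds on any clock)
    updated_at -- when we changed the record (same clock)
    """
    expires_at = cached_at + ttl
    return max(0, expires_at - updated_at)


# A-record with a 5-minute TTL; the client cached it 2 minutes before our update.
print(seconds_until_switch(ttl=300, cached_at=0, updated_at=120))
```

The worst case is a client that cached the record just as we changed it: it waits the full TTL. A shorter TTL means a quicker switch at the cost of more DNS lookups.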

Pros:

- Easy to execute.

- No reliance on fancy architecture.

Cons:

- Delayed response for clients.

Network Load Balancer (NLB) Reconfiguration

This is a bit trickier and assumes you even have a network-load-balancer for web-front-end traffic. In short, you reconfigure the NLB to just send traffic to the other farm's web-front-ends and that's it.

Again, the solution isn't overly exotic but it'll work with instant effect – no dependence on clients to update. Just make sure the configuration doesn't at any point send users to both farms as that'll cause all kinds of havoc (nothing damaging; just web-form updates for example may start to fail for users, etc).

Pros:

- Instant effect.

- No reliance on fancy architecture.

Cons:

- A misconfiguration will give split-farm syndrome – all requests need to go to one or the other farm, without fail.
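
One way to guard against split-farm syndrome is to sanity-check the pool before applying it. Here's a minimal Python sketch of such a check; the node-to-farm mapping and all the names are hypothetical, and a real NLB would need its own tooling for this.

```python
def validate_pool(pool, farm_of):
    """Return the single farm the pool points at, or raise if it's split or empty.

    pool    -- iterable of web-front-end names the NLB will send traffic to
    farm_of -- dict mapping each web-front-end name to its farm
    """
    farms = {farm_of[node] for node in pool}
    if len(farms) != 1:
        raise ValueError(f"pool must target exactly one farm, got: {sorted(farms)}")
    return farms.pop()


FARM_OF = {"f1-wfe1": "farm1", "f1-wfe2": "farm1",
           "f2-wfe1": "farm2", "f2-wfe2": "farm2"}

print(validate_pool(["f2-wfe1", "f2-wfe2"], FARM_OF))   # fine: all farm2
```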

Reverse Proxy Redirect

This is probably the most problem-free in terms of failover risk, but also the most complicated to setup. It basically just means you have to configure both farms to accept the internal & external URLs (and please, make sure you thoroughly test this is setup right or bad things can happen).

The nice thing here is that because all the clients are already pointed at the reverse-proxy, there's a single reconfiguration for the published application there to a new endpoint and that's it.

Pros:

- Instant effect.

- Simple reconfiguration.

Cons:

- The most complicated of the three to set up.

Things That Can Go Wrong on Failover

Not much really. Item edits may fail if you're caught mid-failover without realising. Say you opened an edit-form from the primary & since editing the failover happened; the update would fail if sent to the secondary farm.

Probably the most potentially dangerous is the NLB approach if mishandled, as that could theoretically send users to both farms. Still though, only one farm is ever writable so nothing too damaging could really happen.

That's it! Comments welcome.

Cheers,

// Sam Betts

SharePoint Disaster Recovery vs. Active Passive Farms

Just a quick clarification on terminology & methodologies for SharePoint "disaster recovery" (DR).

In case you didn't know already, multiple SharePoint farms can be run sharing the same content data, which is very handy if you need near 100% uptime for your SharePoint sites & apps. If for any reason your primary farm dies, you have another farm waiting for you. This post isn't new in ideas; this is mainly just a quick note on terminology & why there are these differences really, as there is still some confusion.

This trick is achieved by sharing certain databases between two farms, with said databases being in read/write on the "primary" side. All the users are on whichever farm has read/write access to the databases, with the other side(s) receiving any data changes & running in read-only mode.

If for any reason we need to switch farms, we put the read-only DBs in read/write mode and send everyone to the other farm.

SharePoint fully supports having service-application & content-databases in read-only mode and can switch between the two modes without any intervention.

A Quick History – Secondary Farm for DR Only

Back in the day, before AlwaysOn, SQL Server databases could be kept in sync with either mirroring or log-shipping. In the case of SharePoint DR with log-shipping at least (which was the most common DR method), the problem was that switching-over the read/write SQL instances was a "one-hit" use. That's to say you could easily switch from primary SQL instance to the secondary instance (in the event of the need arising), but going back again took a lot more work.

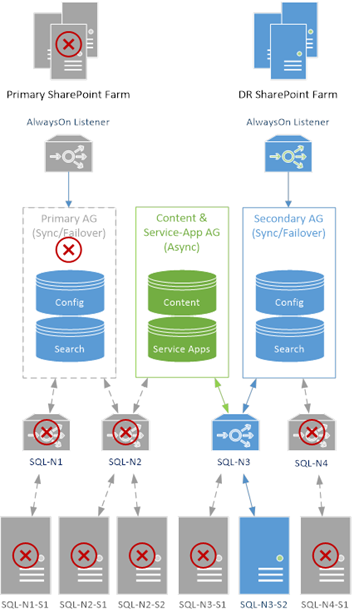

In the event of a failure on farm 1, we'd go from this:

…to this…

The only problem is that the switch back for SQL can take a while to get online & ready to failover again.

Anyway, because of this "one-way" failover nature, the secondary farm was strictly used for emergencies only, hence the concept of the farm being "only for disasters" and therefore the name "disaster recovery farm".

Normally this was fine because it's rare for multiple critical, farm-wide problems to happen on both sides. Not impossible, but rare, so this was still a good option to have available even if it was just for critical failures on the primary farm only.

Enter: Quick-Switching Failovers with AlwaysOn

These days with SQL Server AlwaysOn, SQL instances switching is very much easier than before. For one thing, when we switch primary SQL instances, switching back doesn't require any extra work.

What does that mean? Well it means now we can switch between the two SQL instances much quicker than before.

What does that mean? Simple really; enter the concept of "active/passive" farms rather than the more dramatic "disaster recovery" farm model. With active/passive farms switching is much quicker; just a simple SQL failover + users redirect each time.

Now we can go from this:

…to this…

…and back again. And back again; no restores or DB tricks needed except a failover.

What's the Correct Name & DR Model Then?

Good question; a lot depends on your original plans for the 2nd farm. In general, the old-style "only in disasters" model is a bit rubbish as it relies on having a disaster to know whether everything will even work, as it is the "nuclear option" for disasters. I've seen cases where everything "worked" on the DR site but when push-came-to-shove, the hardware just wasn't ready for the same load; and of course, this only became apparent when the primary went nuclear so everyone was moved over to the DR site.

Partly to avoid unpleasant surprises, my recommendation is we forget about "nuclear option DR farms" and just get used to having two equally active SharePoint farms (as in both switching between active/passive). Now we have an easy way of failing over there doesn't seem like much reason not to, and it guarantees that both could work in the event of an emergency.

Remind me Again – What Could Force a Failover?

Patching & updates mainly; it's a necessary but risky business, on any platform.

Patching Windows, SQL Server(s), SharePoint installations; all of these have risks in that there's always a chance whatever update will break services. From Windows platform to .net to SharePoint; despite our best efforts, servers are complicated beasts so very occasionally something may slip through the cracks.

This isn't something specifically tied to Microsoft patches either; I challenge someone to find a vendor that doesn't have occasional patching fracases. Hint: there aren't any.

The solution to this is simple: test patches first, and have another production system on stand-by in case something critical dies.

Have no single points of failure in other words, and active/passive farms are the ultimate high-availability SharePoint solution because they're the only architectures that achieve this.

Patching isn't the only reason; your own code may cause issues, and it's nice to have quick failback options. I can think of more than one big SharePoint customer that's benefited greatly by having this capability for handling bad code rollouts.

There's all sorts of reasons why it's worth doubling-up on farms, but mostly it's for the reasons you can't think of you'll need it the most ;)

Cheers,

Sam Betts

SharePoint Performance Monitoring with Azure

Aside from epic scaling possibilities, another cool ability Azure gives us is HTTP endpoint monitoring – the ability to check our SharePoint apps are responding nicely, even from various locations around the world if we want it.

This is a fairly quick article, mainly thanks to the brilliant simplicity of how this works.

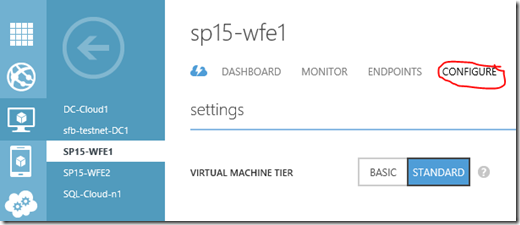

Add Endpoint Monitoring for Cloud Services URL

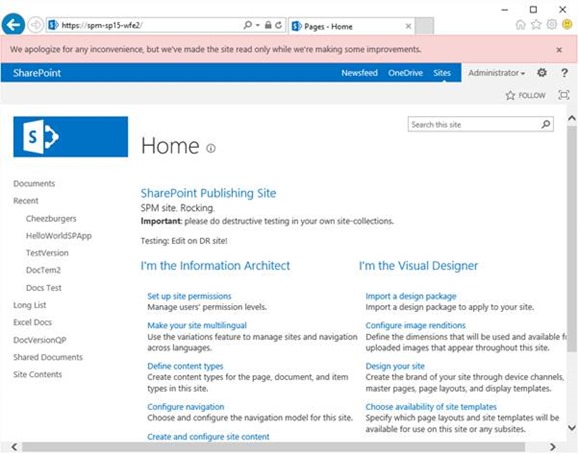

So in my SharePoint/Azure environment I have it publicly exposed via the cloud-services URL. I want to monitor how quickly the default page loads as a rough metric of how stressed the system is (there are all sorts of metrics available of course).

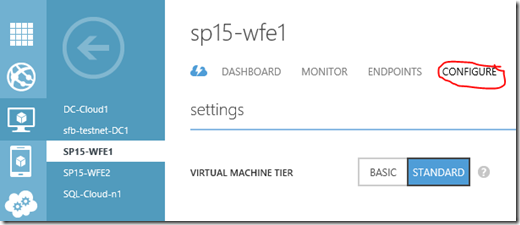

So, I go-to the SharePoint WFE and click "configure":

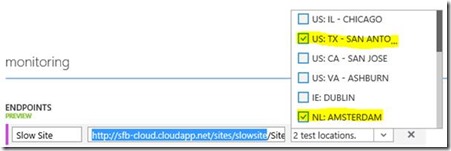

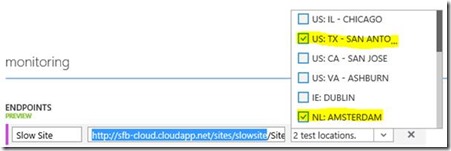

At the bottom I can add endpoint monitoring. This is so simple it's insane; all I have to do is add the URL for Azure to ping and then from which parts of planet Earth I want to ping from.

Save changes and that's about it; Azure will now ping the URL every 5 minutes & log the response time so you can monitor it, along with other metrics.
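
For a feel of what's being measured, here's a minimal Python sketch that times one page fetch. It's only an approximation of what a monitoring probe does (Azure's own implementation isn't shown in this post); the `response_time` name and the `fetch` parameter are my own, with `fetch` there so the timing logic can be tried without a network.

```python
import time
import urllib.request


def response_time(url, fetch=urllib.request.urlopen, timeout=30):
    """Return the seconds taken to request `url` and read the whole response."""
    start = time.perf_counter()
    with fetch(url, timeout=timeout) as response:
        response.read()            # include download time, not just first byte
    return time.perf_counter() - start
```

Calling `response_time("https://your-site.example/")` every few minutes from a scheduler and logging the result gives the same kind of metric, just from one location rather than several.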

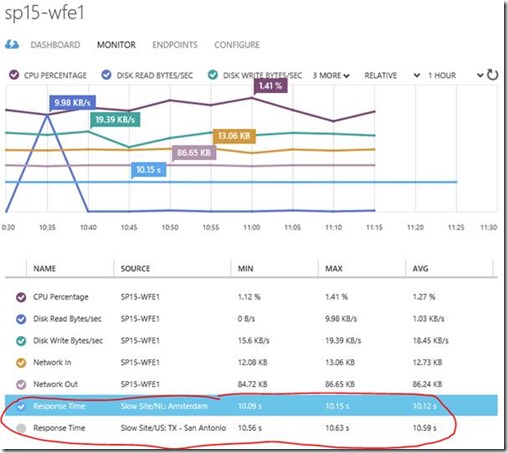

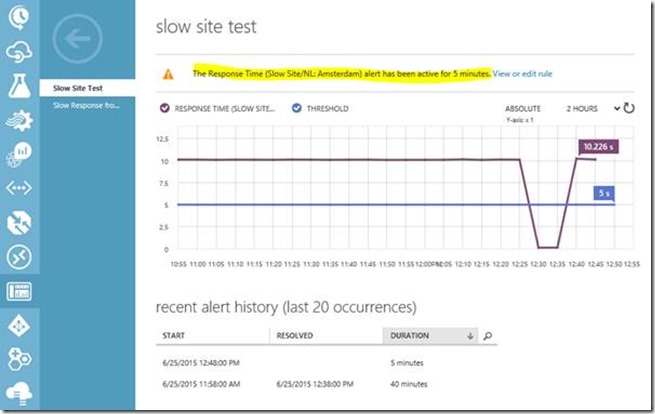

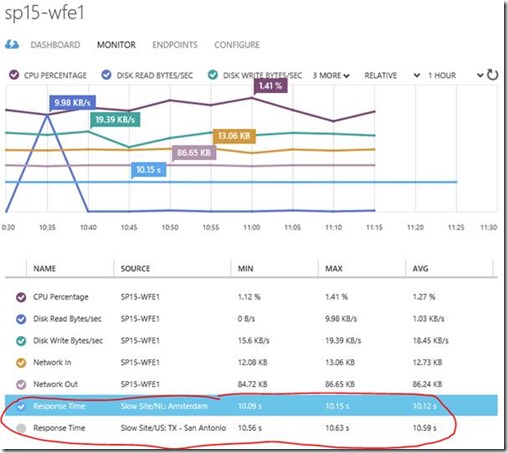

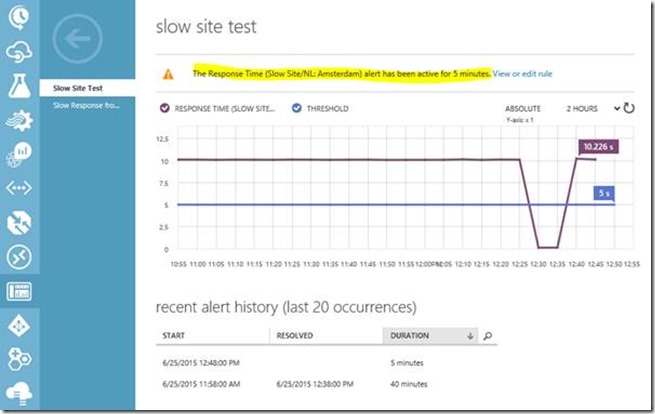

Here you can see the response time from Amsterdam, taking a suspiciously long 10 seconds to send back the page.

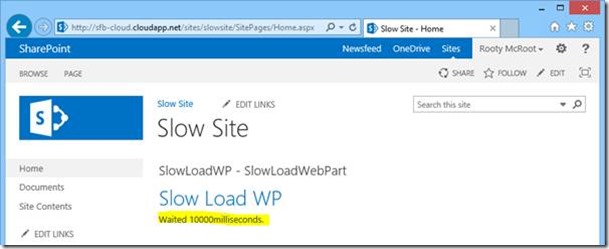

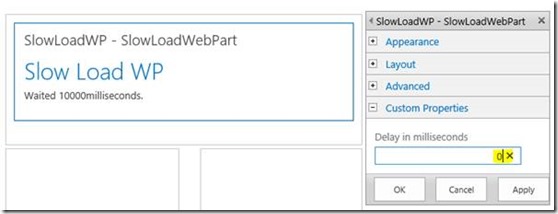

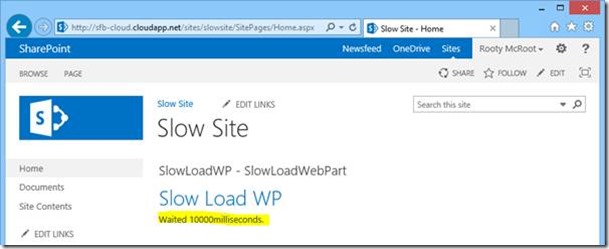

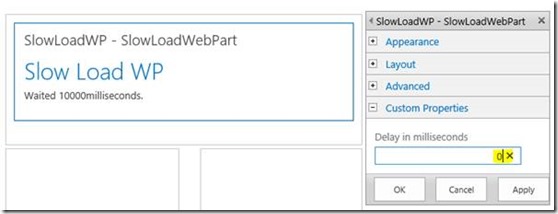

Why so slow? Well the site in question has a quickly hacked together web-part that lets me slow down the page render by whatever I configure in the web-part properties; 10 seconds in this case:

It's not pretty but it works, and it's handy for the next demonstration…

Add Rule to Monitoring Response

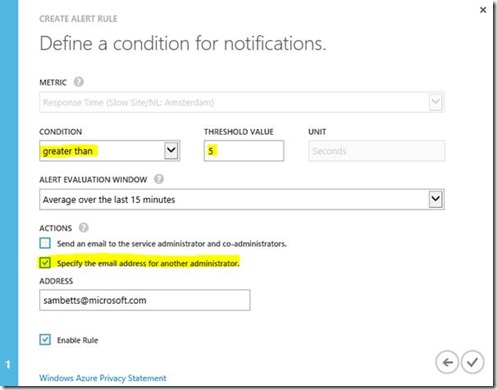

Even better, once you've got your endpoint monitors created you can now setup monitoring alerts on them. Add a rule for any of the metrics just by clicking on it & "add rule":

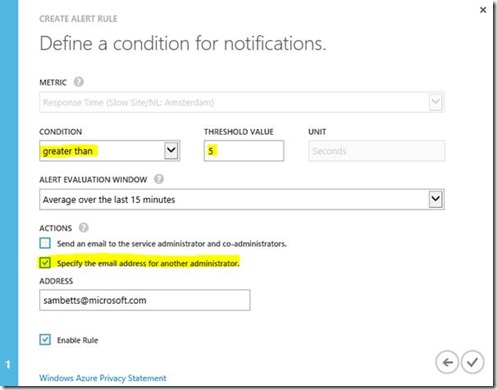

This will let you fill out a name/description, and then the conditions for the rule:

…and that's about it. Now to what happens when alerts activate or resolve…

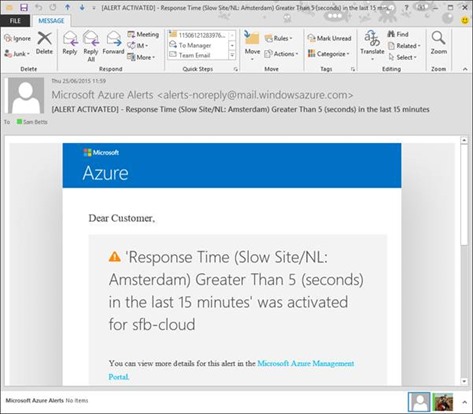

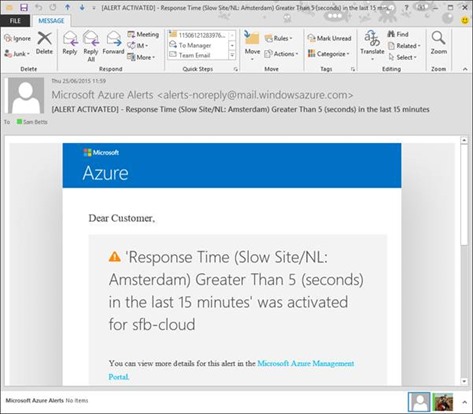

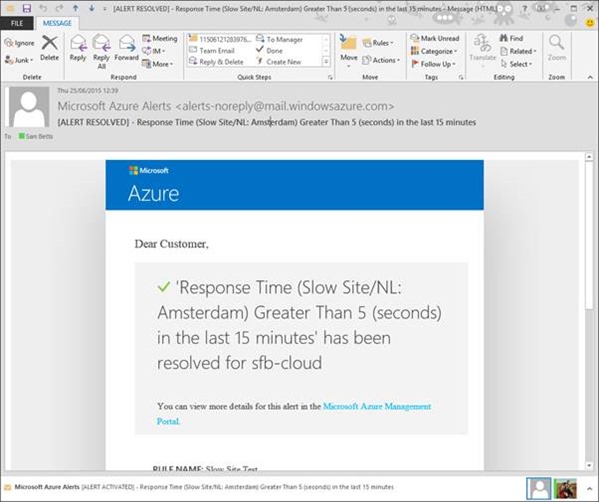

If the alert becomes active it means the condition for the rule you setup is being met, or worse. In my case, pages are taking 10 seconds to load so the condition is easily met and Azure sends me a nice email telling me the alert is "active":

Thanks Azure! Now in the portal we can see more information…

Clearly we have a problem with that site; in our case, the problem just being we have a web-part that kills performance.

Resolving Alerts

Once the page loads below 5 seconds, say because said web-part isn't killing performance anymore:

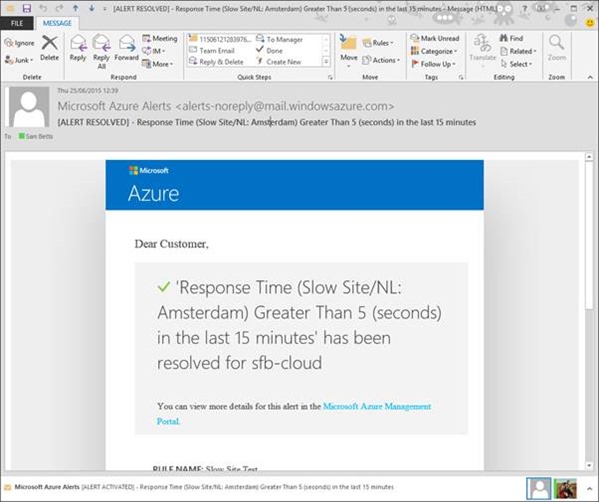

…then the alert should become "resolved" again once the average response time is back under the rule's limit (15 minutes; 5 second average response max).

Hurrah – we're back to normal operation again.
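
The active/resolved logic for a rule like this can be sketched in a few lines of Python. This is an assumption about how such a rule behaves, not Azure's actual evaluation code: with a ping every 5 minutes, a 15-minute window is taken here to mean the last three samples.

```python
def alert_state(samples, threshold=5.0, window=3):
    """Return 'active' if the average of the last `window` samples exceeds
    `threshold` seconds, otherwise 'resolved'."""
    recent = samples[-window:]
    if not recent:
        return "resolved"          # no data yet, nothing to alert on
    average = sum(recent) / len(recent)
    return "active" if average > threshold else "resolved"


print(alert_state([10.0, 10.0, 10.0]))              # slow web-part in place
print(alert_state([10.0, 10.0, 10.0, 1, 1, 1]))     # web-part fixed
```

Note that because it's an average, one fast response right after a run of slow ones won't resolve the alert; the window has to fill up with good samples first.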

But My SharePoint Sites Aren't Anonymous!

In case you've not guessed already, Azure can only make anonymous requests to an end-point. This means whatever SharePoint site/page you configure for it needs to be enabled for anonymous access in order for this to work.

For some that might sound unappetising at first but all that's required really is a test site that's setup exclusively for this performance-pinging purpose. An entirely self-contained site somewhere with no confidential data (or even just with junk data) but with enough data to make a page-load as life-like as possible for a real user. Making the ping-tests load about the same amount of data from the site lists is the goal; to generate the same load on the farm with ping-tests as a real user would on a real site, and of course enabled for anonymous access so Azure doesn't just get HTTP 401s back each ping.

At the end of the day, this is about gauging performance of how the whole system responds; any test site will share the same content-database, IIS configuration and hardware limitations as your non-public sites. We just want a dummy site that should give the servers the same load as a normal site, whatever & however that will be. Once done, Azure can provide the ping-tests & alerts, and we can figure out what to do about any problems that Azure flags.

Wrap-Up

That's it! It's pretty simple stuff really & very easy to setup, but quite powerful if you need to react quickly to slowness. As we have our farm in Azure, a simple solution could be to re-dimension various virtual machines until we can figure out the culprit bit of code, albeit for our thread-sleeping web-part that wouldn't have helped of course.

Incidentally, there's nothing stopping you from monitoring an on-premises SharePoint farm the same way; Azure just needs a VM to add the HTTP endpoints to. But anyway, have a play – it's all cool stuff!

Cheers,

Sam Betts

SharePoint Search Service - Failover & Outage Resiliency

I thought I'd share some tests I've done on how much more resilient the new search engine is to server outages now in 2013 just because I've done some research on it just recently. It's especially nice for consuming farms because there's a nice abstraction from any server apocalypse going on in the service publishing farm; the consuming farm just carries on anyway and everything keeps working nicely.

Back in the days of 2010, if your single search administration server went down then you could kiss goodbye to the search service application it was administering and also any relying apps/services/web-parts/pages that needed it. No more in 2013; there no longer need be one single point of failure for your search topology, if you have the hardware to set up a decent topology that is.

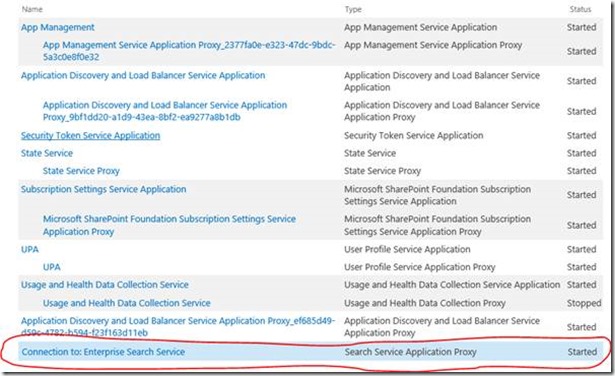

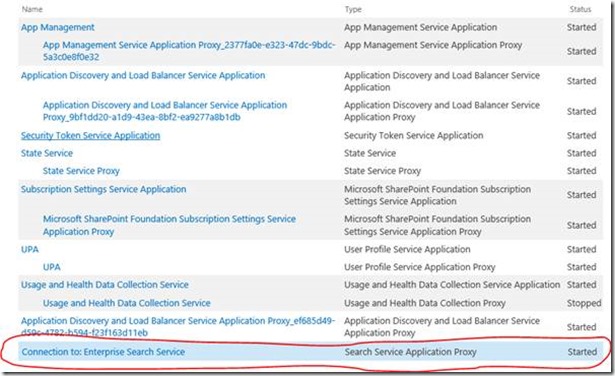

Anyway, here you see a connection to a published search service from a consuming farm:

The publishing string for the published search-app is:

urn:schemas-microsoft-com:sharepoint:service:d184aa7911cb41269598b3780592ff52#authority=urn:uuid:ef685d49d59c4782b594f23f163d11eb&authority=https://sp15-search-crl:32844/Topology/topology.svc

Notice the server being mentioned there.
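
If you want to pull that server name out programmatically, here's a minimal Python sketch. It assumes the string always has the shape shown above (a `#` followed by `&`-separated `authority=` parts, one of them an https URL); that layout is inferred from this one example rather than from any published format, and the function name is made up.

```python
from urllib.parse import urlsplit


def topology_host(publishing_urn):
    """Return (host, port) of the topology service named in a publishing URN."""
    cleaned = publishing_urn.replace(" ", "")      # tolerate copy/paste spaces
    _, _, fragment = cleaned.partition("#")
    for part in fragment.split("&"):
        key, _, value = part.partition("=")
        if key == "authority" and value.startswith("https://"):
            url = urlsplit(value)
            return url.hostname, url.port
    raise ValueError("no https authority found in publishing URN")


urn = ("urn:schemas-microsoft-com:sharepoint:service:"
       "d184aa7911cb41269598b3780592ff52"
       "#authority=urn:uuid:ef685d49d59c4782b594f23f163d11eb"
       "&authority=https://sp15-search-crl:32844/Topology/topology.svc")
print(topology_host(urn))
```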

High Availability SharePoint Search

If we look at that topology on the publishing farm I've basically triplicated all the services. Admittedly this isn't so normal for performance reasons but it's setup that way just to demo the point.

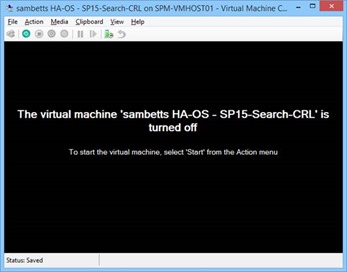

Now let's kill the server in the publishing string.

We can see the effect fairly immediately in the search management page:

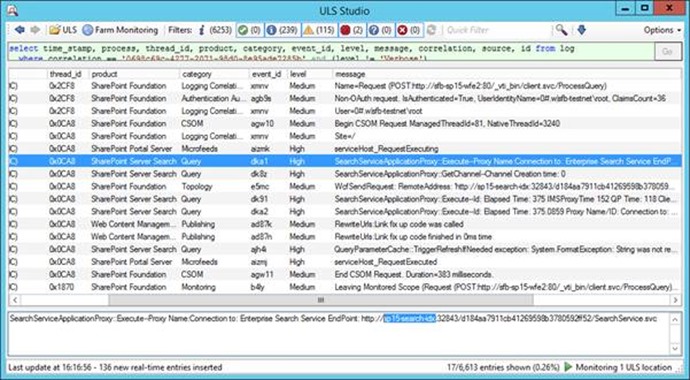

Clearly there's a problematic server there; one that the web-front-end in the other farm was going to use.

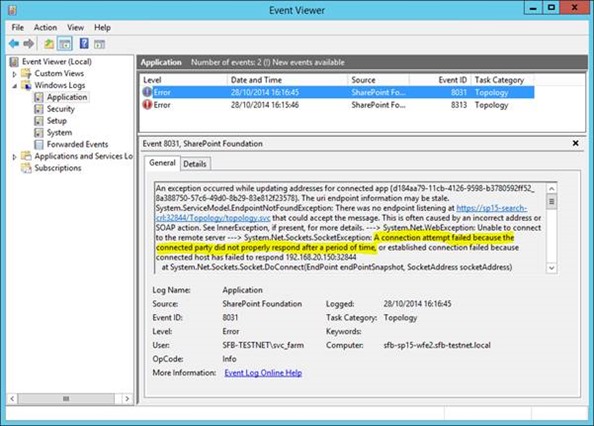

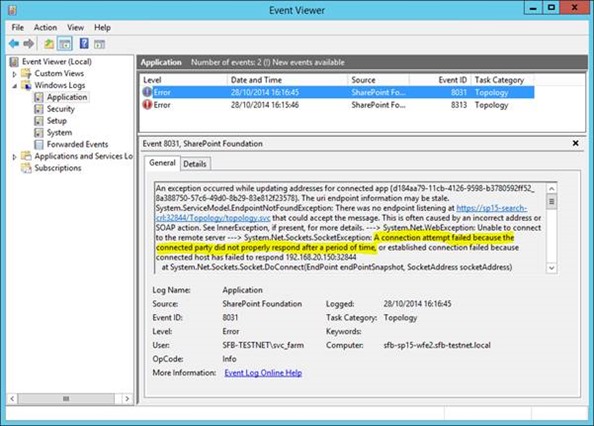

If we look at the event-logs on said WFE we can see a health warning thrown up by a timer-job – it's nice to know things might be a bit stormy; the consuming farm isn't aware what the impact will be of course so flags it just in case.

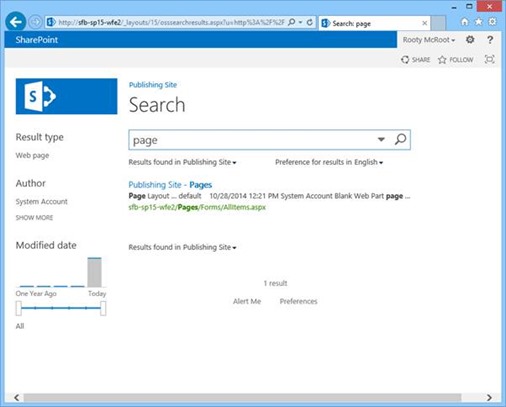

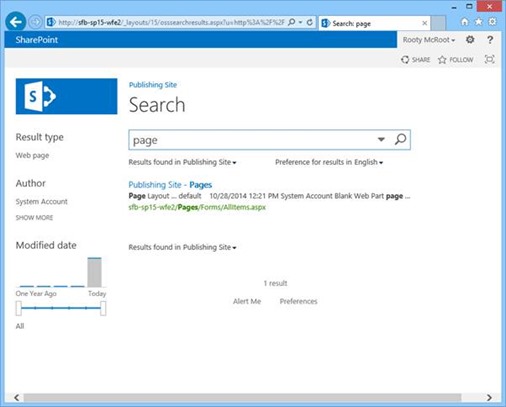

Never mind though because crucially, as there was no single point of failure, searches still work no problem for our consuming farm/web-application:

Here we see the web-front-end (WFE), it has adapted just fine to the outage and search results are coming in anyway.

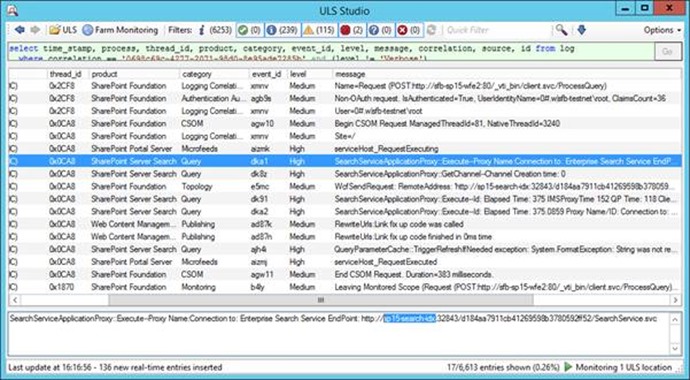

Looking at the WFE's logs you'd be forgiven for not realising there was even a problem.

Notice the new server-name in there (there is one; the screenshot isn't particularly clear). No errors or warnings; as far as that concrete query operation is concerned there is no problem.

And that's it!

Obviously it's not magic; if there's not enough redundancy built into your topology then it'll all come crashing down but I could turn off any one of the search servers and nothing really would happen. It's a highly-available search solution, finally!

Also this isn't specific to published apps either, just the messages are nicer. Anyway, I hope someone found it useful!

Cheers,

// Sam Betts

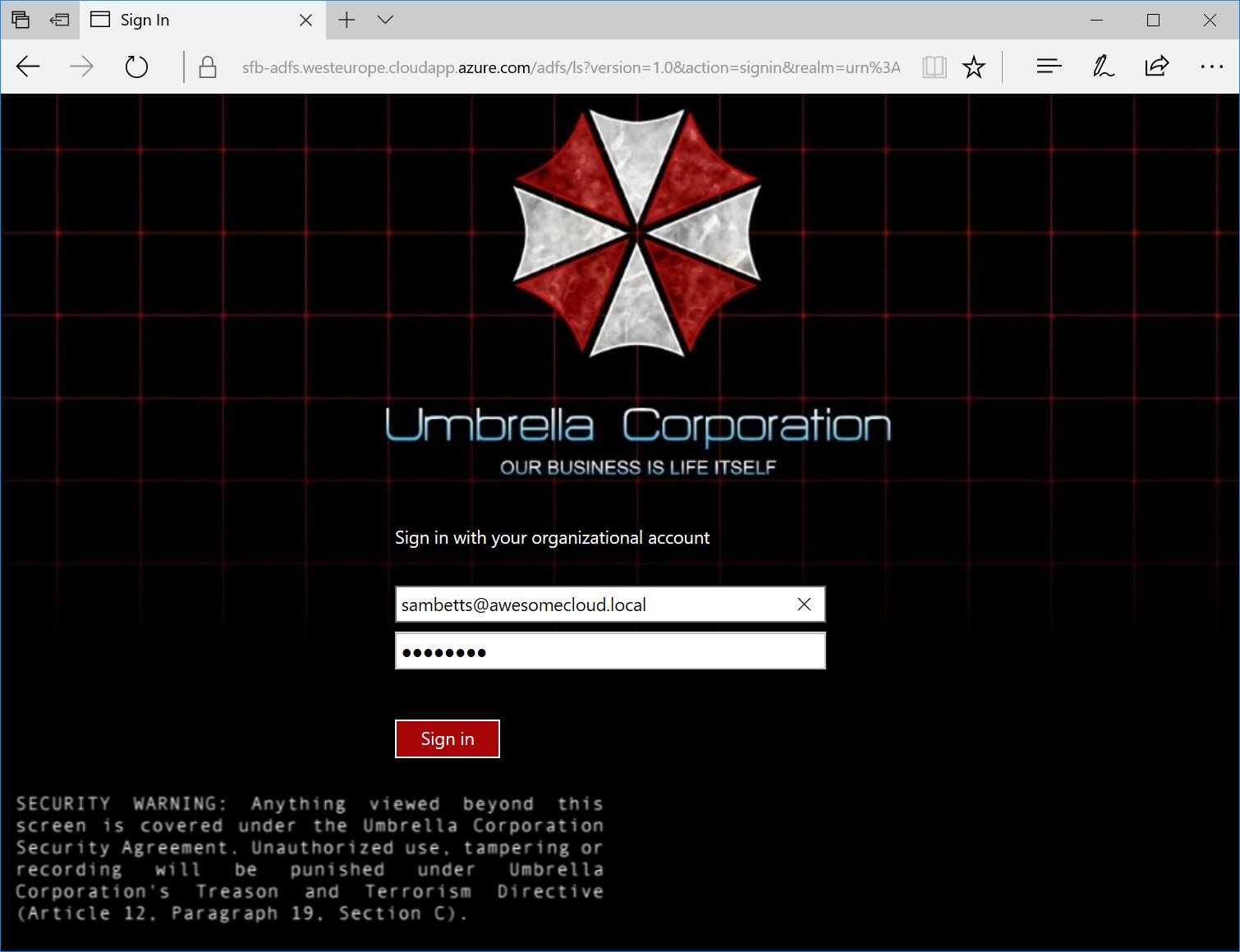

SharePoint Server and Web Application Proxy – continued

So having played around with the Windows Server role "Web Application Proxy" a lot more recently after some initial testing, I've learnt a few more things about the two working together that I thought worthy of sharing. Windows Web Application Proxy (WAP for short) is a great way of protecting SharePoint from being exposed directly to the internet at large – it's not as feature-complete as TMG Server, but it's a nice wrapper that's very simple to set up & secure.

Specifically I wanted to focus on the two different ways Web Application Proxy can be used to front authentication to SharePoint…

SharePoint Authentication Methods – Claims/ADFS or Windows

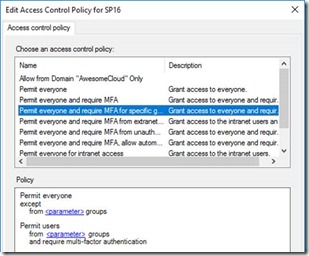

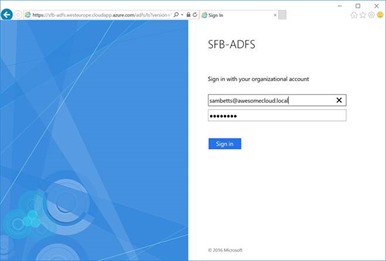

There's two modes SharePoint can be used in with Web Application Proxy + ADFS, depending on how you've got SharePoint set up. If you have ADFS already configured in SharePoint you can use Web Application Proxy in claims-aware mode. If you don't, selecting an ADFS relying party that's not claims-aware is all you have to do, at which point WAP will assume the backend is Kerberos.

In either mode the ADFS server will be used for user logins & authentication for the proxy endpoint; the only difference is how Web Application Proxy validates you on the backend, transparently to the user. Either it'll pass the token directly to the claims/ADFS SharePoint website or it'll convert it to a Kerberos ticket for Windows authentication.

Windows Authentication SharePoint Backend

This mode should be used when you want to keep your Windows authentication in SharePoint without migrating to claims and is the most complicated to setup. It needs the WAP server on the same domain SharePoint servers are on, Kerberos configured & working for SharePoint HTTP, and each WAP server needs Kerberos configured too.

This means:

- SPNs for the external address given to each WAP server machine account.

- In my example I have proxy.contoso.com for my external address so each WAP server needs HTTP/proxy.contoso.com in the list of service-principal-names.

- Constrained delegation needs to be enabled for the SharePoint application SPN.

- Each WAP machine account needs to delegate to SHAREPOINT\svc_app_pool in my example, which has the internal SPN of HTTP/sp15.

Note: NTLM doesn't work for WAP back-ends – it has to be a valid & working Kerberos configuration to the front-ends from the WAP server. I say "valid and working" because if you have Kerberos "configured" and WAP doesn't work, it's possible that in fact Kerberos wasn't fully configured and connections were falling back to NTLM for user sessions. Well, that fall-back isn't possible with Web Application Proxy – Kerberos must be fully working if SharePoint is to work with non-claims.

Claims-Aware (ADFS) SharePoint Backend

In this mode WAP lets ADFS handle the login, then redirects back to the WAP URL with the authentication cookie set just like a normal ADFS login. This is much simpler than a Windows authentication back-end because there's no conversion needed from the ADFS claims -> Windows token; SharePoint just accepts the ADFS token directly and it all just works.

Another benefit to this mode is that because we're not delegating Windows identities the WAP server(s) don't need to be on the same domain as SharePoint, so it's arguably more secure. The argument being of course that compromising the edge servers won't get you any closer to domain access, which is true, so there's that too.

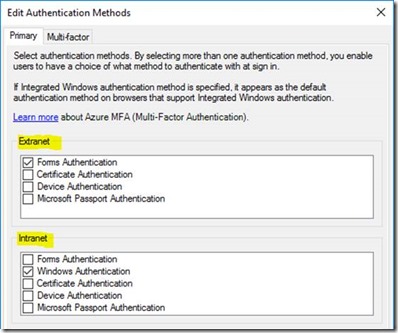

Use Single Authentication-Method Endpoints for WAP-SharePoint Back-ends

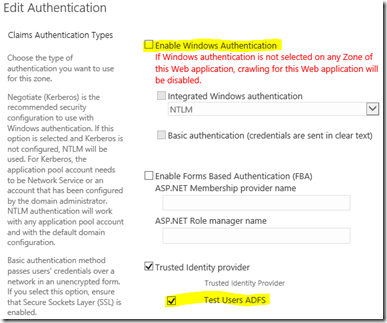

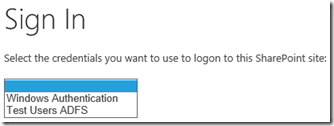

Whichever way you configure WAP server, either claims or not, the endpoint that WAP redirects traffic to must have exclusively the same authentication method configured that's expected for the WAP server.

That's to say that if the proxy address is claims-aware/ADFS then the SharePoint site & zone that the proxy sends to should only and exclusively be configured for the same ADFS provider. Equally, if the proxy endpoint is non-claims aware, the endpoint that WAP sends traffic to should only have Kerberos authentication configured.

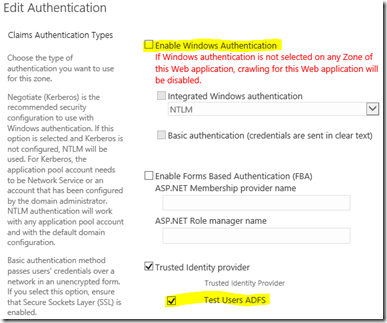

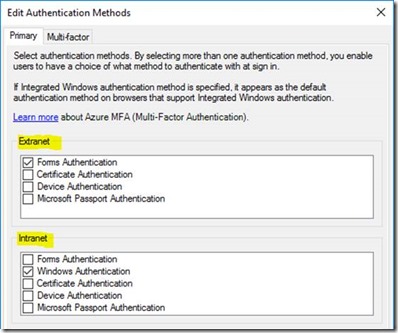

In fact, if your Kerberos site has other authentication methods enabled too then the whole thing breaks. For ADFS sites however, if you don't have the target zone configured like this:

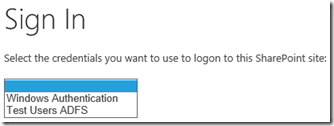

…after logging into ADFS, users will see this:

Single authentication methods only for internal WAP URLs to avoid this.

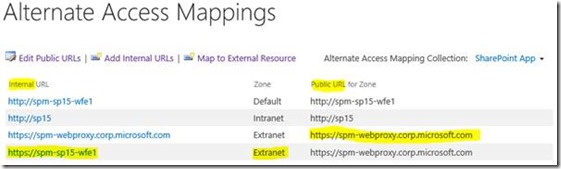

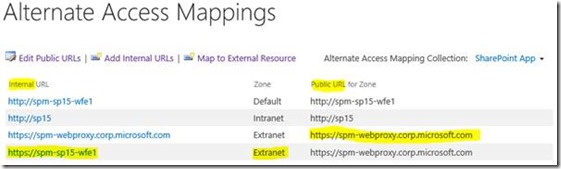

Setup Alternative Access Mappings for Web Application Proxy URLs

Finally, SharePoint does need to know about the URLs being used to access the application, both internally and externally. This is only really necessary if the URL being used to access the web application proxy is different to the URL that WAP is redirecting to – if both internal & external URLs are the same then you can skip this bit.

1st, make sure there's an extranet/custom/whatever zone with the address the web-application-proxy is being accessed on for SharePoint. Then add an internal URL so SharePoint knows how it's going to be called by WAP.

The URL used has to be added via "internal URLs" pointing at the public zone URL setup for the web-proxy address. In other words, the "extranet" zone is configured for the proxy address (https://spm-webproxy…/) and an internal URL is added for the address WAP is using to forward requests to, pointing at the "extranet" zone. That way SharePoint knows what the "internal" URL is for the outside address, and everything works just fine.

Note: you can't have a public zone already with the internal address already configured for this internal/external match to work – if you try and add an internal URL that already exists as a public URL then you'll get an error when you add it.
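
The relationship between zones, public URLs and internal URLs can be modelled in a few lines of Python. This is a toy model to show the idea, not SharePoint's object model; the class is invented, and the two URLs reuse the `proxy.contoso.com` and `sp15` names from the Kerberos example earlier purely as placeholders.

```python
class AlternateAccessMappings:
    """Toy model of zones, public URLs and internal URLs for one web application."""

    def __init__(self):
        self.public = {}     # zone name -> public URL
        self.internal = {}   # internal URL -> zone name

    def set_public_url(self, zone, url):
        self.public[zone] = url

    def add_internal_url(self, url, zone):
        if url in self.public.values():
            raise ValueError(f"{url} already exists as a public URL")
        if zone not in self.public:
            raise KeyError(f"zone {zone!r} has no public URL yet")
        self.internal[url] = zone

    def public_url_for(self, incoming_url):
        """Map the URL a request arrived on to the URL shown to the user."""
        if incoming_url in self.public.values():
            return incoming_url
        return self.public[self.internal[incoming_url]]


aam = AlternateAccessMappings()
aam.set_public_url("Extranet", "https://proxy.contoso.com")   # what users type
aam.add_internal_url("https://sp15", "Extranet")              # what WAP forwards to
print(aam.public_url_for("https://sp15"))
```

Requests arriving on the internal address get links rendered with the public one, which is what keeps Office and the browser agreeing on where a file lives.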

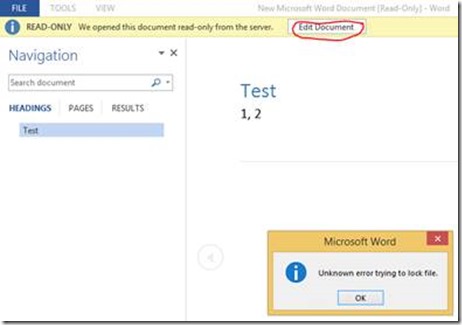

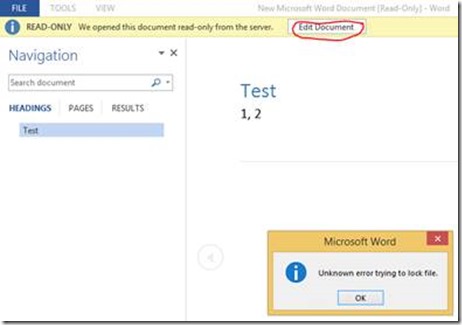

Anyway, the point is that without this matching perfectly you'll get errors and specifically with Office, when you try and edit a file, Office is likely to throw its toys out the pram with an "unknown error trying to lock file" error or something similar.

Setup your alternative access mappings correctly and all should work. Also remember that OneDrive for Business will fail to synchronise without AAMs setup correctly.

Wrap-up

That's it! SharePoint and Web Application Proxy work great together once you know how they fit together. Hopefully this has helped.

Cheers,

// Sam Betts

SharePoint & SQL Server AlwaysOn Failovers – Video Demonstration

To demonstrate how SharePoint and SQL Server AlwaysOn work together, especially with a failover, I did a quick video to show it in action. Here it is, enjoy (https://www.youtube.com/watch?v=se_M1vdriMA if the embedded video doesn't load):

SharePoint 2013 with SQL Server AlwaysOn

SQL Server AlwaysOn is a key tool in maintaining a high-availability SharePoint solution.

More information on how to implement it @ https://blogs.msdn.com/b/sambetts/archive/2014/05/16/sharepoint-2013-on-sql-server-alwayson-2014-edition.aspx

Cheers,

// Sam Betts

SharePoint + SQL Server AlwaysOn: Outage Troubleshooting

So you have a SharePoint/SQL outage despite having SQL Server AlwaysOn configured, and you naturally want to know why. Event IDs 5586 and 6398 are flooding the SharePoint servers, and in the application log on the SharePoint servers you might see either this:

Unknown SQL Exception 10060 occurred. Additional error information from SQL Server is included below.

A network-related or instance-specific error occurred while establishing a connection to SQL Server. The server was not found or was not accessible. Verify that the instance name is correct and that SQL Server is configured to allow remote connections. (provider: TCP Provider, error: 0 - A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond.)

…or this:

Unknown SQL Exception 976 occurred. Additional error information from SQL Server is included below.

The target database, 'SharePoint_Config', is participating in an availability group and is currently not accessible for queries. Either data movement is suspended or the availability replica is not enabled for read access. To allow read-only access to this and other databases in the availability group, enable read access to one or more secondary availability replicas in the group. For more information, see the ALTER AVAILABILITY GROUP statement in SQL Server Books Online.

Either way, this will be what the users see – a non-working SharePoint site across all pages:

This post is all about how you can figure out why SharePoint's databases have disappeared and how to get them back. This guide does assume that all databases are on the same SQL instance and everything is offline. To some extent this guide works for single-server installations too.

Step 1 – Identify SharePoint's SQL Endpoint Name

SharePoint can connect to SQL in a bunch of ways; as long as the TCP traffic ends up at a SQL Server instance of some kind it's not bothered about how it got there. We, on the other hand, do care about how SQL traffic should arrive at SQL Server because somewhere along the chain, it's broken. We have to figure out where.

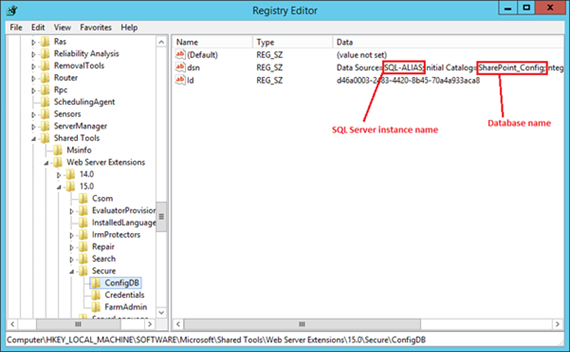

Where is the Configuration DB?

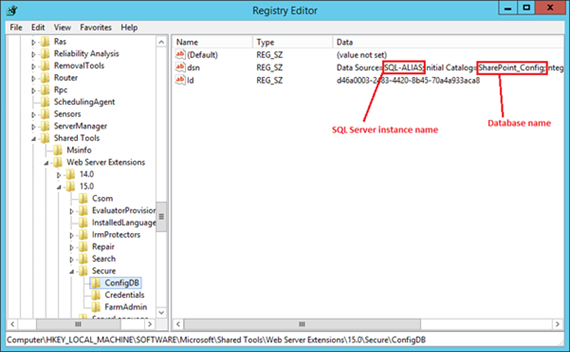

Let's assume all databases are out so using PowerShell or Central Admin just isn't going to happen – we need an "offline" way of getting back-end data. On the SharePoint server open the registry editor (be careful in there), go to "HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Shared Tools\Web Server Extensions\15.0\Secure\ConfigDB" and check the "dsn" value.
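As a rough sketch of reading that value: the "dsn" entry is a connection string, so the instance name SharePoint connects to is whatever follows "Data Source". The example string below is hypothetical (it reuses the "srvSQL1\SP" example name from this post), and the helper name `parse_dsn` is my own; copy the real value out of the registry by hand.

```python
def parse_dsn(dsn: str) -> dict[str, str]:
    """Split a 'Key=Value;Key=Value' string into a dict with lower-cased keys."""
    settings: dict[str, str] = {}
    for part in dsn.split(";"):
        if "=" not in part:
            continue  # ignore empty segments such as a trailing ';'
        key, value = part.split("=", 1)
        settings[key.strip().lower()] = value.strip()
    return settings


# Hypothetical "dsn" value; the real one comes from the registry entry above.
example_dsn = r"Data Source=srvSQL1\SP;Initial Catalog=SharePoint_Config;Integrated Security=True"
print(parse_dsn(example_dsn)["data source"])
```

Whatever comes back for "data source" is the instance name to carry into the next checks.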

So the first question is, what really is the instance name that SharePoint is trying to connect to? An alias or real endpoint?

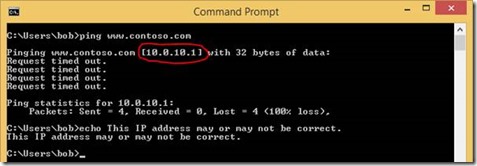

In my example it's pretty obvious just from the name but most installations have their own (often quite cryptic) naming conventions that might complicate things. We'll find out regardless by pinging the server name.

Get SQL Endpoint Name - Is this a Non-Default SQL Instance?

Does the instance name have a backslash ("\") in it?

Yes? The SQL endpoint name is the bit before the backslash. No backslash in the instance-name? The endpoint name is the whole thing.

An example SQL instance name could be "srvSQL1\SP" – the endpoint name is "srvSQL1".
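The backslash rule above is simple enough to sketch in a couple of lines. The function name `endpoint_name` is just an illustration, not anything SharePoint or SQL Server provides.

```python
def endpoint_name(instance_name: str) -> str:
    """Return the part before the first backslash, or the whole name if there is none."""
    return instance_name.split("\\", 1)[0]


print(endpoint_name(r"srvSQL1\SP"))  # named instance: endpoint is the bit before the backslash
print(endpoint_name("sp15-ao"))      # no backslash: endpoint is the whole thing
```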

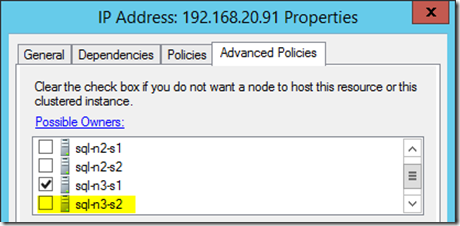

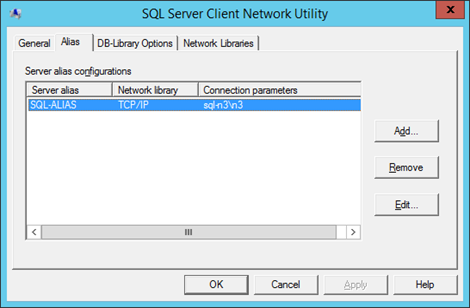

Get Real SQL Server Name – Is Endpoint Name a SQL Alias?

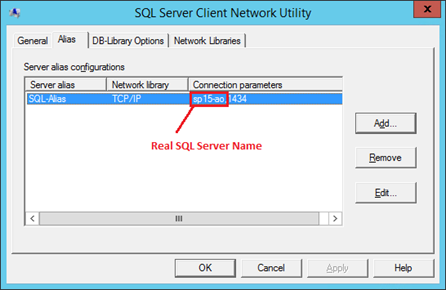

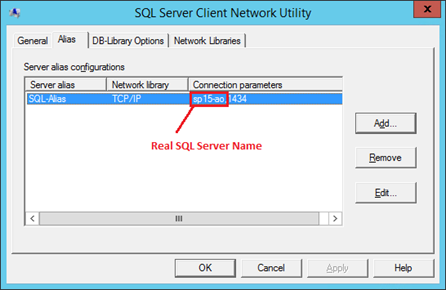

Open "cliconfg" from the "run" command on the SharePoint machine. Click on the "alias" tab – if you see the endpoint name listed here then SharePoint is using an alias.

In my example, the SQL Server name is "sp15-ao" – SharePoint isn't even connecting to a real server.

Step 2 - Identify What Exactly the SQL Server Is

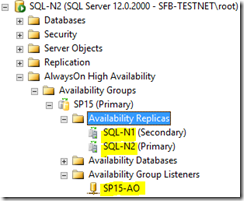

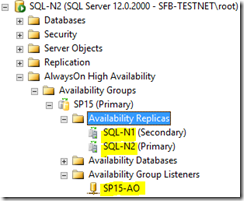

We need to figure out whether the SQL Server is an actual server/replica or an AlwaysOn listener.

You'll need to find this out by either asking a SQL Server guy or figuring it out from the servers themselves.

Here you can see my SharePoint is clearly using a listener. If SharePoint was using a replica instead of a listener we could have a problem (see below).

Is SharePoint Using a Replica?

If by now you see SharePoint is actually using a replica directly then that is your problem. If you're using an alias with "cliconfg" then you can just edit the alias to point at the primary replica and SharePoint should be good again.

If SharePoint is not using a SQL alias and instead is just pointing directly at a replica then you can create an alias with the same name as the replica but have the alias point at the real primary, as a temporary hack to get up & running.

If you want automatic failover you should be using an AlwaysOn listener, but at least for now you can get SharePoint online again. Update or create the alias on each SharePoint server set up this way & enjoy a working SharePoint again. Plan to use the listener in the future – that's what it's there for, so you don't have to do these manual changes every time there's a failover.
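To make the alias trick concrete, here's a minimal model of what a client-side alias does: a name lookup that happens before the connection is made. The server names are invented for illustration, and treating alias names as case-insensitive is my assumption.

```python
def effective_sql_target(endpoint: str, aliases: dict[str, str]) -> str:
    """Return the server a connection to `endpoint` really goes to, given client aliases."""
    lookup = {name.lower(): target for name, target in aliases.items()}
    return lookup.get(endpoint.lower(), endpoint)


# Hypothetical names: SharePoint is configured for "sql-node1", which is no longer
# the primary, so the alias temporarily redirects that name to "sql-node2".
aliases = {"sql-node1": "sql-node2"}
print(effective_sql_target("SQL-NODE1", aliases))  # redirected by the alias
print(effective_sql_target("sql-node3", aliases))  # no alias, used as-is
```

SharePoint's configuration never changes here; only the lookup on each SharePoint server does, which is why the alias has to be updated on every one of them.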

The end.

Is SharePoint Using an AlwaysOn Listener?

If we've figured out that actually the SharePoint server is using an AlwaysOn listener (even through an alias) then we need to figure out why SharePoint can't see it.

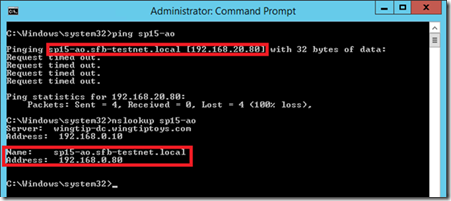

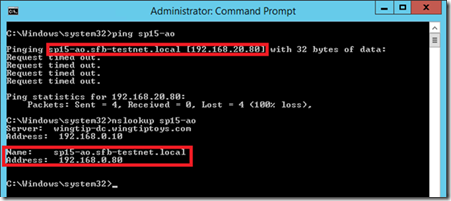

First, run a PING & nslookup test to the SQL listener name to see what we get.

NSLOOKUP Returns Single IP Address

This tells us all we need to know to fix this specific problem – we can see that PING resolved the name to a different IP address from the one nslookup gave us (192.168.20.80 and 192.168.0.80 respectively). What does that mean? Quite simply that the DNS server 192.168.0.10 holds a different IP address (A-record) for the listener than the one the SharePoint server was using, because the SharePoint server had cached the old one.

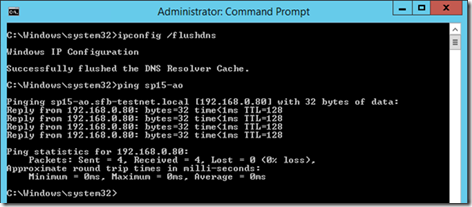

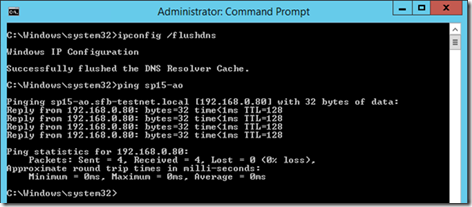

Easy fix in this example: clear the DNS cache with "ipconfig /flushdns". Once done, try pinging the endpoint again.

It works! And so will SharePoint now.

Why did this happen? A SQL AlwaysOn failover happened and SharePoint hadn't caught on in my instance because the local DNS cache hadn't updated. The resolution is simple: either flush the cache or disable the DNS client service which does the caching.
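The ping-versus-nslookup comparison boils down to one check: is the address the machine actually used among the addresses the DNS server hands out? A small sketch using the two addresses from my example; the function name is made up, and you'd paste in the values you read off the two commands.

```python
import ipaddress


def cache_is_stale(pinged_ip: str, dns_answers: list[str]) -> bool:
    """True when the locally resolved address is not among the DNS server's answers."""
    pinged = ipaddress.ip_address(pinged_ip)
    return all(pinged != ipaddress.ip_address(answer) for answer in dns_answers)


# PING went to 192.168.20.80 but nslookup returned 192.168.0.80.
print(cache_is_stale("192.168.20.80", ["192.168.0.80"]))
# After "ipconfig /flushdns" both should agree.
print(cache_is_stale("192.168.0.80", ["192.168.0.80"]))
```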

Are There Multiple IP Addresses for NSLOOKUP?

You might be suffering from this problem.

Wrap-up

In my experience AlwaysOn is often implemented imperfectly (albeit rarely gravely so). Getting a fully automatic failover isn't easy but it is possible. Hopefully this post will have helped highlight some of the challenges in enabling fully automatic SQL Server failovers with SharePoint 2013.

Cheers,

Sam Betts

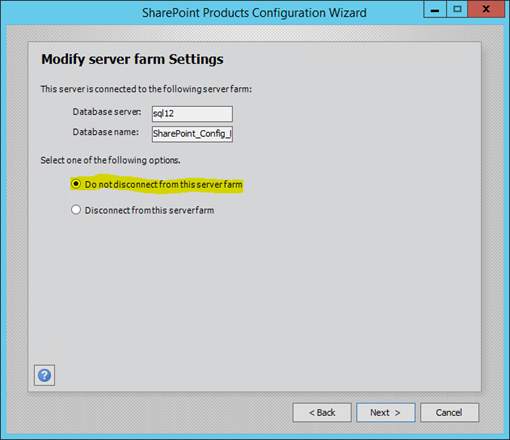

SharePoint Upgrade/Configuration Wizard Never Finishes

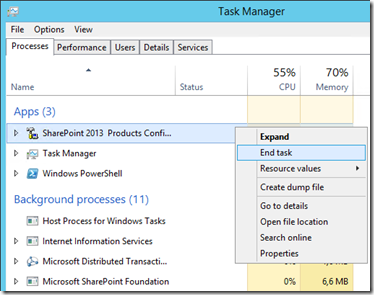

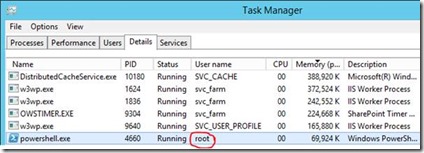

Some people may have had issues where the SharePoint Configuration Wizard never seems to finish an upgrade. This is a quick & dirty post to explain how to finish it, as cleanly as possible.

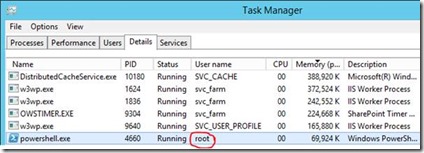

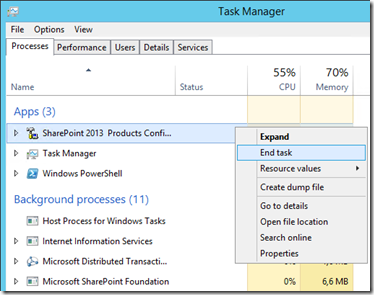

First, terminate the process via task-manager. Closing the form will only try to abort the dead upgrade process, which will never actually abort (so the wizard won't close either).

Ending a program via task manager sends it WM_CLOSE, and then drops the process by force if it doesn't respond within a few seconds. Well, the process hasn't crashed so the program will exit "cleanly" at least.

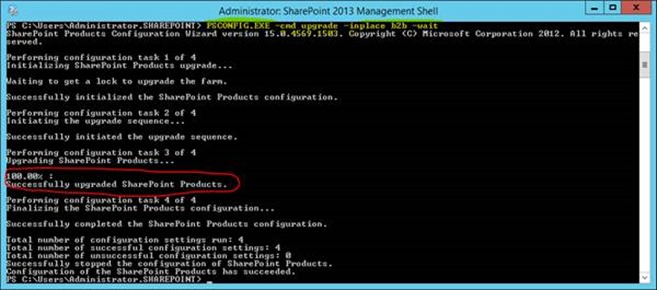

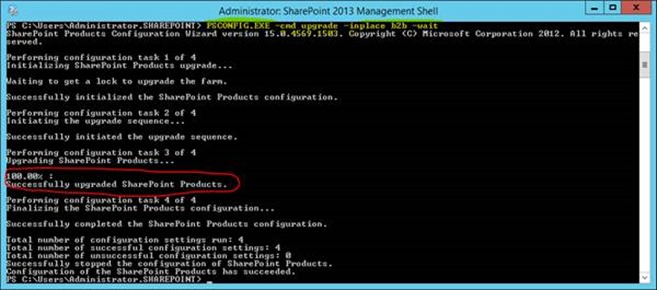

Now we just have to finish the upgrade from the command-line. Open a SharePoint PowerShell command-line & run:

PSCONFIG.EXE -cmd upgrade -inplace b2b -wait

This will work this time:

That's the hard bit done; the databases are all upgraded.

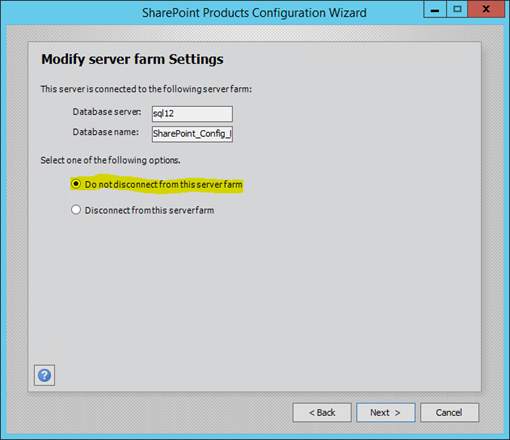

Next, let the wizard reinstall & reconfigure the application & features as we skipped this bit in PSConfig:

That's it! Server upgraded.

Cheers,

// Sam Betts

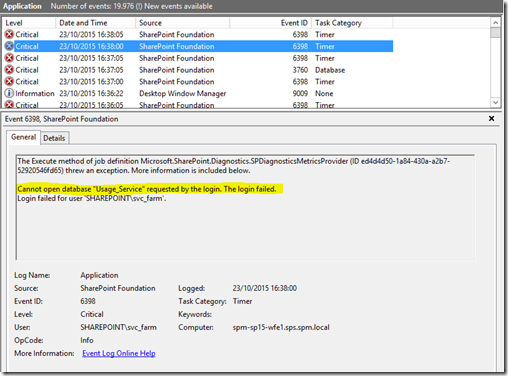

SharePoint Usage Database in SQL Server AlwaysOn

SharePoint has a very handy service-application that's normally running called the "SharePoint usage & health" application. It's actually very useful to have under normal circumstances as it logs pretty much everything you could ever want to know about the operational health of the SharePoint farm; page-load times & metrics along with the developer-dashboard data, search performance statistics, etc, etc. All great data for SharePoint administrators, but this is a quick post about why you shouldn't add it to your AlwaysOn cluster.

TL;DR: Don't bother adding the usage application database to AlwaysOn. If SharePoint can't reach it there's very little impact.

Want a longer explanation? Read-on…

Why Is It a Bad Idea to Have Usage Database in AlwaysOn?

The problem with the usage application database is that it's a very fluid database in terms of sheer number of updates to it, by design. Every page-load, every user-action and every admin job is basically another entry, and these updates quickly add up. The problem this causes is contention in the AlwaysOn data-synchronisation, as the usage updates will be competing with, say, content database updates too, and obviously the two are in entirely different categories of importance.

Also there's the small detail that for databases to be used with AlwaysOn they have to be in the "full recovery" model. Full recovery means every logged change stays in the LDF file until the transaction log is backed up, so with this many updates the log file will balloon very quickly in this mode.

But What Happens if SharePoint Usage Application Dies?

Not much actually; SharePoint doesn't need it to run core functionality – pages will still render as they did before, all the important processes will carry on no problem. Unless you look in the logs you basically won't notice anything's wrong, with the one exception of the developer-dashboard being mysteriously silent.

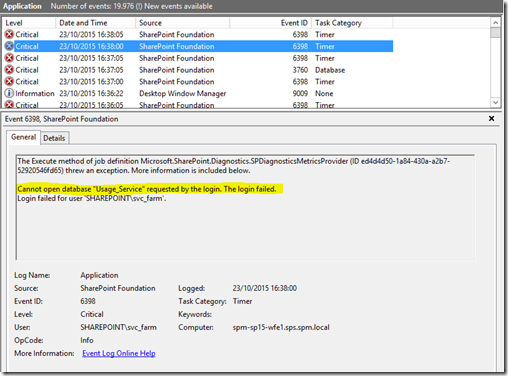

Here's an example of a SharePoint server which can't access the usage databases

You'd be forgiven for thinking these really are critical events being logged, and as far as the usage application is concerned they are. But in reality, again, using SharePoint (with the exception of some admin views), you're not going to notice.

So Should I Mount the Usage Database in SQL Server AlwaysOn?

No, is the short answer. "Definitely not" is the longer answer, unless you have boat-loads of network bandwidth & disc-space to burn. Should you have a failover, SharePoint will complain about not seeing the database any more but life will go on.

That's it!

Cheers,

// Sam Betts

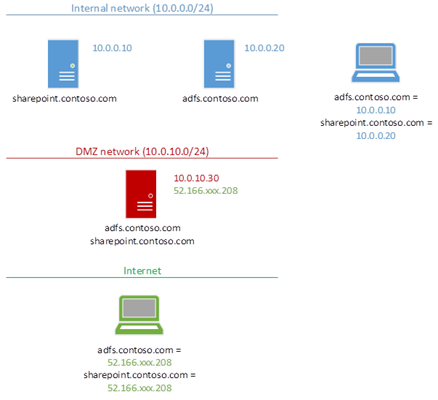

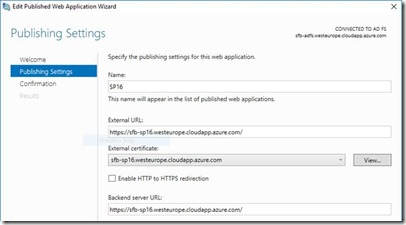

SharePoint + Web Application Proxy - 2016 Edition

I've done a couple of articles already on Web Application Proxy (WAP) with SharePoint, and figured it was time to update the series now Windows Server 2016 has improved on it. Here's how to set up SharePoint 2016 with Windows Server 2016 Web Application Proxy, at a high level.

First, a quick recap; why use WAP on top of SharePoint & ADFS? Because it gives you a way of exposing your public SharePoint applications to the internet without anyone outside having any line-of-sight to the SharePoint servers. This is important because it means nobody could overwhelm your SharePoint infrastructure without being authenticated first.

The idea is WAP sits in a DMZ, can even be completely unconnected to any domain, and just forwards requests to SharePoint once a valid ADFS token has been obtained.

This is very nice as we can isolate our precious production servers from any direct contact from outside.

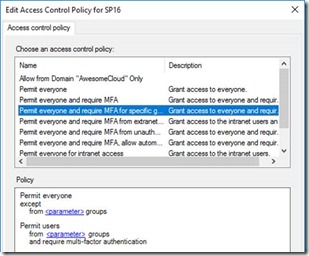

Also, WAP gives us extra authentication options for ADFS Server – we can insist on different security policies depending on whether the user has come in externally or internally.

How does ADFS know if a user is external or internal? Simple – a user request either came via the proxy or it didn't.

New Things in 2016

So what's new then? On the SharePoint side, not much as far as ADFS/WAP is concerned.

In WAP though we can now do things like:

- Use a single wildcard certificate for publishing applications. Much easier for things like SharePoint-hosted apps which have unique domain-names per app.

- Redirect to HTTP back-ends (HTTPS no longer required).

- Some other stuff.

All in all, quite a worthy upgrade for Windows Server Web Application Proxy.

Network Requirements

The network setup is key to the whole thing working.

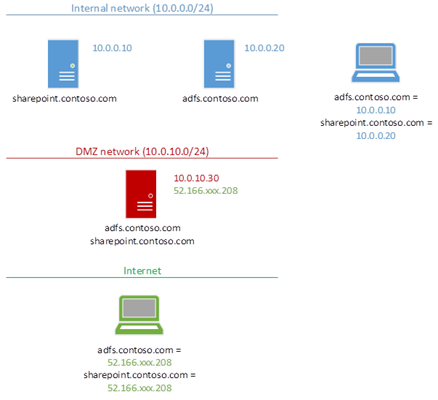

As our Web Application Proxy will be the contact point for all traffic both to SharePoint & ADFS, it needs to have the same SSL certificates & IP associations as internal SharePoint addresses do. Behold, figure 1:

WAP needs to be the external contact-point for everything needed internally; SharePoint & ADFS too.

It's true though that SharePoint doesn't necessarily need the same DNS names internally & externally, but I'd highly recommend it for simplicity's sake – alternate access mappings are a pain. Some network guys like to have visibly different internal & external URLs, but frankly it only causes headaches for SharePoint URLs – things that work inside the network magically don't work outside, etc.

Keep URLs the same please! Seriously…

SharePoint Security Configuration

For my tests, I've taken an internal ADFS-secured SharePoint web-application & simply published it externally. It is possible to have WAP convert ADFS tokens into internal Kerberos tokens for standard Windows-authenticated SharePoint web-apps instead, but I simply can't be bothered with protocol transitioning. Why? Because ADFS is simply a better security solution than Windows auth directly on SharePoint web-applications, and ADFS can authenticate with Windows auth anyway.

ADFS published websites are the way to go; forget native Windows authentication for SharePoint web-apps – you'll be doing yourself a favour in the long-run, IMO.

Why Use ADFS Instead of Windows Auth for SharePoint?

ADFS gives you a lot more control over identities. First, you can set what the "ID" (identifying claim) of each user will be; account-name, email address, whatever you want.

Second, we can manipulate the claims before they're sent to each client application (SharePoint in this case) if we want to. This can make things like domain-mergers much easier; users from a newly absorbed domain can appear to SharePoint like users in another domain for example.

Third is the extra security you have for authentication. Multi-factor-authentication is possible for users based on certain policies, etc.

Oh, and single-sign-on for Office 365/Azure AD can use it.

In the end, SharePoint isn't designed to handle authentication & identity policies; ADFS is and does it very well. SharePoint & ADFS complement each other very nicely.

Use it!

Publishing a SharePoint Web-App

So first up then let's make sure the network is working – ping/nslookup from inside & outside the network and verify the IP addresses are correct for each address, in all networks (internal, DMZ if you have one, and outside on the internet).

Then get your certificates installed on your WAP 2016 machine(s). Obviously WAP needs line-of-sight to your ADFS instance too. Do not continue until you have this infrastructure verified.

Now install the Web Application Proxy role. Configure the ADFS server being used.

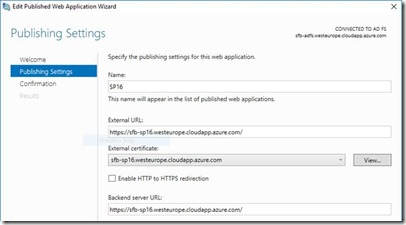

Finally publish your SharePoint application in WAP like so.

That's it!

Assuming your network setup is correct, clients should now be using WAP for all requests, and it'll just work.

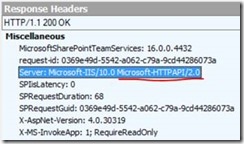

Testing for Proxy Usage

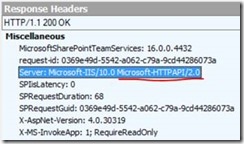

You can tell if you're hitting a WAP server instead of the real end-point from this header:

Here's a normal response if you're going direct to SharePoint:

That's it!

// Sam Betts

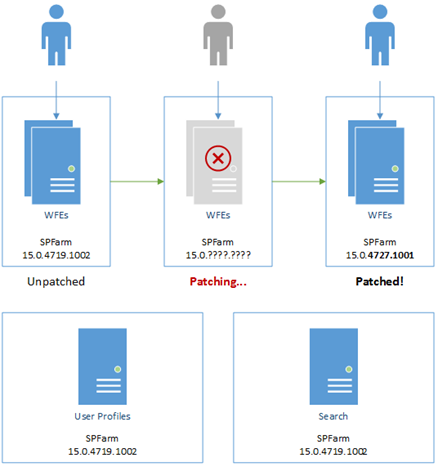

Smoother SharePoint Server Patching

Something that's always been a challenge in SharePoint on-premises, and in 2013 in particular, is deploying patches across the farm. SharePoint patches, updates and service-packs typically take a literal age to install, during which time whatever services are in the farm will all have to come down eventually (in 2016 this is greatly improved). In short, patching SharePoint is generally a nail-biting experience for any SharePoint admin whose job revolves around keeping SharePoint running.

There are some other workarounds too, like disabling services before upgrading but here's a new take on how to mitigate the SharePoint patching pain. There are several options to help alleviate SharePoint patching stress:

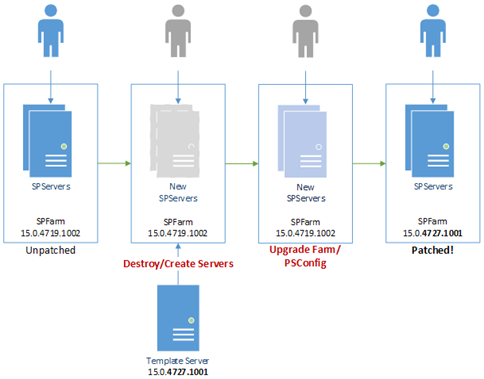

Divide up SharePoint Farm into Smaller Interconnected Farms

I'm increasingly coming to the opinion that a heterogeneous, or "all in one" SharePoint farm is a bad idea for various reasons. In other words, SharePoint farms with all the services in one place are bad, and the upgrading experience is a perfect example why.

When you upgrade SharePoint it's an all-or-nothing game; either all servers have to be on the same binary bits or nothing will work. Want to patch a search issue? That'll mean patching user-profile servers, web-front-end servers, and all the others that are lumped into the same farm too. There's no getting round it.

We have to patch all servers in the farm, regardless of whether the patch addresses services the server is running or providing. So…

Solution: have multiple farms that publish & consume services between them. That way you can roll-out your patches in stages to relieve the pressure a bit.

Not to mention, for backend patching you can build a failover farm to cover while your normal service farm is patching – switching between the two is a simple PowerShell script.

The point here is we don't need to patch all SharePoint servers in order to be running a supported configuration; we can segment up the patches if we want, thus reducing the downtime.

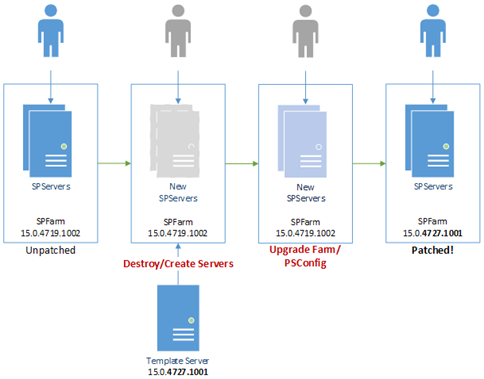

Template SharePoint Server Virtual Machine

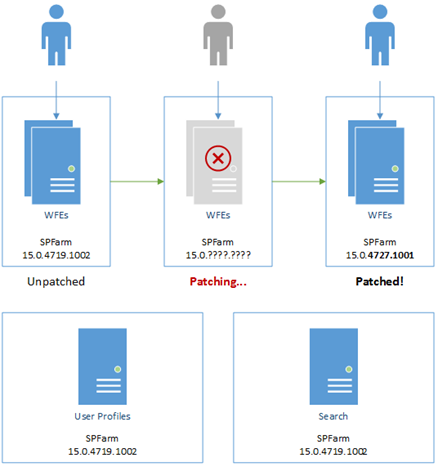

Patching a joined SharePoint server can take ages if services are running, especially if you have to update server-by-server, which is how it's normally done.

Solution: destroy & recreate the SharePoint servers from an updated template image.

This is quite the nuclear option and it's not possible with anything search-service related but it can save a lot of time. The idea is you have a sys-prepped template virtual-machine that you use to create your SharePoint servers from. When a new patch is released, you fire up the template, patch it, and then sys-prep it again. The existing servers are then disconnected & destroyed, with new servers created from the server image & connected to the farm.

At some point the farm will need upgrading but you've basically skipped over the binary installation pain – patching a single disconnected SharePoint server is much lighter work than patching each & every active member of a SharePoint farm.

Again, don't try with search-servers as reconfiguring a search service application without the original search admin component/server is just not something I would recommend, or am even sure is possible for that matter.

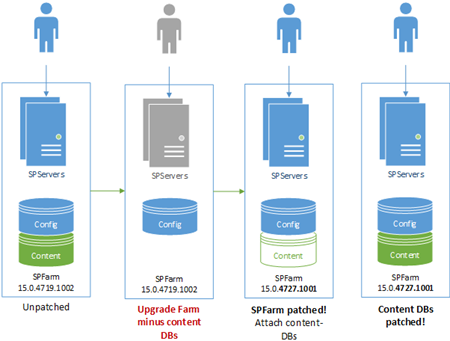

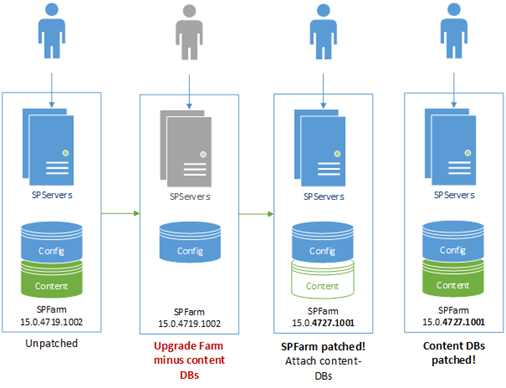

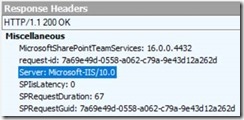

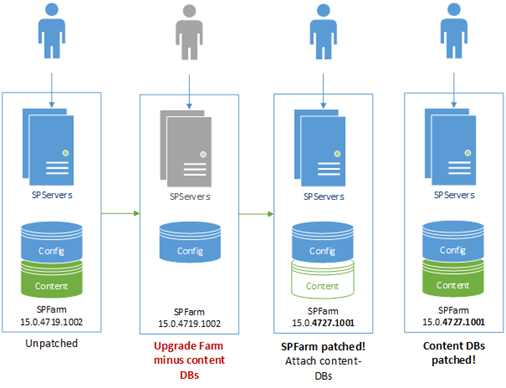

Disconnecting Content Databases Pre-Upgrade

Content-databases can take a while to upgrade, which PSConfig will try and do while upgrading everything else. The problem is that PSConfig will drop the entire upgrade like a hot-potato if something critical goes wrong with a content DB upgrade, including all the other upgrade tasks that aren't content-database related. This leaves you with a half-upgraded farm, basically in no-man's-land in terms of upgrade state.

Solution: before any upgrade, disconnect any content databases from the SharePoint web applications so PSConfig just focuses on all the other upgrade tasks, to at least have the farm upgraded ASAP. Once done, reattach the content databases and complete the upgrade with Upgrade-SPContentDatabase.

Again, the point is to better stage the upgrade; get the core farm upgraded first while running content-databases in compatibility mode, then finish the content databases once everything's stable.

Important: you will need to complete the upgrade on the content-databases to be in a supported configuration; this is just a way of getting the base infrastructure upgraded 1st & foremost, and as quickly as possible.
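To show the ordering of that staged approach, here's a small sketch that just builds the list of commands to run, in order, for a set of content databases. The database names and URL are hypothetical, it assumes all the databases belong to one web application (a real farm would need to record which database goes with which web-app before detaching), and it only prints text; nothing is executed.

```python
def staged_upgrade_plan(content_dbs: list[str], web_app_url: str) -> list[str]:
    """Return the commands for a staged upgrade, in the order they should be run."""
    detach = [f'Dismount-SPContentDatabase -Identity "{db}"' for db in content_dbs]
    farm = ["PSConfig.exe -cmd upgrade -inplace b2b -wait"]  # core farm upgrade only
    attach = [
        f'Mount-SPContentDatabase -Name "{db}" -WebApplication "{web_app_url}"'
        for db in content_dbs
    ]
    finish = [f'Upgrade-SPContentDatabase -Identity "{db}"' for db in content_dbs]
    return detach + farm + attach + finish


# Hypothetical database names and web application URL.
for step in staged_upgrade_plan(["WSS_Content_1", "WSS_Content_2"], "https://sharepoint"):
    print(step)
```

The design point is simply that the PSConfig step sits between the detach and reattach steps, so a content database problem can't take the farm upgrade down with it.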

Wrap-Up

That's it – I hope this helps smooth-over patch-time for any admins looking for a better way of installing SharePoint patches.

Cheers,

// Sam Betts

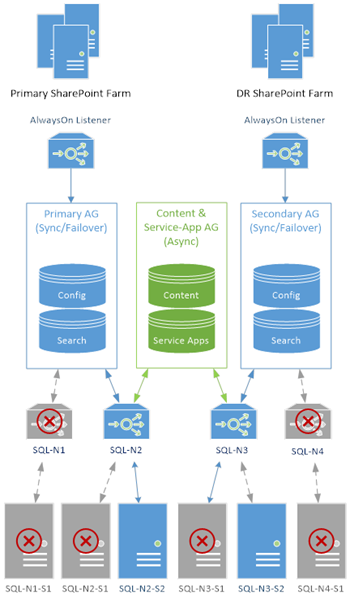

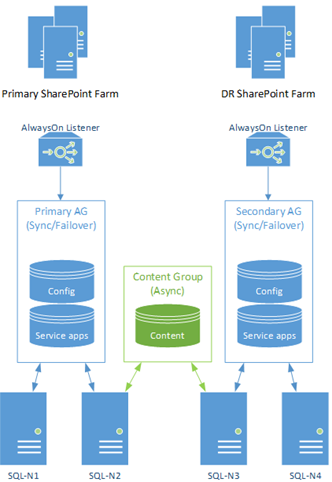

Super SQL Server Clusters for SharePoint - Part 1

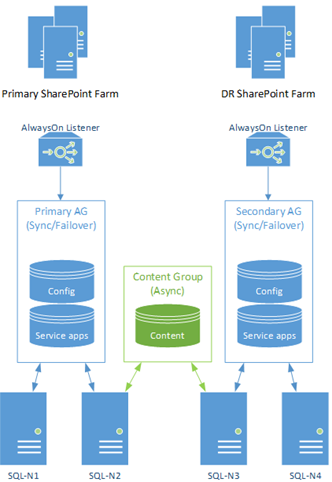

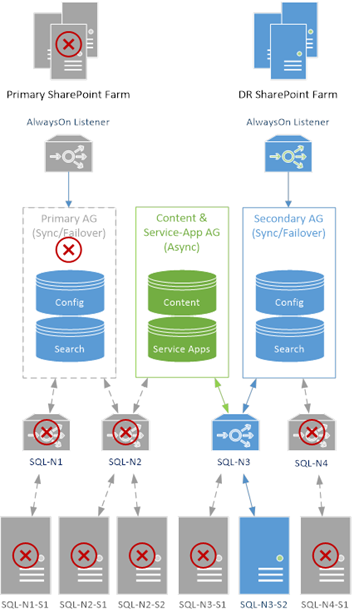

When I demonstrated AlwaysOn for SharePoint Disaster Recovery setups a while ago, the SQL cluster model I set up wasn't, strictly speaking, highly-available. This is because there's no failover for the SQL Servers synchronising data between both SharePoint farms.

A couple of people have pointed this out now and as this is technically a potential fault, I wanted to expand on the previous design by turning the cluster design into a "super-cluster" (my own term). I wanted to build a SQL Server architecture to cover all the potential high-availability holes for SharePoint & the SQL backend. That and, well, any excuse to fire up some epic architectures is fine by me!

Before we begin, if this is your first time looking at AlwaysOn for SharePoint, you'd best read this quick overview first.

This is a two-part series just because of how big this setup guide is. We're going to mount the backend for two SharePoint farms, with part one being the core cluster setup. Part two of the setup where we mount the SharePoint databases & availability-groups is here.

We're going to setup said "super-cluster"; a cluster of clusters to some extent to serve two active/passive SharePoint farms just so we have God-like uptime & availability for SharePoint.

More specifically though, we have two goals for our super-cluster-architecture:

- The most highly-available uptime for SQL Server known to mankind.

- On the back of that, the most reliable content synchronising between our two SharePoint farms, also known to man.

This will be a complex setup, but still, it's all so our users/bosses don't complain SharePoint has gone down. If said bosses don't want to commit to building this out then any failures aren't a technology issue, but rather a financial one.

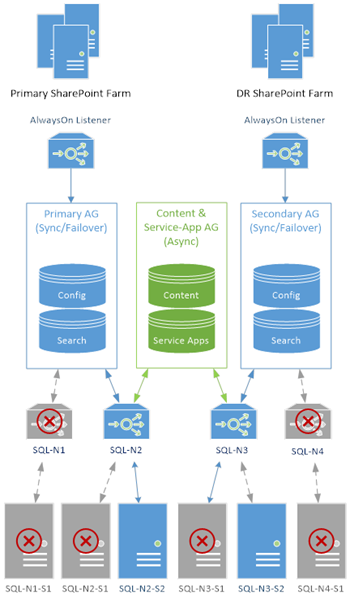

The Original SharePoint DR Architecture

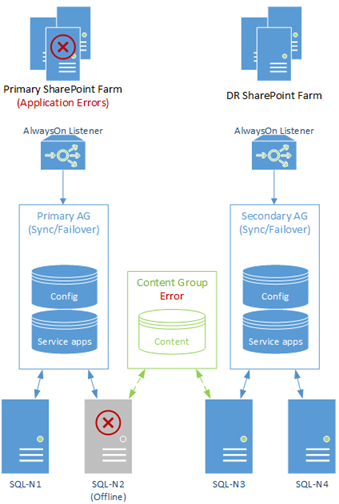

Here's our original SharePoint farms + SQL backend diagram:

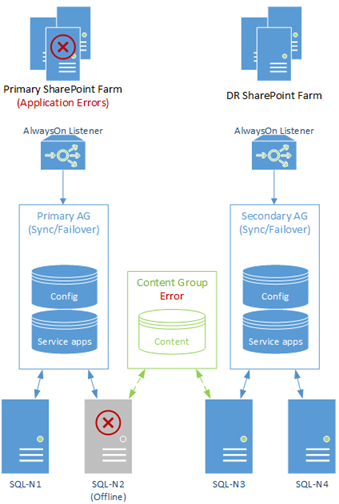

As pointed out, this design isn't actually as bullet-proof as it could be simply because a single SQL Server outage could bring down the primary SharePoint farm, unless everything was failed-over to the DR side, like so:

The same problem would be true if "SQL-N3" would go offline for any reason (patching for example); our AlwaysOn group for content will die and data synchronisation would temporarily stop, even if users won't necessarily notice. This cannot be!

Even though we do still have high-availability in that we have failover options, it would be nice to improve uptime even further by making sure our single server ("SQL-N2") isn't a point of failure for the main farm.

Side note: In fairness, AlwaysOn is pretty hot at resuming synchronisation when problems occur and service is resumed, but we want to make sure that isn't necessary. In the case of "SQL-N2" going offline, it's actually fairly serious because unless we fail-over to the other farm entirely we're going to have fatal SQL exceptions.

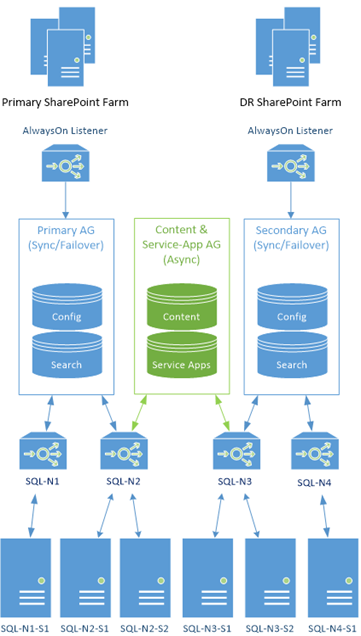

The Solution: An AlwaysOn Cluster of Failover Clusters

In the above diagrams each server is just a standalone server in an AlwaysOn cluster. Back in the day, the only clustering in SQL Server was "failover" clustering which is somewhat different, but still available now.

The solution to fill this high-availability gap is to make all the servers "failover clusters", with "SQL-N2" and "SQL-N3" specifically being failover clusters with multiple members instead of single servers. The other servers will also be failover instances, as AlwaysOn clusters can't mix & match standalone & failover instances, but they'll be failover instances of just one member. You could add more servers, but for now at least there's no need.

Background: Failover Clustering

First though, a quick bit of history. Failover clustering is basically clustering a single SQL Server service instance, while sharing data between the cluster members. Basically we turn a standalone machine into several, with only one server pretending to be the clustered server at any one time. If the active server goes offline (or we failover manually), the next failover server starts the SQL Server service and pretends to be the clustered server instead, using the same data files the previous server had.

Failover clustering is basically one service instance with multiple possible members. AlwaysOn is basically multiple service instances all together. The two work together quite nicely.

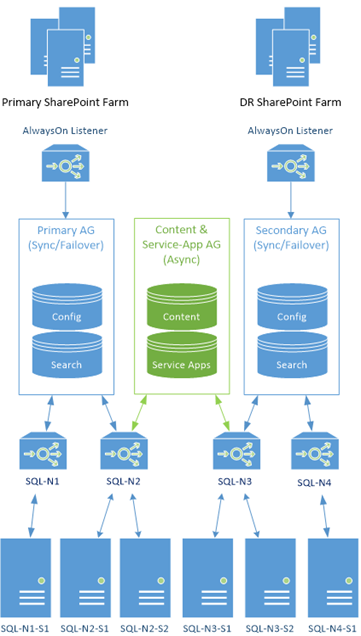

This is the new monster we're going to create:

Yes indeed; this setup should satisfy even the most hardcore enterprise architects. We replace all the standalone instances with failover instances (albeit some instances with only a single member, for now); high-availability & maximum uptime in bag-loads. It's quite a thing of beauty, if you like this sort of thing.

Creating a Super Cluster

So just for the lulz, I'll show you how to create said super-cluster from scratch. If you had the previous model already you can just edit what you've got, but I'm doing it from zero just so it's clear.

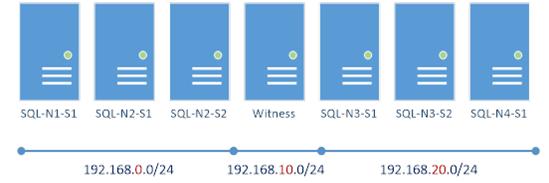

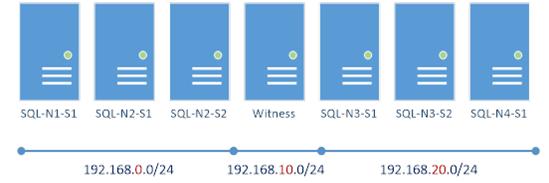

For this experiment, seeing as we're in "enterprise: expert" mode, I created 6 SQL machines + 1 witness machine over 3 subnets – 3 machines are in one subnet, 3 machines are in another with the witness in the 3rd subnet. Like this:

This gives a proper quorum, and one that's designed to handle network failures correctly too.
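The arithmetic behind that layout is worth spelling out. Here's a minimal sketch, assuming a plain majority-of-votes model where each of the 6 SQL machines and the witness gets one vote (real clusters can adjust votes dynamically, which this ignores). The subnet labels are just placeholders.

```python
def majority(total_votes: int) -> int:
    """Smallest number of votes that is more than half of the total."""
    return total_votes // 2 + 1


def survives_loss(votes_by_site: dict[str, int], lost_site: str) -> bool:
    """True if the remaining sites still hold a majority after one site drops out."""
    total = sum(votes_by_site.values())
    remaining = total - votes_by_site[lost_site]
    return remaining >= majority(total)


# 3 SQL machines per subnet plus a witness in a third subnet: 7 votes in total.
votes = {"subnet-1": 3, "subnet-2": 3, "witness-subnet": 1}
for site in votes:
    print(site, survives_loss(votes, site))

# Without the witness, losing either subnet leaves 3 of 6 votes, which is not a majority.
print(survives_loss({"subnet-1": 3, "subnet-2": 3}, "subnet-1"))
```

With the witness in its own subnet, losing any one subnet still leaves at least 4 of 7 votes, which is the whole reason for the third subnet.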

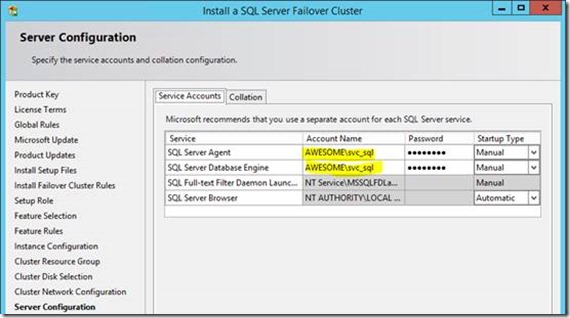

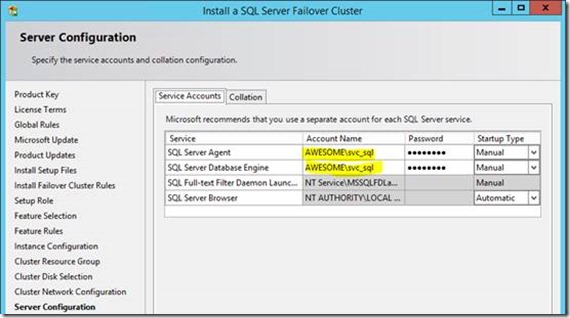

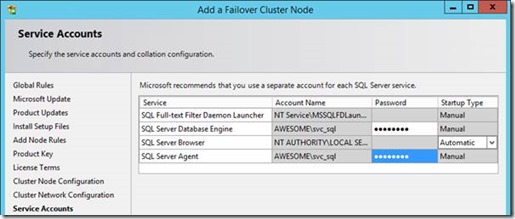

For the SQL Service, we need a network account too – "awesome\svc_sql" in my case.

The failover machines also need some kind of shared storage between them. Ideally this should be shared SCSI disks, but in SQL Server 2012 and onwards you can use just a network path (which is much slower, so not recommended). My test lab has some limits, so I'm just using a network path for this, but be aware it's really not that suitable for production setups normally.

Create Windows Cluster Host

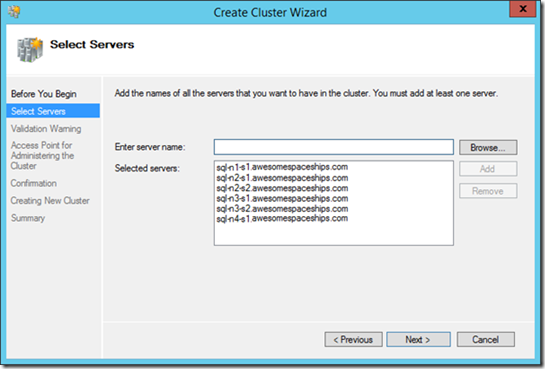

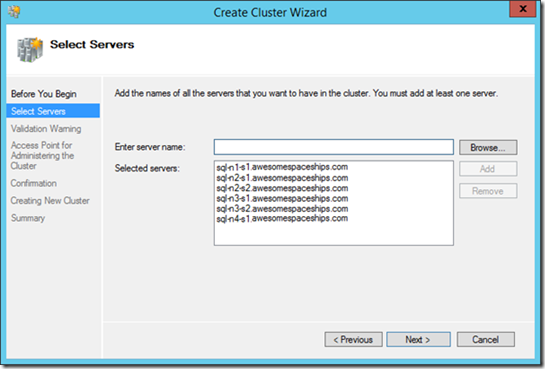

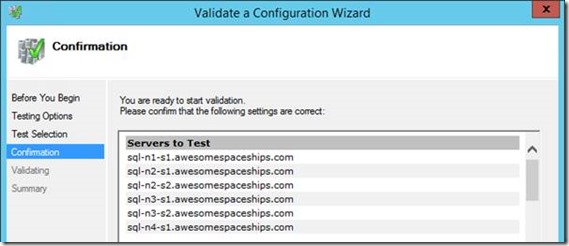

On all 6 SQL Servers we need the failover clustering Windows Server feature installed, and then each one added to the parent host cluster. From one of the SQL Servers create a new cluster:

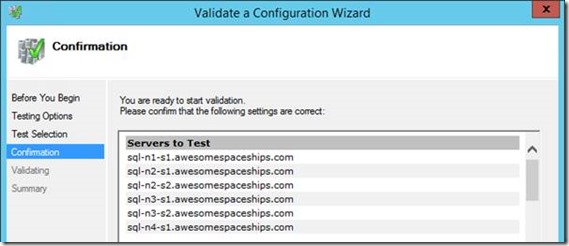

Next we need to validate the cluster. Normally for AlwaysOn this isn't mandatory but for failover clustering, unless there's a good validation report the setup won't continue. Either way, you won't get any support from Microsoft unless all tests are run (and passed), so run the validation tests.

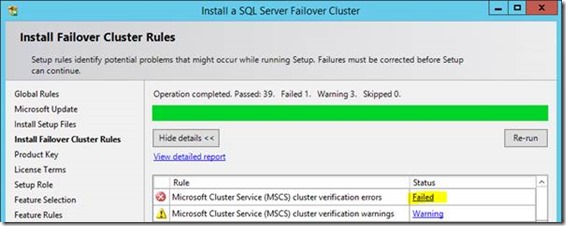

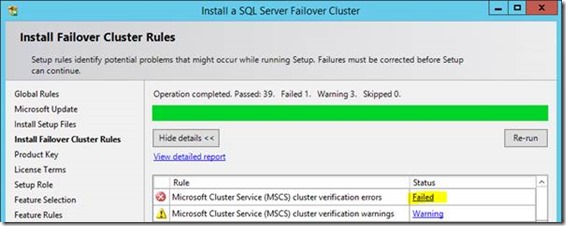

If you don't validate, or have critical errors, SQL Server won't set up as a cluster:

It's fairly normal to have some warnings at least; proper clustering is an art-form but this is my dodgy test environment so, well, who cares. For production environments you should actually care.

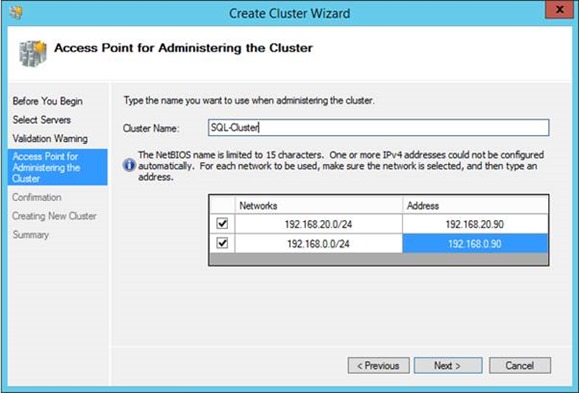

Once done we should be able to create our cluster-host. This is just the host object for the entire cluster super-set. Clustered SQL Services will get added to it later on...

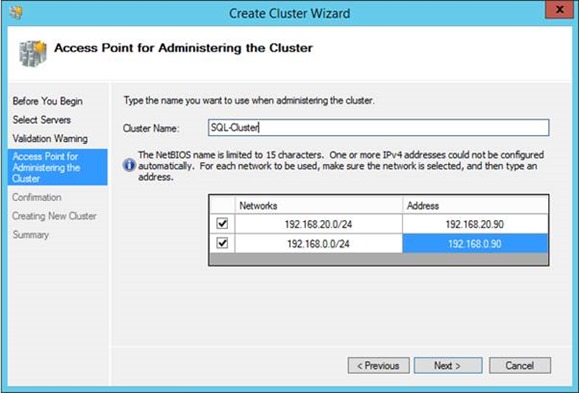

Finish the wizard, and now we can give the cluster host a name.

As this is a multi-subnet cluster, we'll need to give an IP address in each subnet as servers in either subnet could host the cluster-name-object in theory.

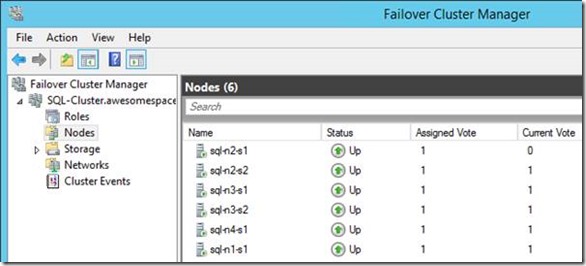

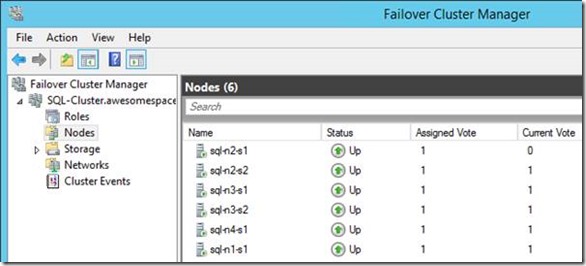

Now our cluster should show these nodes:

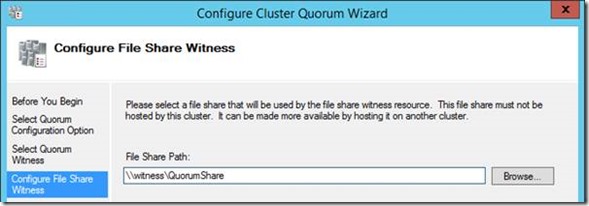

Now we'll need to add a quorum witness to the cluster so we can make sure we failover correctly. We're going to use the file-share on the witness machine on the 3rd subnet. Right-click on the cluster root, "more actions" and "configure cluster quorum settings".

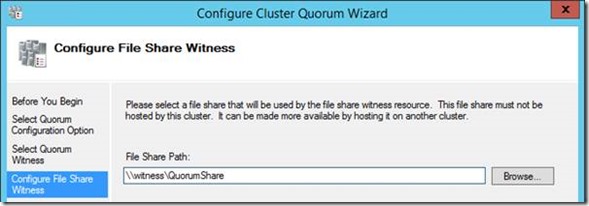

Select "file share witness"; put the file-share path in:

Confirm, and that's all the configuring you should do in the Cluster Manager now. Everything else, SQL Server will configure.

Install SQL Server on SQL Machines

Next step is to get SQL Server on each node. In AlwaysOn we can't mix standalone & failover clusters so we're going to add all 4 failover clusters, 2 of which have 2 members & the other 2 are just single-member clusters. That should be enough to demonstrate how this will work, and you can always add more member servers later.

Create New SQL Server Failover Clusters

We need to create 4 new failover clusters here, with SQL-N1 & SQL-N4 (our "standalone" machines) each being a cluster of just one member.

I'm not going to go into detail about the process, as this technology is nearly as old as SQL Server, but here are some details.

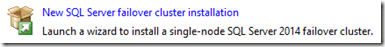

Installing a failover cluster isn't like installing normal SQL; there's a separate install method for failover clusters:

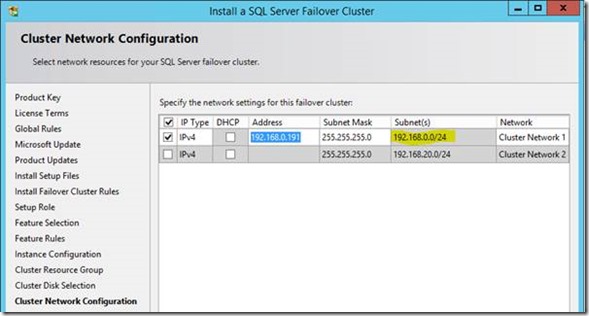

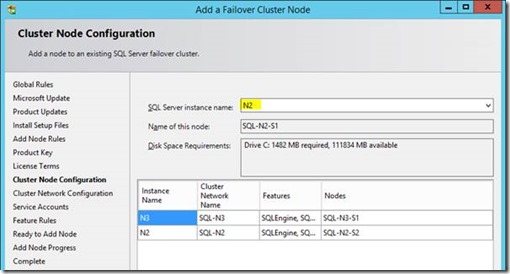

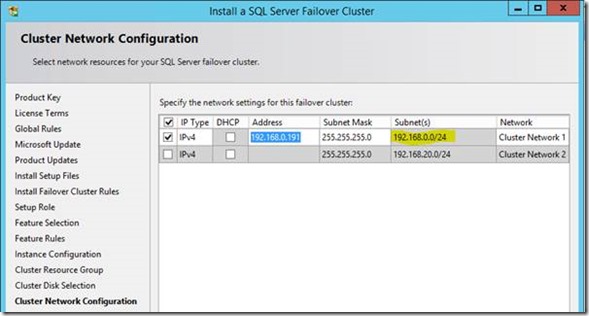

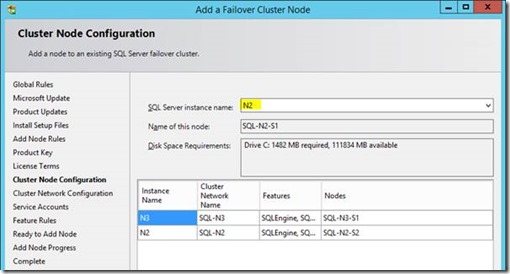

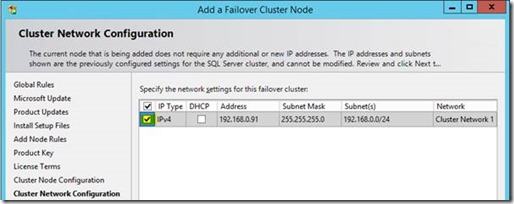

Run that and get started. This I did on "SQL-N2-S1" and "SQL-N3-S1" to create two new failover clusters. Most of the wizard is pretty straightforward, until the networking configuration:

Here I can only put an IP address in the top box because right now the cluster only has one member (this machine), hence multi-subnet configuration isn't available yet. We're not planning on making these roles multi-subnet however.

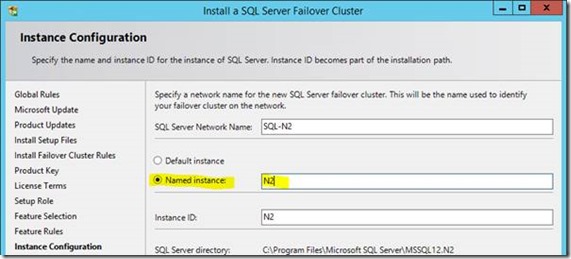

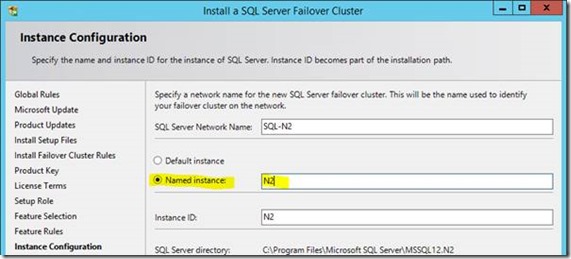

Another important detail: per parent cluster host, each service instance name has to be unique for failover instances at least. That means our failover clusters will technically be:

- SQL-N1\N1

- SQL-N2\N2

- SQL-N3\N3

- SQL-N4\N4

This is because we can't have any two cluster instances as the default "MSSQLSERVER" (or just "SQL-N2" & "SQL-N3"). The instance name uniqueness seems a bit redundant but basically it's because the service-names in the cluster have to be unique as well as the clustered object names (the virtual "server" name).
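A quick way to sanity-check a naming plan against that rule before running any installers: pull the instance part out of each name and look for repeats. This is only a sketch; treating a name with no backslash as the default "MSSQLSERVER" instance follows the explanation above, and comparing names case-insensitively is my assumption.

```python
from collections import Counter


def duplicate_instance_names(clustered_instances: list[str]) -> list[str]:
    """Return instance names used by more than one failover cluster instance."""
    names = []
    for full_name in clustered_instances:
        if "\\" in full_name:
            names.append(full_name.split("\\", 1)[1].upper())
        else:
            names.append("MSSQLSERVER")  # no backslash means the default instance
    counts = Counter(names)
    return sorted(name for name, count in counts.items() if count > 1)


planned = [r"SQL-N1\N1", r"SQL-N2\N2", r"SQL-N3\N3", r"SQL-N4\N4"]
print(duplicate_instance_names(planned))                # no clashes
print(duplicate_instance_names(["SQL-N2", "SQL-N3"]))   # both would be the default instance
```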

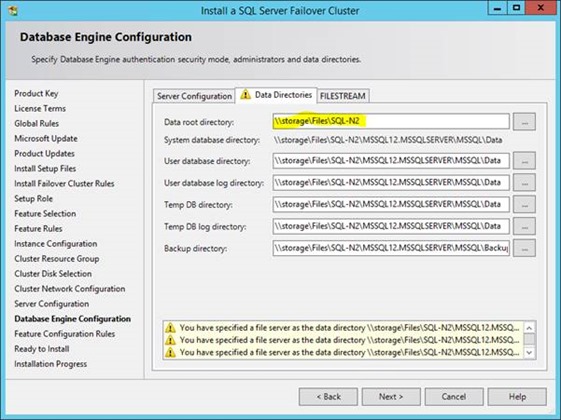

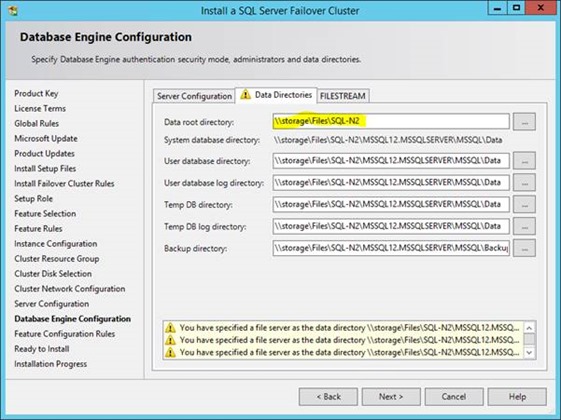

Also on storage for the database instance I'm using a network path because this is just a test environment so I don't have the cash for proper SCSI discs:

This normally is a terrible idea though, just for performance reasons if nothing else – a network location, even with decent infrastructure, is many times slower than proper SCSI disks.

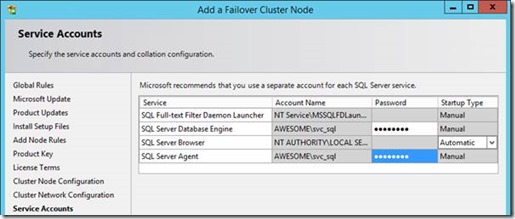

For the service account I'm still using "awesome\svc_sql" of course.

Create new failover clusters on machines SQL-N1, SQL-N2-S1, SQL-N3-S1, and SQL-N4; when done you should have four failover clusters of one server only each. We're going to add the other servers in a minute.

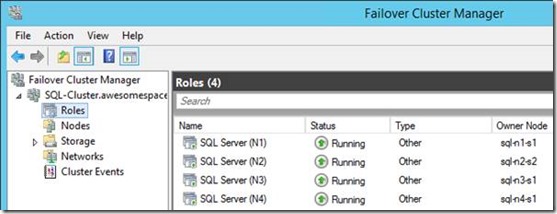

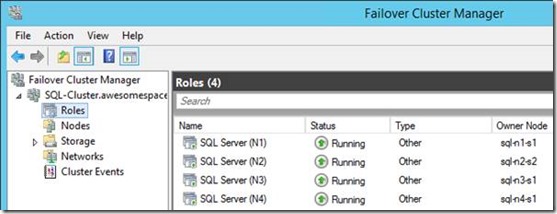

Once you're done, you should see the roles configured in the Cluster Manager:

Remember folks: don't even touch anything here unless you know what you're doing!

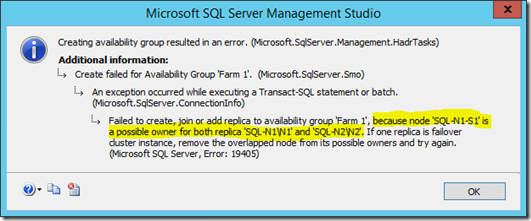

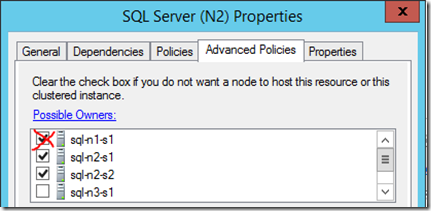

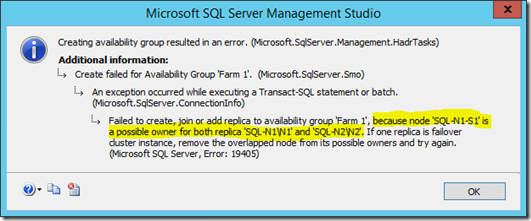

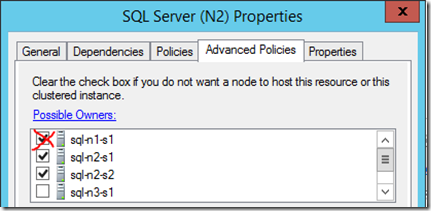

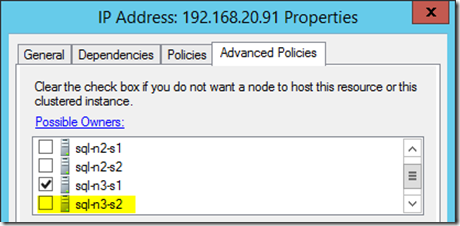

We need to check that clustered roles can only be taken ownership of by servers that should run the role. This isn't just good practice; SQL Server will fail when mounting the AlwaysOn availability group if this isn't done right:

Anyway, in each clustered role, just uncheck any server that will never be able to run the role on the SQL Service so there's no overlap:

In my case the service was set to allow SQL-N1-S1 too, even though it never could because it wasn't a member of that failover cluster.

Seriously, get this bit right because SQL Server can really get its knickers in a twist if it gets halfway through creating an AlwaysOn availability group later & fails because these possible owners aren't right – make sure each service can only be failed over to the servers which are assigned to the instance.
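If you have a lot of roles to check, the comparison is easy to reason about as plain set arithmetic. The sketch below is hypothetical (the function name and the two input dictionaries are my own invention, and you'd have to fill them in by hand or from your own inventory); it just lists which servers should be unticked as possible owners.

```python
def stray_possible_owners(instance_members, possible_owners):
    """Return role -> servers ticked as possible owners that cannot run the role.

    instance_members: role -> servers that actually have the instance installed
    possible_owners:  role -> servers currently allowed to own the role
    """
    stray = {}
    for role, owners in possible_owners.items():
        extra = set(owners) - set(instance_members.get(role, ()))
        if extra:
            stray[role] = sorted(extra)
    return stray


members = {"SQL-N2": {"SQL-N2-S1", "SQL-N2-S2"}}
# SQL-N1-S1 is ticked as an owner even though it isn't part of this instance.
ticked = {"SQL-N2": {"SQL-N2-S1", "SQL-N2-S2", "SQL-N1-S1"}}
print(stray_possible_owners(members, ticked))
```

Anything it returns is a server to uncheck for that role.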

Add SQL Server Failover Members

Now we have our failover clusters we need to add extra nodes to them so they can actually failover to something, for two of them at least. You could add failover members to all 4 technically, but as pointed out earlier, our high-availability vulnerable points are only really the SQL instances making up the availability group for content synchronisation, so here we're only creating two "true" failover clusters. The others are single-member clusters because in AlwaysOn we can't mix standalone & failover cluster instances into the same AlwaysOn availability group.

Anyway, to add a server to an existing failover cluster, we launch another type of installation:

This time there's not so much to configure as it's already been done; just what instance you want to join:

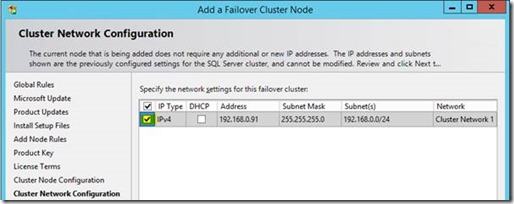

…and just confirming the IP address. In our case we're not setting up a multi-subnet failover instance, so we don't have to do anything except join the existing configured clustered IP.

The same goes for the service-account; we can't choose it as it's been set already, so just enter the password for this cluster node to save.

That's pretty much it; the rest of the wizard will just join the server & install the service-instance.

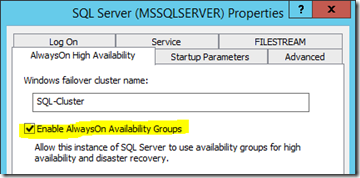

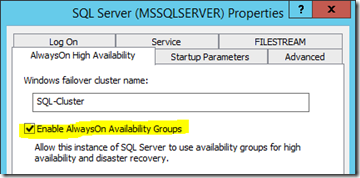

Enable AlwaysOn on SQL Services

You'll need to check this is enabled on each primary or standalone server, in the SQL Server Configuration Manager:

Once you restart the service, that instance will be enabled for AlwaysOn.

Testing Failover Clusters

At this point we should have all our failover cluster resources ready, minus the AlwaysOn bits. Now's as good a moment as any to test that the failover clusters actually fail over OK. It's somewhat important to know our failover clusters can, in fact, fail over.

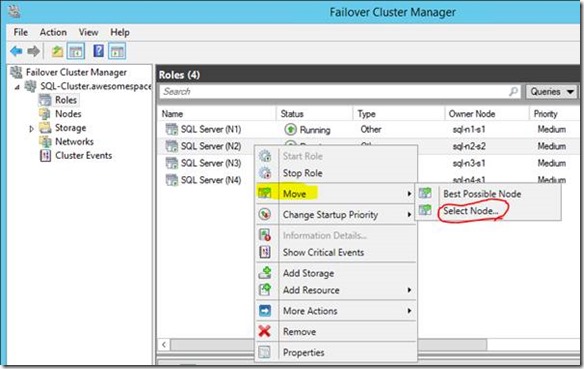

For failover clustering, failovers are transparent to SQL Server as opposed to AlwaysOn which drives the process from SQL Server itself (for the most part). Anyway, for failover clustering we use the Cluster Manager/PowerShell to drive events, rather than SQL Server itself.

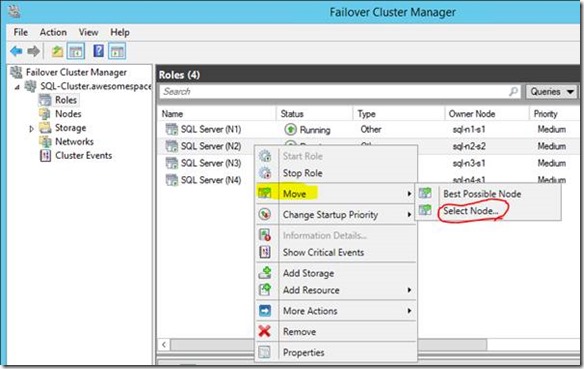

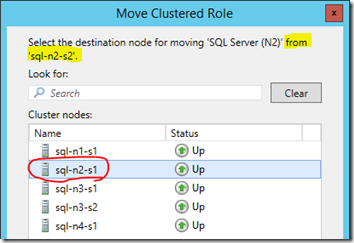

Let's check we can move each role to the other server. Open the Cluster Manager and move each one like so:

Normally we can just say move to "best possible node" which will use the possible owner configuration in the cluster to figure out which server could take the role, but we'll do it manually…

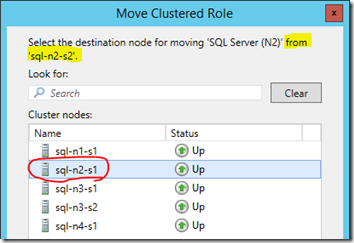

This lets you select any node, but only nodes which have the service will actually work for the move command – trying to move a role to a server that isn't allowed/configured for the role will give an error and not actually do anything.
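To make the two behaviours concrete, here's a toy Python model of a manual move versus a "best possible node" move. It's only a sketch of the behaviour described above: the names `move_role`, `best_possible_node` and `MoveRefused` are invented, and a real cluster weighs up more than this (preferred owners, for instance).

```python
class MoveRefused(Exception):
    """Raised when a role cannot be moved to the requested node."""


def move_role(role, target, possible_owners, online_nodes):
    """Manual move: refuse unless the target is allowed and online."""
    if target not in possible_owners[role]:
        raise MoveRefused(f"{target} is not a possible owner of {role}")
    if target not in online_nodes:
        raise MoveRefused(f"{target} is offline")
    return target


def best_possible_node(role, current_owner, possible_owners, online_nodes):
    """Pick the first allowed, online node other than the current owner."""
    for node in possible_owners[role]:
        if node != current_owner and node in online_nodes:
            return node
    return None


owners = {"SQL-N2": ["SQL-N2-S1", "SQL-N2-S2"]}
online = {"SQL-N1", "SQL-N2-S1", "SQL-N2-S2"}
print(best_possible_node("SQL-N2", "SQL-N2-S1", owners, online))
print(move_role("SQL-N2", "SQL-N2-S2", owners, online))
```

Asking `move_role` for a node that isn't in the role's owner list raises `MoveRefused` and leaves the owner unchanged, which mirrors the error-and-do-nothing behaviour of the real thing.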

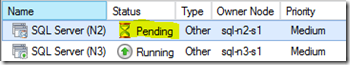

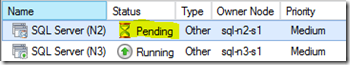

Assuming you pick an appropriate server though, once you pick the other server you'll then see the cluster manager move everything there:

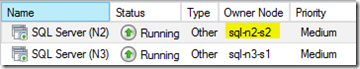

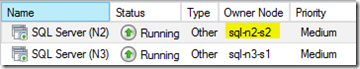

…and hopefully, if everything went well we'll have a new owner for the clustered resource (SQL):

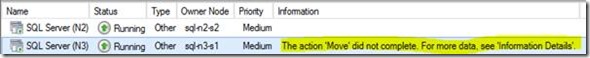

If there are any errors here, you'll need to figure out why the other SQL node didn't come up – application logs are a good start if the problem is the service. Sometimes though the problem can be cluster configuration (see below).

Do the same test for the other failover role.

Oops. Failover Cluster Fails to Actually Failover!

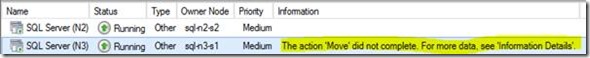

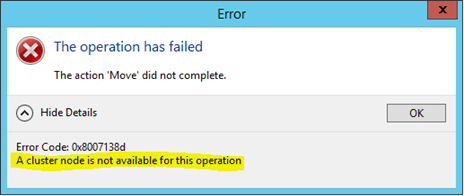

Testing your failover clusters can actually make the leap from one server to the other is kinda critical for obvious reasons. But if your test fails, this is what it'll likely look like…

Clicking the "more information" menu shows us this:

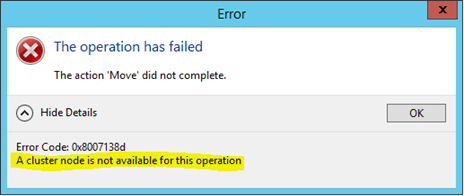

So "sql-n3-s2" couldn't accept ownership of the role for some reason or other. After poking around, it turns out the clustered IP address was misconfigured for some reason:

We've basically told the cluster that this IP address, which is a dependency for the failover role, can only be owned by one machine. Not much failover capacity here then, which is why it didn't failover.
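Put another way, a role can only land on a node that every one of its resources accepts. A minimal sketch of that reasoning, with an invented function name and hand-typed owner lists rather than anything read from a live cluster:

```python
def nodes_able_to_host(resource_owners):
    """Return the nodes that every resource in a role allows as owner.

    resource_owners: resource name -> set of possible owner nodes
    """
    owner_sets = [set(owners) for owners in resource_owners.values()]
    if not owner_sets:
        return set()
    return set.intersection(*owner_sets)


sql_n3_role = {
    "SQL Server": {"sql-n3-s1", "sql-n3-s2"},
    # The clustered IP only allows one machine, so it pins the whole role.
    "IP Address": {"sql-n3-s1"},
}
print(nodes_able_to_host(sql_n3_role))
```

With the IP address restricted to one machine, the intersection is a single node, so there's nowhere to fail over to.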

On investigating why in this example (this was a real slip-up on my part, not a faked problem) it turned out I'd forgotten to add SQL-N3-S2 to the failover cluster in the SQL Server Setup, so at the moment of testing "SQL-N3" really only had one possible server. What a n00b!

Anyway; adding this server fixed it, and it nicely showed the value of testing your failover clusters ahead of time.

Now we should have a super-cluster ready for SharePoint databases! See part 2 for how.

Super SQL Server Clusters for SharePoint - Part 2

This is part two of how to set up the mother of all database clustering solutions for SharePoint. In part one we set up the failover cluster, installed SQL Server and configured the failover clusters.

Previously we'd setup our super-cluster & installed SQL Server, this time with two failover clusters; one for each farm.

Now we need to setup or create our SharePoint databases for both the primary & secondary farms.

Create SharePoint Databases for Each Farm

At this point we should have all of our SQL instances ready to go. Next we need to add the databases so we can setup AlwaysOn for those databases.

Using client-side SQL aliases, this is what we want to build first:

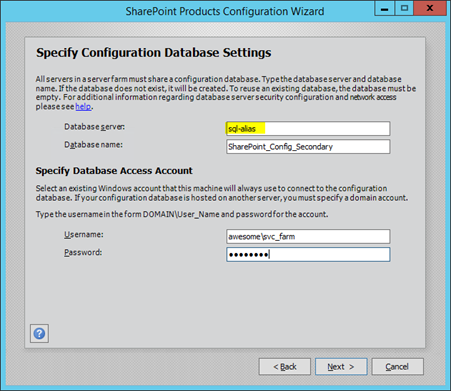

Both farms have separate configuration & some service apps where it makes sense (seriously, this is important). For anything we're going to copy between farms like content-databases for example, we'll just create/move the databases onto the primary farm.

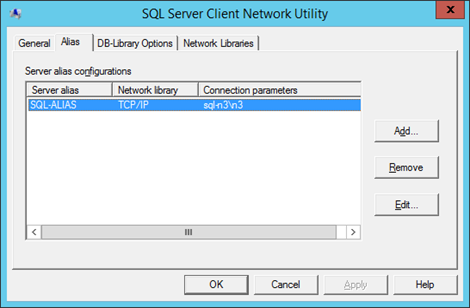

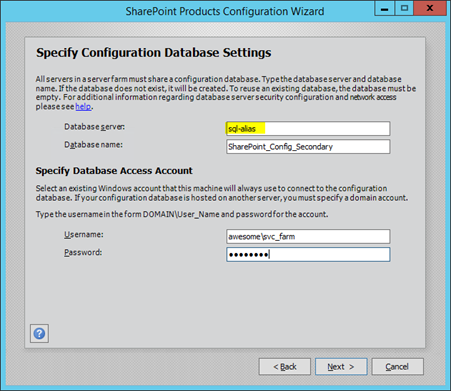

First create/edit a SQL alias on each SharePoint server:

Create new farms; one on each failover cluster for each farm, using said alias:

…or use PowerShell if you prefer.

You could just as easily move databases if you already have SharePoint farm(s) set up; the principle is the same – we want two farms working, each one on a separate failover cluster.

Create Service-Apps

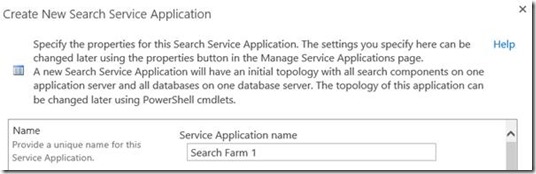

Once we have two farms setup & ready we need to create the service-apps next.

Create & configure your service-applications as needed on the primary farm. Leave the secondary farm as we'll use copies of everything from the primary farm, with one exception…

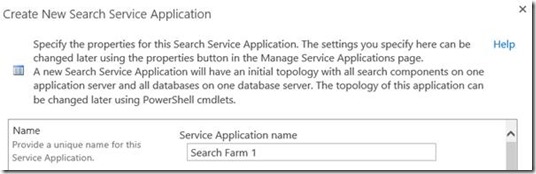

Each farm should have its own separate search application + databases.

Search isn't something that you want to copy between farms as we want farm redundancy on this huge chunk of SharePoint, so we'll just fail it over to the local farm SQL instances.

Create Content Databases & Web-Applications

Also as before, create a web-app on the primary farm only with either new or restored content databases.

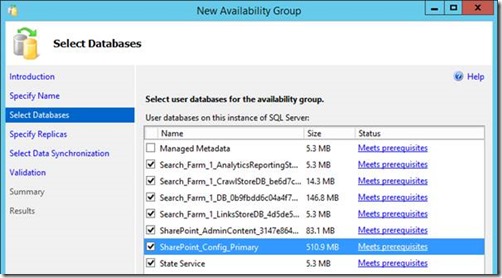

Create AlwaysOn Availability Groups for SharePoint Farms

At this point we should have all of our SQL instances & databases ready to go for both SharePoint farms.

Now we have to create the availability-groups for them – we want the following SQL Server AlwaysOn availability groups:

- Farm-local failover groups.

    - All the databases that won't ever move between farms, one group per farm.

- Service application availability group.

    - All service-app data that both farms will share, copied between the two farms.

- Content database availability group.

    - All the content that both farms will share, copied between the two farms.

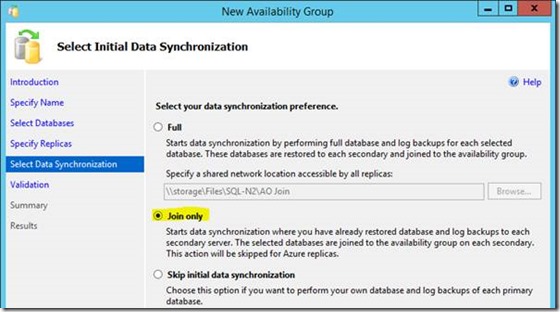

Now, it should be pointed out that creating AlwaysOn availability groups is much (so much) easier when the database file paths are exactly the same across all instances. If this is the case, you can perform an initial "full" sync via a shared network path to backup & restore the databases + transaction-logs.

Otherwise you need to do the DB + transaction-log restores manually & just have SQL Server join the databases. This my friends, is an epic amount of work if you have to do it by hand.
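A quick way to know which route you're on before opening the wizard is to compare the data and log directories of each instance. The sketch below is illustrative only: the function name and the example directories are assumptions, and it simply returns the name of the sync option you'd likely end up picking.

```python
import ntpath


def _normalise(path):
    # Windows paths: ignore case, slash direction and trailing separators.
    return ntpath.normcase(ntpath.normpath(path))


def initial_sync_option(file_dirs_by_instance):
    """file_dirs_by_instance: instance name -> (data_dir, log_dir)."""
    layouts = {
        (_normalise(data_dir), _normalise(log_dir))
        for data_dir, log_dir in file_dirs_by_instance.values()
    }
    # Identical layout everywhere means the wizard can do the restores itself.
    return "Full" if len(layouts) == 1 else "Join only"


same = {
    "SQL-N1": (r"D:\SQL\Data", r"D:\SQL\Logs"),
    "SQL-N2": ("d:/sql/data/", r"D:\SQL\Logs"),
}
different = {
    "SQL-N1": (r"D:\SQL\Data", r"D:\SQL\Logs"),
    "SQL-N2": (r"E:\Data", r"E:\Logs"),
}
print(initial_sync_option(same))
print(initial_sync_option(different))
```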

Farm + Search Availability Groups

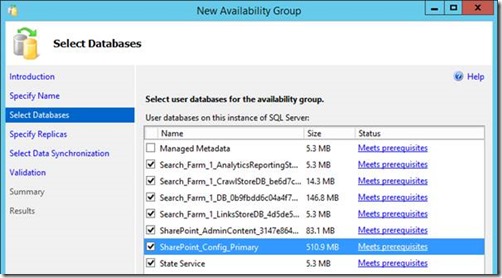

We need two farm AlwaysOn availability groups; one per farm so we have farm redundancy. That means we'll have two copies of each farm database & two SQL instances we can use, with at least one instance with two member servers.

We want the config DB, central admin content + the search databases in this group. All need to be in "full recovery" mode, so just make a backup of each database and they should be ready to add. Add the state service DB too if you have one.

We don't care too much about the usage database because it can be very heavy on updates, and isn't critical.

Here we're including the state service in the local farm group, meaning it won't be synced between farms. This is because I don't have any SharePoint 2010 style workflows, so it doesn't need to be synced, but your requirements might differ (read: if you have workflows, don't include the State Service DB in the local farm group – sync it with the service-app DBs).
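The "full recovery plus a backup" prerequisite mentioned above is the same two T-SQL statements per database, so it's handy to generate them. This is a sketch under assumptions: the database names and the backup share are hypothetical, and the script only prints the statements for you to review and run yourself.

```python
def prepare_for_availability_group(database, backup_dir):
    """Build the T-SQL to put a database in full recovery and back it up."""
    name = database.replace("]", "]]")  # escape for [bracketed] identifiers
    path = (backup_dir.rstrip("\\") + "\\" + database + ".bak").replace("'", "''")
    return [
        f"ALTER DATABASE [{name}] SET RECOVERY FULL;",
        f"BACKUP DATABASE [{name}] TO DISK = N'{path}';",
    ]


# Hypothetical names; substitute your own farm databases and share.
for db in ["SP_Config", "SP_AdminContent", "SP_StateService"]:
    for statement in prepare_for_availability_group(db, r"\\fileserver\agsync"):
        print(statement)
```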

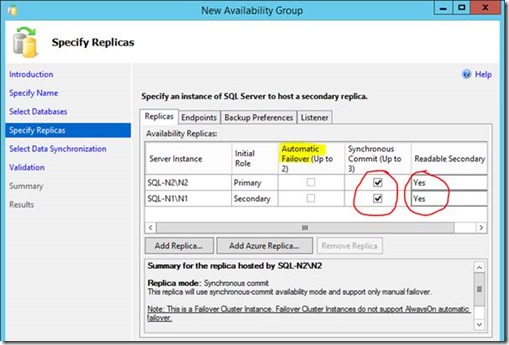

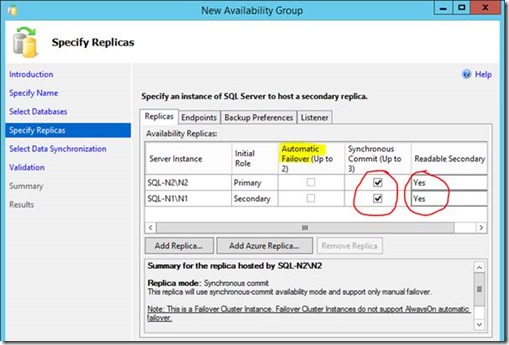

Next up; add the secondary replica for our local farm failover AG, which in farm 1's case is "SQL-N1".

Now we need to join the databases. In my specific setup we can't do a full sync here as both SQL-N1 & SQL-N2 use different paths for the database files (something you can technically avoid if you know ahead of time, but I forgot).

Anyway, as mentioned earlier, the alternative is the somewhat-more-hassle option of restoring each database with no recovery (important) on the secondary, so the wizard can just join each DB to the availability group instead of creating each one too.

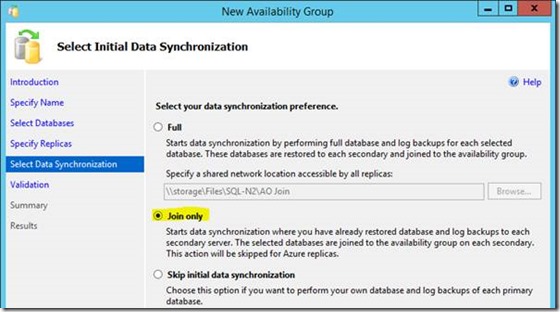

Join-only is a pain because you have to get the DBs in sync yourself, but nobody said this would be easy. Assuming all the databases to add are ready on the secondary instance (SQL-N1 in this example) and in "restoring" mode, this will work no problem.
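For the manual route, the restores on the secondary all follow one pattern: restore the full backup and then the log backup, both with NORECOVERY, relocating the files since the paths differ. Here's a hedged sketch that builds those statements; the backup locations, logical file names and target paths are placeholders I've made up, so check yours before running anything.

```python
def restore_for_join(database, full_backup, log_backup, moves):
    """Build T-SQL that leaves a database in 'restoring' mode on the secondary.

    moves: logical file name -> physical path to use on the secondary
    """
    def quote(text):
        return text.replace("'", "''")

    name = database.replace("]", "]]")
    move_clauses = "".join(
        f", MOVE N'{quote(logical)}' TO N'{quote(physical)}'"
        for logical, physical in moves.items()
    )
    return [
        f"RESTORE DATABASE [{name}] FROM DISK = N'{quote(full_backup)}' "
        f"WITH NORECOVERY{move_clauses};",
        f"RESTORE LOG [{name}] FROM DISK = N'{quote(log_backup)}' WITH NORECOVERY;",
    ]


statements = restore_for_join(
    "SP_Config",
    r"\\fileserver\agsync\SP_Config.bak",
    r"\\fileserver\agsync\SP_Config.trn",
    {"SP_Config": r"E:\Data\SP_Config.mdf", "SP_Config_log": r"F:\Logs\SP_Config_log.ldf"},
)
print("\n".join(statements))
```

Neither statement uses RECOVERY, which is the point: the database has to stay in "restoring" mode for the join to work.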

Create availability group "SPFarm1" between instances SQL-N1 & SQL-N2, and another availability group "SPFarm2" between SQL-N3 & SQL-N4.

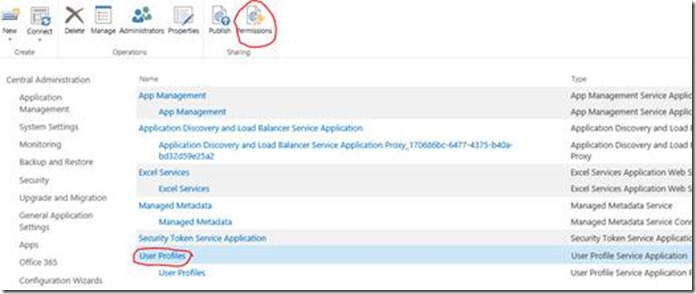

Service-Apps Availability Group

Next up, we want another separate availability group for service applications. On farm 1 create/mount the following service applications:

- Managed Metadata

- User profiles

- App Management

- Secure Store

- …

- Whatever service-apps you use that have databases that need syncing.

Once created & working, create a new availability group between SQL-N2 & SQL-N3 to synchronise the databases in those applications, except the sync DB for user profiles.

Once mounted, fail over the primary to SQL-N3 & in farm 2 add the service-applications using the existing databases set up in AlwaysOn.

More on sharing service-apps between farms @ https://blogs.msdn.microsoft.com/sambetts/2016/03/21/running-sharepoint-service-applications-in-read-only-mode-for-disaster-recovery-farms

Content Database Availability Group

Finally, do the same again for the content databases as you did for the service-apps: one new availability group between nodes SQL-N2 & SQL-N3, this time containing just the content databases.

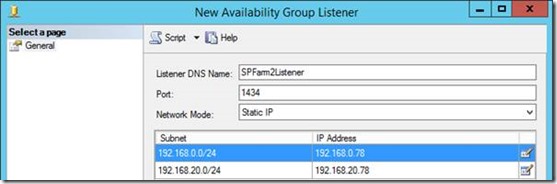

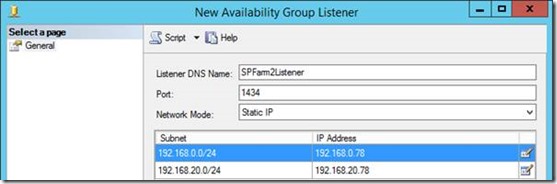

Create Listeners & Update SharePoint Aliases

Now we just need to add listeners for the SPFarm availability groups so SharePoint will failover between the farm instances transparently.

I'll add mine here; for some reason SQL doesn't let me create one unless all possible subnets are covered, even though for the farm-specific connections we're never going to cross a subnet.

Having a listener for each farm means farm 1, for example, should be able to use either the SQL-N1 or SQL-N2 instance; the change-over will happen transparently.

Even better, as SQL-N2 is a failover cluster of two members, SQL-N2 itself could even failover to either SQL-N2-S1 or SQL-N2-S2 and again, nothing would change. Two levels of failover transparency! My goodness.

Anyway, to seal the deal, update each SharePoint farm server to use either "SPFarm1Listener" or "SPFarm2Listener" as we did before.
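If you'd rather script the alias change than click through it on every SharePoint server, it helps to know what an alias actually is. To the best of my knowledge a TCP/IP alias is just a string value under the `ConnectTo` registry key, in the form `DBMSSOCN,<server>[,<port>]`; treat that, and the alias names below, as assumptions to verify on your own servers. The sketch only builds the values and doesn't touch the registry.

```python
# Key under HKEY_LOCAL_MACHINE where client aliases are assumed to live.
ALIAS_KEY = r"SOFTWARE\Microsoft\MSSQLServer\Client\ConnectTo"


def alias_value(target, port=None):
    """Build the registry data for a TCP/IP alias pointing at `target`."""
    value = f"DBMSSOCN,{target}"
    if port is not None:
        value += f",{port}"
    return value


def alias_plan(farm_aliases):
    """farm_aliases: alias name used by SharePoint -> listener to point it at."""
    return {alias: alias_value(listener) for alias, listener in farm_aliases.items()}


# Hypothetical alias names; the listener names are the ones used in this article.
plan = alias_plan({"SPFarm1SQL": "SPFarm1Listener", "SPFarm2SQL": "SPFarm2Listener"})
for alias, data in plan.items():
    print(f"{ALIAS_KEY}\\{alias} = {data}")
```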

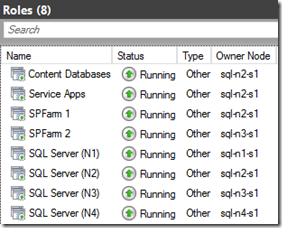

Everything Finished – Let's Review the Setup

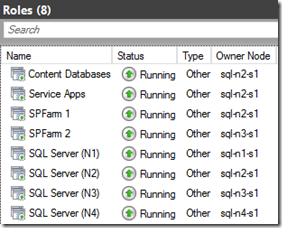

At this point your SQL Server cluster setup should look something like this:

- SQL-N1 (single member failover cluster)

    - SPFarm 1 availability group + listener.

- SQL-N2 (2-server failover cluster)

    - SPFarm 1 availability group + listener.

    - Service Apps availability group.

    - Content database availability group.

- SQL-N3 (2-server failover cluster)

    - SPFarm 2 availability group + listener.

    - Service Apps availability group.

    - Content database availability group.

- SQL-N4 (single member failover cluster)

    - SPFarm 2 availability group + listener.

Your cluster host should now have no fewer than 8 roles in it:

Four failover SQL clusters & four availability groups. Clustering on steroids!
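If you want to sanity-check your own build against that list, the whole layout fits in two small Python structures. This is just a model of the setup reviewed above; "ServiceApps" and "Content" are placeholder group names, since only the SPFarm groups are named in this article.

```python
FAILOVER_INSTANCES = ["SQL-N1", "SQL-N2", "SQL-N3", "SQL-N4"]

# Availability group -> the instances hosting a replica of it.
AVAILABILITY_GROUPS = {
    "SPFarm1": ["SQL-N1", "SQL-N2"],
    "SPFarm2": ["SQL-N3", "SQL-N4"],
    "ServiceApps": ["SQL-N2", "SQL-N3"],  # placeholder name
    "Content": ["SQL-N2", "SQL-N3"],      # placeholder name
}


def clustered_roles():
    """Every role we expect to see in the Cluster Manager."""
    return FAILOVER_INSTANCES + list(AVAILABILITY_GROUPS)


def groups_on(instance):
    """Availability groups that have a replica on the given instance."""
    return sorted(g for g, replicas in AVAILABILITY_GROUPS.items() if instance in replicas)


print(len(clustered_roles()))
for instance in FAILOVER_INSTANCES:
    print(instance, groups_on(instance))
```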

Testing Failovers & Outages

So this is the key goal here then; which SQL Servers can die without SharePoint suffering any outage? How many before we need to use the other farm?

No Farm Failover Needed

First let's see what we can kill without needing to failover to the other farm.

We can technically keep working with up-to 4 SQL Servers offline & just 2 left, which is quite unlikely to ever happen. In this scenario we've lost two clustered roles too as there's no servers left to run them. Assuming the two offline servers weren't in the same failover cluster, we'd still have data-synchronisation between farms too.

Any one SQL Server can go offline without the slightest interruption of service, albeit we'd possibly have to failover the AlwaysOn AG manually, as automatic failover only works for standalone instances.
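The "any one server" claim is easy to check by brute force. The sketch below models only the two farm-local groups and treats an instance as online if at least one of its member servers is up; it deliberately ignores cluster quorum and the shared service-app/content groups, so take it as a simplified illustration rather than a proper availability analysis.

```python
# Failover instance -> the physical servers that can run it.
INSTANCE_MEMBERS = {
    "SQL-N1": {"SQL-N1"},
    "SQL-N2": {"SQL-N2-S1", "SQL-N2-S2"},
    "SQL-N3": {"SQL-N3-S1", "SQL-N3-S2"},
    "SQL-N4": {"SQL-N4"},
}

# Farm-local availability group -> instances holding a replica.
FARM_GROUPS = {"SPFarm1": {"SQL-N1", "SQL-N2"}, "SPFarm2": {"SQL-N3", "SQL-N4"}}


def instances_online(offline_servers):
    """An instance survives while at least one of its servers is still up."""
    offline = set(offline_servers)
    return {name for name, members in INSTANCE_MEMBERS.items() if members - offline}


def farm_group_reachable(group, offline_servers):
    """True if some replica of the farm's group is on an online instance."""
    return bool(FARM_GROUPS[group] & instances_online(offline_servers))


all_servers = set().union(*INSTANCE_MEMBERS.values())
for server in sorted(all_servers):
    status = {g: farm_group_reachable(g, [server]) for g in FARM_GROUPS}
    print(f"{server} offline -> {status}")
```

Losing SQL-N1 plus both SQL-N2 servers, by contrast, leaves SPFarm1 with nothing to run on, which is when you're into farm-failover territory.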

Farm Failover Needed

So what could go offline which would need an entire farm failover then?

In this case we have just one SQL Server running of the six setup, and even then we could still use the 2nd farm. Synchronisation would be stopped but should in theory resume anyway.

Wrap-Up

Setting this kind of architecture up is very complicated, but as you can see it does give the benefit of having a zombie-like SQL backend for SharePoint – one that just won't ever die.

In all honesty I'd only recommend this over SQL Server AlwaysOn for SharePoint DR if SQL/SharePoint uptime is absolutely critical, and so is guaranteeing data movement between SharePoint farms. In reality, it's the guaranteed data movement that this model really gives over the standalone AlwaysOn for SP-DR model, but yet it's quite a lot more complicated to setup.

Another negative is the lack of automatic failover between AlwaysOn instances, but as each instance is a failover instance itself that shouldn't be an issue.

Anyway, in short, this is your solution if costs aren't an issue & uptime is critical.

If you need an uptime solution for more reasonable costs, get AlwaysOn with just standalone instances.

Cheers,

Sam Betts

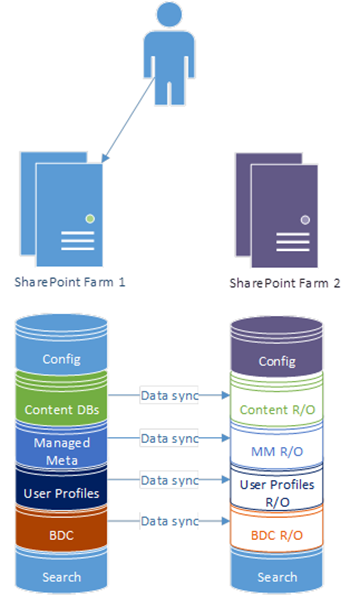

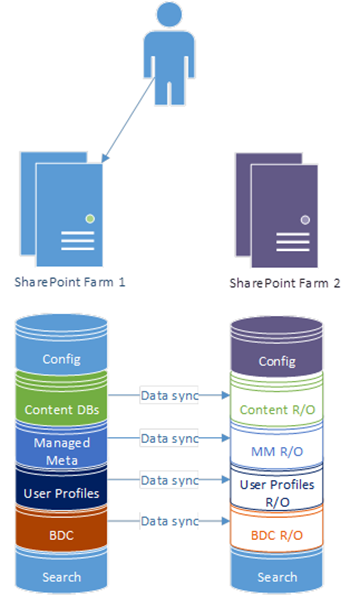

Synchronising Service Applications Between SharePoint Disaster Recovery Farms

A key part of hot-standby/disaster-recovery SharePoint 2013 farms is the principle that only content is synchronised between the two farms. The reason is that we want to reduce the chance that a failure will replicate over to the other farm too, so we just replicate content and keep services separate in each farm.

This is what I mean by SharePoint disaster-recovery & content-syncing, on a basic level:

On failover to the other site, the previous backup becomes the active & gets full read/write access, and the old primary goes into read-only mode (and our users are happy they don't have an outage):

The question of what exactly to sync for the "content" however isn't such a black & white principle; some service applications have to be replicated in lockstep with content to maintain data integrity and others don't, depending on what you use.

What Constitutes Content Replication Exactly?

You would think that's an easy question to answer, but actually it's not. "Content databases" would presumably be the answer and yes, of course those, but the problem is there are other places for "content" and there are dependencies between service-applications to take into account.

What Databases Should Be Replicated then?

If you have any doubts, then I'd say "all except search". It may not be necessary though; for example, term-sets are often heavily used to tag items; provide friendly URLs for pages, etc, etc. So managed-metadata probably needs to be in lockstep with your content-databases.

User profiles is another one. Are user-profiles something that you let users update on their own? If so that'll also need to be data-synced between farms, the same way content-databases are. Also remember; you can add managed terms as custom user-profile properties so again, in this instance you'll need lock-step with managed-metadata/user-profile databases.
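Those rules of thumb can be written down as a small decision helper. This is only my reading of the guidance above turned into Python; the function name, the flags and the list of service-apps are illustrative, and your own farm may well have other dependencies to account for.

```python
SERVICE_APPS = ("managed metadata", "user profiles", "app management", "secure store", "search")


def databases_to_replicate(unsure=False, terms_tag_content=False,
                           users_edit_profiles=False, terms_in_profiles=False):
    """Return which database groups should be synced in lockstep between farms."""
    replicate = {"content"}  # content databases are always replicated
    if unsure:
        # When in doubt: everything except search.
        replicate |= set(SERVICE_APPS) - {"search"}
    if terms_tag_content or terms_in_profiles:
        replicate.add("managed metadata")
    if users_edit_profiles or terms_in_profiles:
        replicate.add("user profiles")
    return replicate


print(sorted(databases_to_replicate(terms_tag_content=True)))
print(sorted(databases_to_replicate(terms_in_profiles=True)))
print(sorted(databases_to_replicate(unsure=True)))
```

Search never appears in the result, whatever you pass in.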