

OS & Framework Patching with Docker Containers - a paradigm shift

When we think of containers, we think of easily packaging our app along with its dependencies. The dockerfile FROM instruction defines the base image, and the app's contents are copied or restored into the image.

We now have a generic package format that can be deployed onto multiple hosts. The host no longer needs to know which version of the stack is used, or even which version of the operating system. And we can deploy any configuration we need, unique to our container.

Patching workloads

In the VM world, we patch workloads by patching the host VM. The developer hands the app over to operations, who deploy it on a VM along with the list of dependencies that must be applied to the VM. Ops then takes ownership, hoping the patching of the OS & Frameworks doesn't negatively impact the running workloads. How often do you think ops does a test run of a patch on a VM workload before deploying it? It's not easy to do, nor is it an expectation in the VM world. We basically patch, and hope we don't get a call that something no longer works...

This workflow relies on post-deployment management. Projects like Spinnaker and Terraform are making great strides in automating how VMs are built, using an immutable infrastructure model. However, is automating VMs the equivalent of the transition from VHS to DVDs?

Are containers simply a better mousetrap?

In our busy lives, we tend to look for quick fixes. We may allocate 30 seconds of dedicated, open-ended thought before we latch onto an idea and want to run with it. We see a pattern, figure it's a better drop-in replacement and boom, we're off applying this new technique.

When recordable DVD players became popular, they were mostly a drop-in replacement for VHS tapes. They were a better format with better quality and no need to rewind, but the workflow was generally the same. Did DVDs become a drop-in replacement for the VHS workflow? Do you remember scheduling a DVD recording, which required setting the clock that was often blinking 12:00am from the last power outage, or was off by an hour because someone forgot how to set it after daylight saving time? At the same time the DVD format was becoming prominent, streaming media became a thing. Although DVDs were a better medium than VHS tapes, you could only watch them if you had the physical media. DVRs and streaming media became the primary adopted solution. Not only were they a better quality format, they also solved the usability problem of integrating with the cable provider's schedule. With OnDemand, Netflix, and other video streaming services, the entire concept of watching videos changed. I could now watch from my set-top box, my laptop in the bedroom, the hotel, or my phone.

The switch to the DVD format is an example of a better mousetrap that didn't go far enough to solve the larger problem. Better, broader options solved a broader set of problems, but adopting them required a paradigm shift.

Base image updates

While you could apply a patch to a running container, this falls into the category of "just because you can, doesn't mean you should". In the container world, we get OS & Framework patches through base image updates. Our base images have stable tags that define a relatively stable version. For instance, the microsoft/aspnetcore image has tags for :1.0, :1.1, and :2.0. The differences between 1.0, 1.1, and 2.0 represent functional and capability changes. Moving between these tags implies there may be a difference in functionality, as well as expanded capabilities. We wouldn't blindly change a tag between these versions and deploy the app into production without some level of validation. Yet looking at the tags, we see the image was last updated hours or days ago, even though the 2.0 version initially shipped months earlier.

To maintain a stable base image, the owners of these base images keep them current with the latest OS & Framework patches. They continually monitor their own base images and rebuild when those are updated. The aspnetcore image updates based on the dotnet image. The dotnet image updates based on the Linux and Windows base images. The Windows and Linux base images are updated by their owners, who test them against the dependencies they know of before releasing.
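To make the idea of a stable tag with freshly patched content concrete, here is a minimal Python sketch. It assumes you can snapshot a mapping of tag to image digest (for example, from your registry) at two points in time; the digest values below are made-up placeholders. A tag whose name stayed the same while its digest changed is exactly the kind of in-place patch update described above.

```python
# Minimal sketch: a "stable" tag such as microsoft/aspnetcore:2.0 keeps its name,
# but the image digest it resolves to changes when patches are applied.
# The digests below are made-up placeholders, not real registry values.


def changed_tags(previous: dict[str, str], current: dict[str, str]) -> list[str]:
    """Return tags present in both snapshots that now resolve to a different digest."""
    return sorted(
        tag for tag, digest in current.items()
        if tag in previous and previous[tag] != digest
    )


yesterday = {
    "microsoft/aspnetcore:1.1": "sha256:aaa111",
    "microsoft/aspnetcore:2.0": "sha256:bbb222",
}
today = {
    "microsoft/aspnetcore:1.1": "sha256:aaa111",  # unchanged
    "microsoft/aspnetcore:2.0": "sha256:ccc333",  # same tag, patched content
}

print(changed_tags(yesterday, today))  # ['microsoft/aspnetcore:2.0']
```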

Windows, Linux, .NET, Java, and Node all provide patches for their published images. The paradigm shift here is providing updated base images with the patches already applied. How can we take advantage of this paradigm shift?

Patching containers in the build pipeline

In the container workflow, we continually build and deploy containers. Once a container is deployed, the presumption is that it's never touched. The deployment is immutable. To make a change, we rebuild the container. We can then test the container before it's deployed. The container might be tested individually, or in concert with several other containers. Once there's a level of comfort, the container(s) are deployed, or scheduled for deployment using an orchestrator. Rebuilding each time and testing before deployment is a change in workflow. But it's a change that enables new capabilities, such as pre-validating that the change will function as expected.

Container workflow primitives

To deliver a holistic solution to OS & Framework patching, several primitives are needed. One model would provide a closed-loop solution where every scenario is known. A more popular approach provides primitives that can be stitched together. If a component needs to be swapped out, for whatever reason, the user isn't blocked.

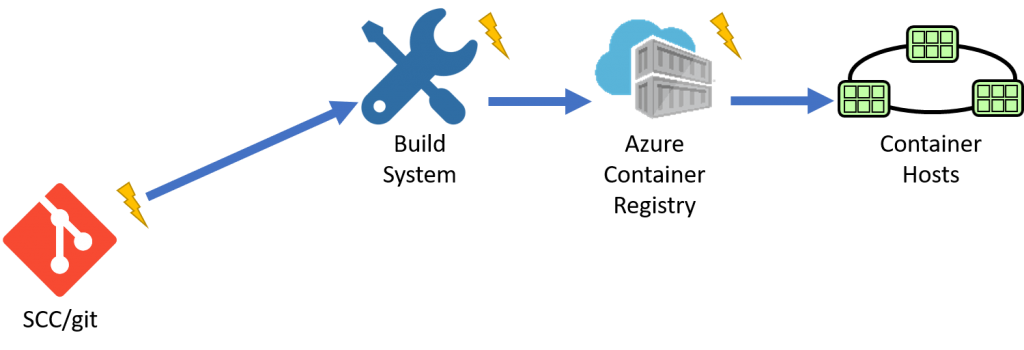

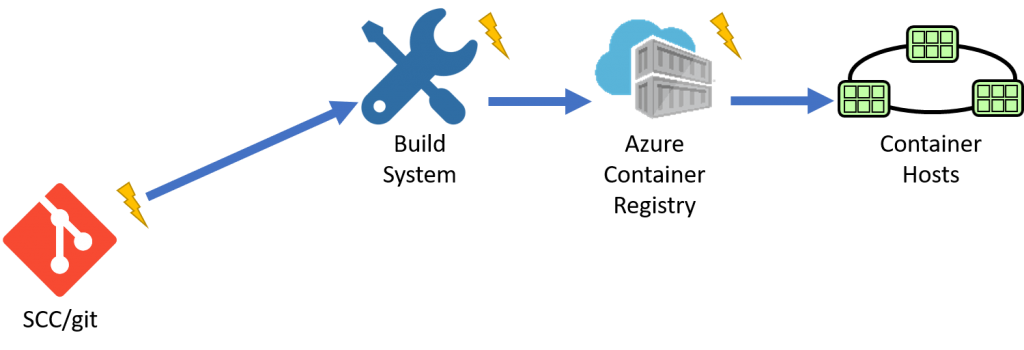

If we follow the source code control (SCC) build workflow, we see that the SCC system notifies the build system. When the build system completes, it pushes to a private registry. When the registry receives updates, it can trigger a deployment, or a release management system can sit in between, deciding when to release.

The primitives here are:

- Source that provides notifications

- Build system that builds an image

- Registry that stores the results

The only thing missing is a source for base images that can provide notifications.
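As a rough illustration of stitching these primitives together, the sketch below wires a source notification to a build step, and the build step to a registry that notifies a deployment listener. Everything here (the `Registry` class, `make_build_system`, the registry name) is hypothetical; the point is only that each stage is a plain callable that can be swapped out independently.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical sketch of the three primitives wired together. Each stage is a
# plain callable, so any one of them can be replaced without touching the others.


@dataclass
class Registry:
    images: dict[str, str] = field(default_factory=dict)  # tag -> build id
    listeners: list[Callable[[str], None]] = field(default_factory=list)

    def push(self, tag: str, build_id: str) -> None:
        self.images[tag] = build_id
        for notify in self.listeners:  # e.g. a deployment or release system
            notify(tag)


def make_build_system(registry: Registry, tag: str) -> Callable[[str], None]:
    """Return a handler that 'builds' a commit and pushes the result."""
    def on_source_change(commit: str) -> None:
        build_id = f"build-{commit}"  # stand-in for a real image build
        registry.push(tag, build_id)
    return on_source_change


deployed: list[str] = []
registry = Registry()
registry.listeners.append(lambda tag: deployed.append(tag))

# The source control system calls this handler when a commit lands.
on_commit = make_build_system(registry, "myregistry.example/myapp:1.0")
on_commit("abc123")

print(registry.images)  # {'myregistry.example/myapp:1.0': 'build-abc123'}
print(deployed)         # ['myregistry.example/myapp:1.0']
```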

Azure Container Builder

Over the last year, we've been talking with customers, exploring the problem area of how to handle OS & Framework patching. How can we enable a solution that fits within current workflows? Do we really want to enable patching running containers? Or can we build on the existing workflows, or on the evolving workflows for containerized builds?

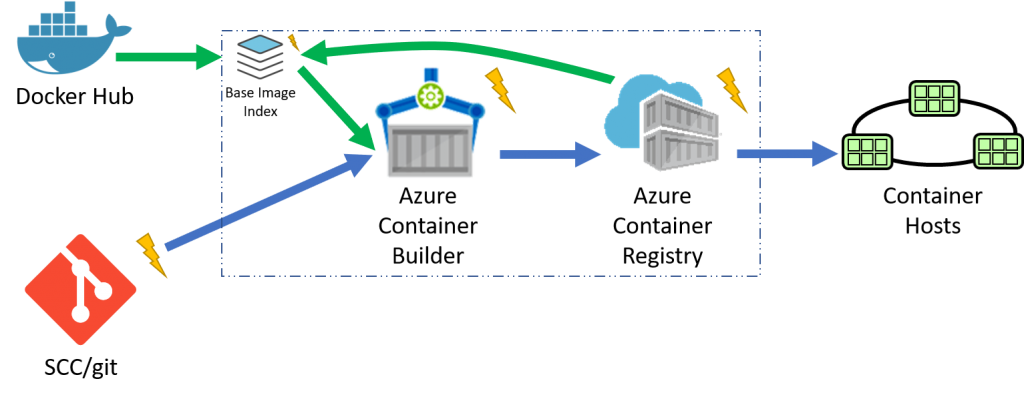

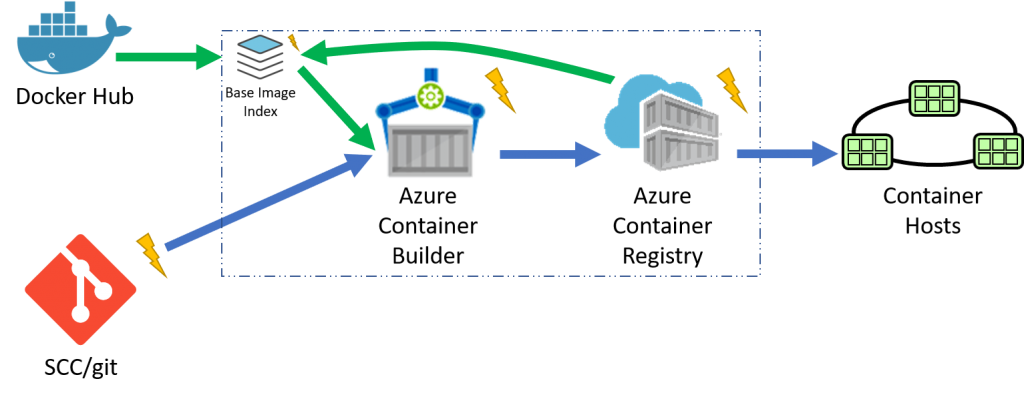

Just as SCC systems provide notifications, the Azure Container Builder will trigger a build based on a base image update. But where will the notifications come from? We need a means to know when base images are updated. The missing piece here is the base image cache, or more specifically, an index of updated image tags. With a cache of public Docker Hub images, and any ACR-based images your container builder has access to, we have the primitives to trigger an automated build. When code changes are committed, a container build will be triggered. When the base image specified in the dockerfile is updated, a container build will be triggered.
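One way to picture the base image trigger: parse the FROM instructions in a dockerfile and check them against an index of recently updated tags. The sketch below is a simplified, hypothetical parser, not how Azure Container Builder is implemented. It skips `--platform` flags and references to earlier multi-stage build stages, but it does not handle ARG substitution, implicit `:latest` tags, or registry-prefix normalization.

```python
# Hypothetical sketch: decide whether a dockerfile needs a rebuild because one of
# its base images appears in an index of updated tags.


def base_images(dockerfile_text: str) -> list[str]:
    """Collect the images named in FROM instructions (simplified parser)."""
    images: list[str] = []
    stages: set[str] = set()  # names declared with "FROM ... AS name"
    for raw in dockerfile_text.splitlines():
        parts = raw.strip().split()
        if not parts or parts[0].upper() != "FROM":
            continue
        args = [p for p in parts[1:] if not p.startswith("--")]  # skip --platform=...
        if not args:
            continue
        image = args[0]
        if image not in stages:  # ignore references to earlier build stages
            images.append(image)
        if len(args) >= 3 and args[1].upper() == "AS":
            stages.add(args[2])
    return images


def should_rebuild(dockerfile_text: str, updated_tags: set[str]) -> bool:
    """True if any base image in the dockerfile is in the updated-tag index."""
    return any(image in updated_tags for image in base_images(dockerfile_text))


dockerfile = """\
FROM microsoft/aspnetcore:2.0
COPY . .
ENTRYPOINT ["dotnet", "myapp.dll"]
"""
updated = {"microsoft/aspnetcore:2.0"}  # hypothetical entries from a base image index
print(should_rebuild(dockerfile, updated))  # True
```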

The additional primitive for base image update notifications is one of the key aspects of the Azure Container Builder. You may choose to use these notifications with your existing CI/CD system. Or, you may choose to use the new Azure Container Builder that can be associated with your Azure Container Registry. All notifications will be available through the Azure Event Grid, providing a common way to communicate asynchronous events across Azure.

Giving it a try...

We're still finalizing the initial public release of the Azure Container Builder. We were going to wait until we had this working in public form. But the more I read posts from our internal Microsoft teams, our field, Microsoft Regional Directors, and customers looking for a solution, the more I realized it's better to get something out for feedback. We've seen the great work the Google Container Builder team has done, and the work AWS CodeBuild has started. Any cloud provider that offers containerized workflows will need private registries to keep their images network-close. We believe containerized builds should be treated the same way.

- What do you think?

- Will this fit your needs for OS & Framework patching?

- How will your ops teams feel about delegating the OS & Framework patching to the development team's workflow?

Steve

Steve Lasker's Web Log - https://SteveLasker.blog

Cheese has been moved to https://SteveLasker.blog

Last week, John Papa posted this interview we did, discussing in part our Silverlight 5 LOB focus....

Author: Steve Lasker Date: 03/04/2011

Completely acknowledging the lack of an integrated local database story with Silverlight, there are...

Author: Steve Lasker Date: 02/01/2011

As was announced at the Firestarter, Silverlight 5 will continue its focus on data and line of...

Author: Steve Lasker Date: 01/14/2011

Well, after 2 years away from DevDiv, I just couldn't stay away. While I had a great time working on...

Author: Steve Lasker Date: 01/06/2011

I've moved... Here's the new blog with details on why and what...

Author: Steve Lasker Date: 02/26/2009

Another great year in Barcelona. In addition to the sessions below, we had several nights out on the...

Author: Steve Lasker Date: 11/19/2008

I always love going to Barcelona. Sure, it's a great city, and loved riding motorcycles into the...

Author: Steve Lasker Date: 11/08/2008

This Wednesday, November 5th 2008, I'll be giving a local user group presentation that will...

Author: Steve Lasker Date: 11/01/2008

This week I presented a session on SQL Server Compact. Video Recording of the session is here. The...

Author: Steve Lasker Date: 10/31/2008

There's been a lot of talk, momentum and questioning for where and how occasionally connected...

Author: Steve Lasker Date: 10/17/2008

After much demand, the latest Platform Builder updates have been released, and they include SQL...

Author: Steve Lasker Date: 08/14/2008

In addition to the 64bit support being a web-only download, I should have also noted that for...

Author: Steve Lasker Date: 08/13/2008

So you want 64bit support so you can set Target = Any. You just installed VS 2008 SP1, but when you...

Author: Steve Lasker Date: 08/12/2008

I'm happy to announce the release of SQL Server Compact - SP1 This release includes several key...

Author: Steve Lasker Date: 08/06/2008

For our Spanish customers, Jose M. Torres just published a book covering SQL Server Compact for our...

Author: Steve Lasker Date: 08/05/2008

It's that time of year again. A quick note on some of the sessions/panel discussions I'll be...

Author: Steve Lasker Date: 05/30/2008

August 6th 2008 UPDATE: SQL Server Compact 3.5 SP1 has shipped: SQL Server Compact 3.5 SP1 Released...

Author: Steve Lasker Date: 05/14/2008

The nice thing about SQL Server Compact is the database can be treated as a document. It's a single...

Author: Steve Lasker Date: 05/14/2008

A while back I posted some info about our intention to ship a native 64bit release: SQL Server...

Author: Steve Lasker Date: 05/12/2008

As part of our vNext release planning, we're heading out on the road to meet some of our customers....

Author: Steve Lasker Date: 05/10/2008

The Sync team has just published a CTP release of Sync Services for ADO.NET on Devices. This...

Author: Steve Lasker Date: 03/05/2008

Jim Springfield, an Architect on the Visual C++ team has just posted a great example of how SQL...

Author: Steve Lasker Date: 02/29/2008

On Wednesday, Jan 16th '08 from 9am-10am PST time, I'll be doing an MSDN Live webcast covering an...

Author: Steve Lasker Date: 01/15/2008

We've been getting a lot of questions regarding mixing versions of SQL Server and SQL Server Compact...

Author: Steve Lasker Date: 01/09/2008

Another great year in Barcelona. First I want to thank our database track owner, Gunther Beersaerts....

Author: Steve Lasker Date: 11/07/2007

Ambrish, one of our great Program Managers for SQL Server Compact has posted some details on the 3.5...

Author: Steve Lasker Date: 08/30/2007

DevConnections, coming November 5-7, is just around the corner. Maybe you want to gamble a little,...

Author: Steve Lasker Date: 08/24/2007

At Tech Ed US '07, and several other events I've been giving a presentation discussing how Sync...

Author: Steve Lasker Date: 08/20/2007

As developers start architecting their apps with Sync Services for ADO.NET they're starting to ask...

Author: Steve Lasker Date: 08/09/2007

A number of people have been a bit confused how to get SQL Server 2005 Compact Edition (also known...

Author: Steve Lasker Date: 08/06/2007

Release UPDATE: We've since shipped SQL Server Compact, so these B2 links are no longer valid. There...

Author: Steve Lasker Date: 07/31/2007

For those that have downloaded Visual Studio 2008 to get the latest version of SQL Server Compact...

Author: Steve Lasker Date: 07/30/2007

We've started to get a number of requests asking whether SQL Server Compact will support 64bit. The answer...

Author: Steve Lasker Date: 07/10/2007

Recently I was asked how well RDA works with Vista. While RDA remains the same, there are some...

Author: Steve Lasker Date: 06/15/2007

Saurab, a PM on the UiFX team posted a screencast on the new application services coming in Visual...

Author: Steve Lasker Date: 05/20/2007

Before joining Microsoft I worked in consulting. We worked hard searching for only the most talented...

Author: Steve Lasker Date: 05/14/2007

In part 1, I used the Visual Studio Orcas Sync Designer to configure and synchronize 3 lookup...

Author: Steve Lasker Date: 03/22/2007

Here's part 1 of the new Sync Designer. In this screen cast I walk through how to cache lookup...

Author: Steve Lasker Date: 03/21/2007

Q: Why does the Orcas Feb CTP Typed DataSet designer not work on Vista? A: Visual Studio Orcas is...

Author: Steve Lasker Date: 03/21/2007

Not surprisingly, we've been getting a lot of great questions about specific features and scenarios for...

Author: Steve Lasker Date: 03/18/2007

Today we launched the public CTP of the Sync Services for ADO.NET. This is the current name of...

Author: Steve Lasker Date: 01/22/2007

The 5 day blackout, holidays, vacation, house projects, and crippling snow storms that have kept my...

Author: Steve Lasker Date: 01/16/2007

Well, after several name changes, power outages, the holidays, and crippling winter storms, we've...

Author: Steve Lasker Date: 01/16/2007

We routinely get requests, complaints, or sometimes even threats, (If you don't enable it, I'll use...

Author: Steve Lasker Date: 11/27/2006

Just as Bill was finishing his Hitchhikers Guide to Visual Studio and SQL Server, SQL Server Compact...

Author: Steve Lasker Date: 11/14/2006


Patching Docker Containers - The Balance of Secure and Functional

PaaS, IaaS, SaaS, CaaS, …

The cloud is evolving at a rapid pace. We have increasingly more options for how to host and run the tools that empower our employees, customers, friends and family.

New apps depend on the capabilities of underlying SDKs, frameworks, services, and platforms, which in turn depend on operating systems and hardware. Every layer of this stack is constantly moving. We want and need them to move and evolve. And while our "apps" evolve, bugs surface. Some are simple. Some are more severe, such as the dreaded vulnerability that must be patched.

We're seeing a new tension where app authors, companies, and enterprises want secure systems, but don't want to own the patching. It's great to say the cloud vendor should be responsible for the patching, but how do you know the patching won't break your apps? Just because the problem gets moved down the stack to a different owner doesn't mean the behavior your apps depend upon won't be impacted by the "fix".

I continually hear the tension between IT and devs. IT wants to remove a given version of the OS. Devs need to understand the impact of IT updating or changing their hosting environment. IT wants to patch a set of servers and needs to account for downtime. When does someone evaluate whether the pending update will break the apps? Which is more important: a secure platform, or functioning apps? If the platform is secure but the apps don't work, does your business continue to operate? If the apps continue to operate but expose a critical vulnerability, there are many stories of companies that failed as a result.

So, what to do? Will containers solve this problem?

There are two layers to think about: the app and the infrastructure. We'll start with the app layer.

Apps and their OS

One of the major benefits of containers is packaging the app and the OS together. The app can take dependencies on behaviors and features of a given OS. Developers package it up in an image, push it to a container registry, and deploy it. When the app needs an update, the developers write the code, submit it to the build system, and test it (that's an important part…); if the tests succeed, the app is updated. If we look at how containers are defined, we see a lineage of dependencies.

An overly simplified version of our app dockerfile may look something like this:

```dockerfile
FROM microsoft/aspnetcore:1.0.1
COPY . .
ENTRYPOINT ["dotnet", "myapp.dll"]
```

If we look at the dockerfile for microsoft/aspnetcore:1.0.1:

```dockerfile
FROM microsoft/dotnet:1.0.1-core
RUN curl packages…
```

Drilling in further, the dotnet Linux image shows:

```dockerfile
FROM debian:jessie
```

At any point, one of these images may get updated. If the updates are functional, the tags should change, indicating a new version that developers can opt into. However, if a vulnerability or some other fix is introduced, the update is published under the same tag, and notifications are sent between the different registries indicating the change. The Debian image takes an update. The dotnet image takes the update and rebuilds. mycriticalapp is notified, rebuilds, and redeploys. Or should it?

Now you might remember that important testing step. At any layer of these automated builds, how do we know the framework, the service, or our app will continue to function? Tests. By running automated tests, each layer's owner can decide whether it's ready to proceed. It's incumbent on the public image owners to make sure their dependencies don't break them.
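The lineage and test gates above can be sketched as a walk down the dependency chain. In this hypothetical Python example, each image rebuilt after an update runs its tests; if they pass, the next layer down is notified, and if they fail, propagation stops there so the previous image stays in use. The `CHILDREN` map mirrors the three dockerfiles above (a simple chain), and `tests_pass` stands in for each owner's automated tests.

```python
from collections import deque
from typing import Callable

# Hypothetical lineage from the dockerfiles above: parent -> images built FROM it.
CHILDREN = {
    "debian:jessie": ["microsoft/dotnet:1.0.1-core"],
    "microsoft/dotnet:1.0.1-core": ["microsoft/aspnetcore:1.0.1"],
    "microsoft/aspnetcore:1.0.1": ["mycriticalapp"],
}


def propagate(updated: str, tests_pass: Callable[[str], bool]) -> tuple[list[str], list[str]]:
    """Rebuild everything downstream of `updated`, stopping below any failing layer.

    Returns (published, blocked): images whose rebuild passed its tests, and
    images whose rebuild failed its tests and therefore was not published.
    """
    published: list[str] = []
    blocked: list[str] = []
    queue = deque(CHILDREN.get(updated, []))
    while queue:
        image = queue.popleft()
        if tests_pass(image):
            published.append(image)
            queue.extend(CHILDREN.get(image, []))  # notify the next layer down
        else:
            blocked.append(image)  # keep running the previous, known-good image
    return published, blocked


print(propagate("debian:jessie", lambda image: True))
print(propagate("debian:jessie", lambda image: image != "microsoft/aspnetcore:1.0.1"))
```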

By building an automated build system that not only builds your code when it changes, but also rebuilds when the dependent images change, you're empowered with the information to decide how to proceed. If the update passes its tests and the app simply updates, life is good. You might be on vacation and see news of a critical vulnerability. You check the health of your system, and you can see that a build traveled through, passed its tests, and your apps are continuing to report a healthy status. You can go back to your drink at the pool bar knowing your investments in automation and containers have paid off.

What about the underlying infrastructure?

We've covered our app updates, and the dependencies they must react to. But what about the underlying infrastructure that's running our containers? It doesn't really matter who's responsible for it. If the customer maintains it, they're annoyed that they must apply patches, but they're empowered to test their apps before rolling out the patches. If we move the responsibility to the cloud provider, how does the provider know whether the update will impact the apps? Salesforce has a great model for this, as they continually update their infrastructure. If your code uses their declarative model, they can inspect your code to know whether it will continue to function. If you write custom code, you must provide tests with at least 75% code coverage. Why? So Salesforce can validate that their updates won't break your custom apps.

Containers are efficient in size and startup performance because they share the kernel of the host OS. When a host OS is updated, how does anyone know it won't impact the running apps in a bad way? And how would they be updated? Does each customer need to schedule downtime? In the cloud, the concept of downtime shouldn't exist.

Enter the orchestrator…

A basic premise of containerized apps is that they're immutable. Another aspect developers should understand: any one container can and will be moved. It may fail, the host may fail, or the orchestrator may simply want to shuffle workloads to balance the overall cluster. A specific node may become over-utilized by one of many processes. Just as your hard drive defrags and moves bits without you ever knowing, the container orchestrator should be able to move containers throughout the cluster. It should be able to expand and shrink the cluster on demand. And that is the next important part.

Rolling Updates of Nodes

If the apps are designed to have individual containers moved at any time, and if nodes are generic and don't have app-centric dependencies, then the same infrastructure used to expand and shrink the cluster can be used to roll out updates to nodes. Imagine the cloud vendor is aware of, or owns, the nodes. The cloud vendor wants or needs to roll out an underlying OS update, or perhaps even a hardware update. It asks the orchestrator to stand up some new nodes with the new OS and/or hardware updates. The orchestrator starts to shift workloads to the new nodes. While we can't really run automated tests on the image, the app can report its health status. As the cloud vendor updates nodes, it monitors the health status. If it sees failures, that's the signal to stop the update, de-provision the new node, and resume on the previous nodes. The cloud vendor can then determine whether it's something they must fix, or whether they must notify the customer that update x is being applied but the apps aren't functioning. The cloud vendor provides information for the customer to test, identify, and fix their app.
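Here is a minimal sketch of the rolling node update described above, assuming hypothetical `upgrade` and `healthy` callbacks supplied by the orchestrator and by the apps' health reporting. Nodes are replaced one at a time; on the first unhealthy replacement, the rollout halts and that node is reverted to the previous one.

```python
from typing import Callable


def rolling_update(
    nodes: list[str],
    upgrade: Callable[[str], str],
    healthy: Callable[[str], bool],
) -> tuple[list[str], bool]:
    """Replace nodes one at a time, halting on the first unhealthy replacement.

    Returns the resulting node list and whether the rollout completed.
    """
    current = list(nodes)
    for i, old in enumerate(nodes):
        new = upgrade(old)    # stand up a node with the new OS and/or hardware
        current[i] = new      # the orchestrator shifts this node's workloads to it
        if not healthy(new):  # apps report their health status after moving
            current[i] = old  # de-provision the new node, resume on the previous one
            return current, False
    return current, True


nodes = ["node-1", "node-2", "node-3"]
print(rolling_update(nodes, lambda n: n + "-v2", lambda n: n != "node-2-v2"))
# (['node-1-v2', 'node-2', 'node-3'], False)
```

In this sketch, nodes that were already updated and validated keep the update when a later node fails; whether a vendor should also revert those is a policy choice the sketch does not make.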

Dependencies

The dependencies to build such a system look something like this:

- Unit and functional tests for each app

- A container registry with notifications

- Automated builds that can react to image update notifications as well as app updates

- Running the automated functional tests as part of the build and deploy pipeline

- Apps designed to fail and be moved at any time

- Orchestrators that can expand and contract on demand

- Health checks for the apps to report their state as they're moved

- Monitoring systems to notify the cloud vendor and customer of the impact of underlying changes

- Cloud vendors to interact with their orchestrators to roll out updates, monitor the impact, roll forward or roll back

The challenges of software updates, vulnerabilities, bugs will not go away. The complexity of the layers will likely only increase the possibility of update failures. However, by putting the right automation in place, customers can be empowered to react, the apps will be secure and the lights will remain on.

Steve

Relaxing ACR storage limits, with tools to self manage

When we created the tiered SKUs for ACR, we built the three tiers with the following scenarios in mind:

- Basic - the entry point to get started with ACR. Not intended for production, due to its storage size, limited webhooks, and throughput SLA. Basic registries are encrypted at rest and geo-redundantly stored using Azure Blob Storage, as we believe these are standards that should never be skipped.

- Standard - the most common registry, where most customers will be fine with the webhooks, storage amount and throughput.

- Premium - for the larger companies that have more concurrent throughput requirements, and global deployments.

The goal was never to force someone up a tier beyond the Basic tier, or worse, to cause a build failure when a registry fills up. Well, we all learn. :) It seems customers were quick to enable automation (awesome!) and are quickly filling up their registries.

There are two fundamental things we're doing:

- ACR will relax the hard constraints on the size of storage. When you exceed the storage associated with your tier, we will charge an overage fee of $0.10/GiB. We did put some new safety limits in place. For instance, Premium has a safety limit of 5 TB. If you think you'll really need more, just let us know, and help us understand. We're trying to optimize the experience, and very large registries would require some different handling on our side.

- We have moved up the Auto-Purge feature, allowing customers to manage their dead image pool. Using Auto-Purge, customers will be able to declare a policy by which images are automatically deleted after a certain time. You'll also be able to set the TTL on images. We've heard customers say they need to keep any deployed artifact for __ years. Wow, long time... Eventually, we'll be able to know when an image is no longer deployed, and you'll be able to set the TTL for __ units after its last use.
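The Auto-Purge policy format hasn't been designed yet, so the following is only a hypothetical sketch of the TTL idea: select images older than a TTL that are not currently deployed. The `Image` type and its `deployed` flag are assumptions for illustration; per the post, knowing when an image is no longer deployed is future work.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Image:
    tag: str
    pushed: datetime
    deployed: bool  # hypothetical flag; deployment tracking is future work


def purge_candidates(images: list[Image], ttl: timedelta, now: datetime) -> list[str]:
    """Tags older than the TTL that are not currently deployed."""
    return [img.tag for img in images if not img.deployed and now - img.pushed > ttl]


now = datetime(2018, 2, 1)
images = [
    Image("myapp:build-101", datetime(2017, 10, 1), deployed=False),
    Image("myapp:build-102", datetime(2017, 10, 2), deployed=True),
    Image("myapp:build-140", datetime(2018, 1, 25), deployed=False),
]
print(purge_candidates(images, ttl=timedelta(days=30), now=now))  # ['myapp:build-101']
```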

As customers hit the limits, we wanted to allow them to store as much as they want, simply charging for their usage, and to enable them to manage their storage with automated features.
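A small worked example of the overage charge at $0.10/GiB. The included storage per tier isn't stated here, so `included_gib` below is a made-up value for illustration, and the billing period is not modeled.

```python
def overage_charge(used_gib: float, included_gib: float, rate_per_gib: float = 0.10) -> float:
    """Fee for storage beyond the tier's included amount, in dollars."""
    return round(max(0.0, used_gib - included_gib) * rate_per_gib, 2)


# included_gib is a hypothetical value, not a published ACR quota.
print(overage_charge(used_gib=150, included_gib=100))  # 5.0  (50 GiB over x $0.10)
print(overage_charge(used_gib=80, included_gib=100))   # 0.0  (within the tier)
```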

So, when will we get this?

The overage meters will start at the end of February. Early next week, we'll start the design for the auto-purge policies. As we know more, I'll post an update.

Thanks for the continued feedback,

Steve

Some great docker tools

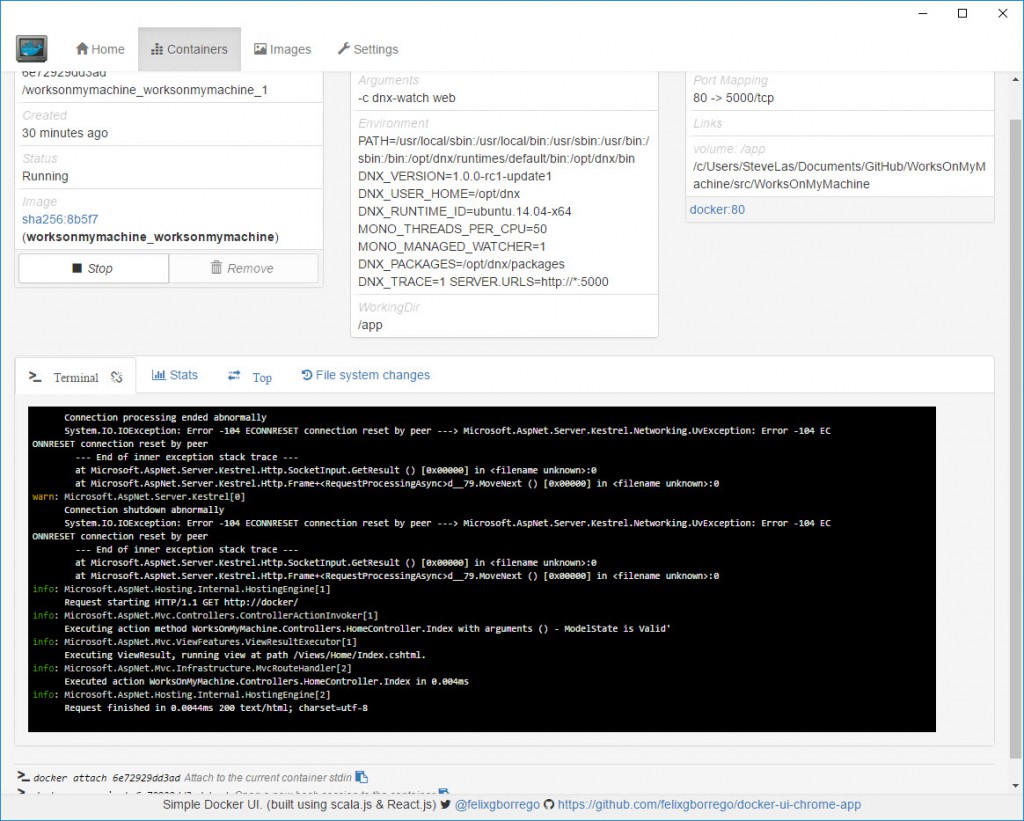

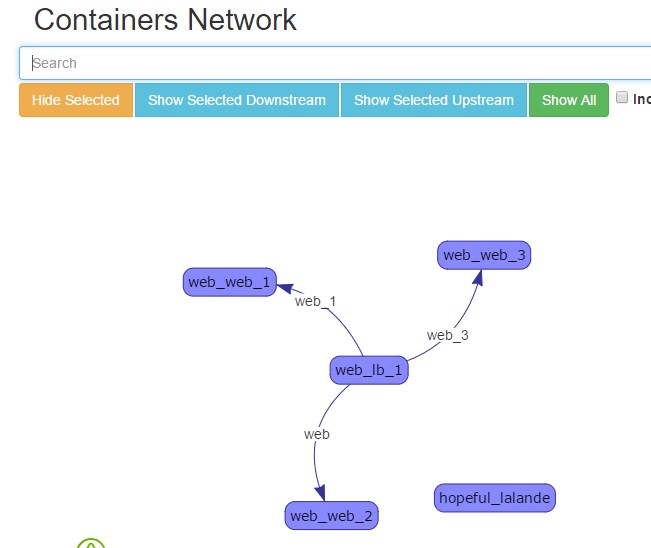

Here are a few Docker tools I've started using to help diagnose issues:

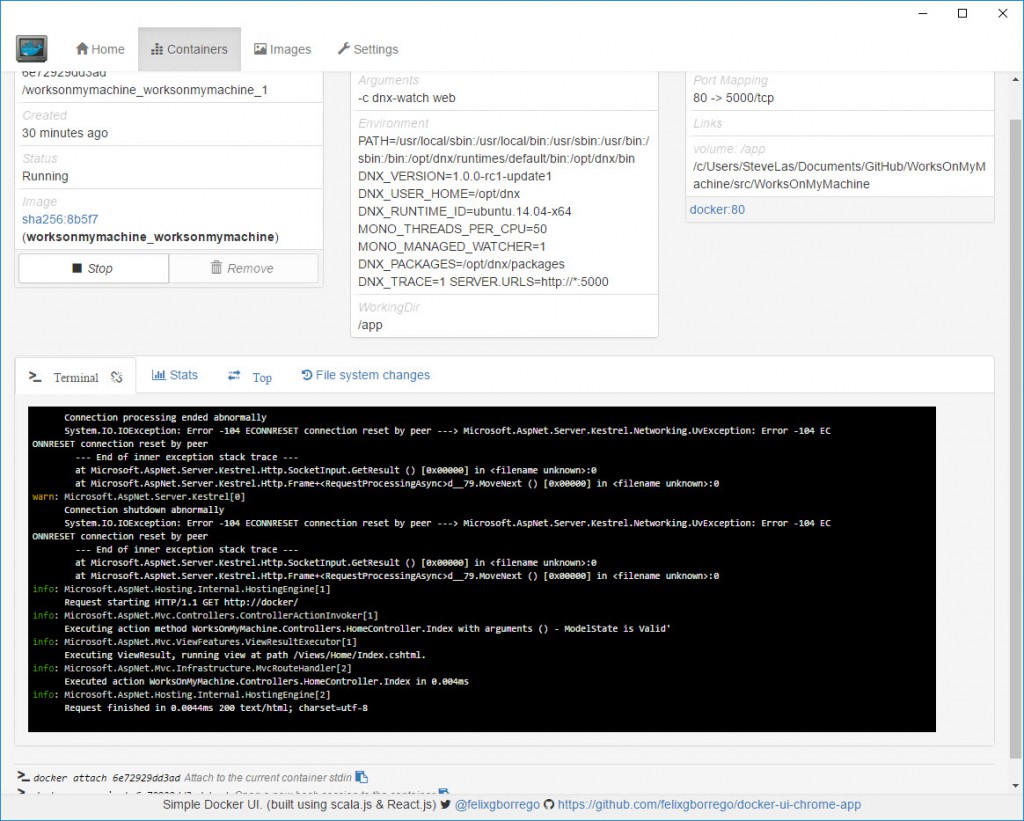

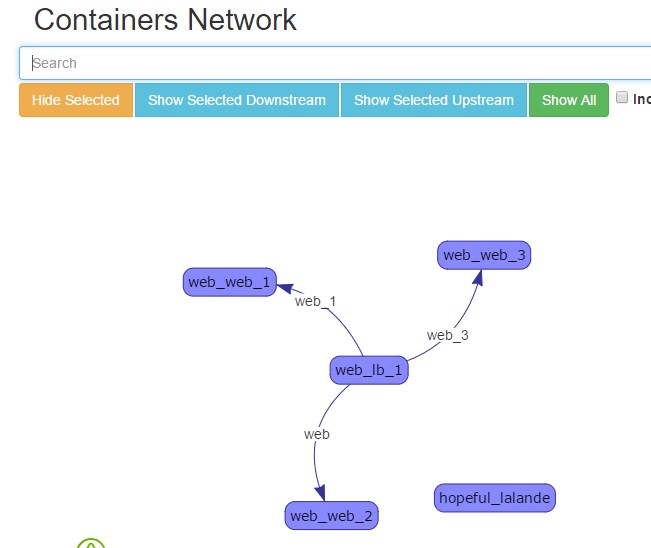

offered by felix

A Google Chrome plugin that lets you view your images and running containers, including their logs. No more docker ps, docker logs [container id].

by Michael Crosby (crosbymichael.com), Kevan Ahlquist (kevanahlquist.com)

Run with the following command:

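```shell
# Publish DockerUI's port 9000 on host address 10.20.30.1, port 80, and mount
# the Docker socket so the UI can query the local Docker daemon.
docker run -d -p 10.20.30.1:80:9000 --privileged -v /var/run/docker.sock:/var/run/docker.sock dockerui/dockerui
```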

I've started deploying this on all my nodes when I'm looking to understand how containers are deployed and interacting.

I'll keep updating this as I find new tools.

If you have your favorite, comment away...

Steve

Visual Studio 2015 Connected Services in 7 minutes

A quick overview in prep for //build & Ignite

https://channel9.msdn.com/Series/ConnectOn-Demand/227

With a message of retiring the "Can't Touch This" style of coding.

Thanks to the MS Studios folks who took the extra effort to help put this together.

Steve

Visual Studio 2015 CTP6 and Salesforce Connected Services

Just a quick note that with the release of Visual Studio 2015 CTP 6, we've updated the Connected Service Provider for Salesforce.

Since Preview, we've:

- Improved the OAuth refresh token code to support a pattern that should handle more issues with less code. We've been working with the Azure AD folks on this common pattern.

- Now retrieve the ConsumerKey automatically, avoiding the manual trip to the Salesforce management UI to copy and paste the value

- Improved the T4 template support to save any changes you've made to your customized templates. Be sure to read the new Customizing section in the guidance docs.

- Improved performance by caching the Salesforce .NET NuGet packages along with the installation of the connected services provider
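The refresh token pattern itself isn't shown here, so this is a generic, hypothetical sketch of one common approach: call the service with the cached access token and, if it's rejected as expired, exchange the refresh token for a new access token and retry once. The names (`Unauthorized`, `call_with_refresh`, the fake service) are illustrative only and are not the code generated by the Salesforce Connected Service.

```python
from typing import Callable


class Unauthorized(Exception):
    """Hypothetical error raised when the service rejects an expired access token."""


def call_with_refresh(
    call: Callable[[str], str],
    tokens: dict[str, str],
    refresh: Callable[[str], str],
) -> str:
    """Call the service; on Unauthorized, refresh the access token once and retry."""
    try:
        return call(tokens["access_token"])
    except Unauthorized:
        tokens["access_token"] = refresh(tokens["refresh_token"])
        return call(tokens["access_token"])  # a second failure propagates to the caller


# Fake service and token endpoint, for illustration only.
def fake_call(token: str) -> str:
    if token != "fresh":
        raise Unauthorized()
    return "accounts: 3"


tokens = {"access_token": "expired", "refresh_token": "r1"}
print(call_with_refresh(fake_call, tokens, lambda refresh_token: "fresh"))  # accounts: 3
print(tokens["access_token"])  # fresh
```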

Thanks for all the feedback that's gotten us this far.

We're still looking for feedback before we wrap up the Release Candidate. There are a number of channels:

Connected Services SDK

For those looking to build their own Connected Service Provider, we're also getting ready to make the Connected Services SDK available soon.

Thanks, and happy service coding,

Steve

Visual Studio Docker tools support for Visual Studio 2015 and 2017

With the Visual Studio 2017 RC release, we've started down the path to finally shipping an official version of Visual Studio Docker tools; enabling developers to locally develop and debug containerized workloads.

The latest Visual Studio 2017 RC Docker Tools added a number of anticipated features:

- Multi-container debugging, supporting true microservice scenarios

- Windows Server Containers for .NET Framework apps

- Addition of a CI build definition using a docker-compose.ci.build.yml file at the solution level.

- Return of the Publishing experience that integrates the newly released public preview of the Azure Container Registry and Azure App Service

- Configure Continuous Integration experience for setting up CI/CD with VSTS to Azure Container Service

We also spent a lot of time improving the overall quality:

- Performance for first and subsequent debugging sessions, keeping the containers running

- Focus on optimized images for production deployments

- Cleanup of the Docker files: consolidating on a single dockerfile for both debug and release, and factoring the docker-compose files into a hierarchy that leverages the docker-compose -f flag, rather than maintaining parallel files.

- Better handling for volume sharing failures - at least providing better insight
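To illustrate the hierarchy idea, here is a simplified Python sketch of how an override file layered on a base file (as with `docker-compose -f base.yml -f override.yml`) adds to or replaces settings. The file names and contents are hypothetical, and this is an approximation: mappings are merged recursively and later values win, while lists (such as `ports`) are replaced wholesale here, whereas Compose has its own merge rules for multi-value options.

```python
from typing import Any


def merge(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Recursively merge mappings; on conflicts the override's value wins.

    Simplification: lists and scalars are replaced wholesale, unlike Compose,
    which has special rules for multi-value options such as ports and volumes.
    """
    result = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = value
    return result


def merge_files(*files: dict[str, Any]) -> dict[str, Any]:
    """Apply files left to right, like repeated -f flags."""
    merged: dict[str, Any] = {}
    for f in files:
        merged = merge(merged, f)
    return merged


# Hypothetical contents of a base file and a debug override file.
base = {"services": {"myapp": {"image": "myapp", "build": {"context": "."}}}}
debug = {"services": {"myapp": {"image": "myapp:dev",
                                "environment": {"ASPNETCORE_ENVIRONMENT": "Development"}}}}

print(merge_files(base, debug)["services"]["myapp"]["image"])  # myapp:dev
```

To see the merge Compose itself performs, `docker-compose -f base.yml -f override.yml config` prints the combined configuration.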

There are a bunch of other great features and quality items that went into the Visual Studio 2017 RC release. It would be great to hear which you like the most, or would like the most.

What about Visual Studio 2015?

The matrix of support scenarios is quite complex, particularly now that we ship inside Visual Studio. The current Visual Studio Docker Tools also focus on .NET Core with Linux, with the addition of .NET Framework targeting Windows Server Containers. While .NET Framework continues to be supported on VS 2015, the current .NET tooling has moved to Visual Studio 2017.

We also have a backlog of items that are required for RTW, as well as customer asks, including:

- Open Source the Visual Studio Docker Tools

- Localization

- Complete Perf work

- Cleanup some of the artifacts left behind (solution level obj folder)

- Converge the two debugging buttons, as we need better support from VS for solution-level debugging

- Add Nano Container support for .NET Core

- Continue to improve perf for Windows Containers

- Add Visual Studio for Mac docker tooling

- Image renaming - as the image and service names are referenced in several docker-compose.*.yml files

- Language services for dockerfile and docker-compose.*.yml files

Attempting to keep both the Visual Studio 2015 and Visual Studio 2017 ship vehicles going would be a fairly large effort, and if we took that on, a number of the above items would have to fall below the cut line.

Going forward, past Visual Studio 2017, we absolutely will support an N-1 support policy. Whether that's Visual Studio 2017 + 1/-1, or Visual Studio updates, we'll have to see.

However, at this point, Docker Containers are a relatively new technology with a lot of rapid changes. We believe customers would prefer us to keep up, and even lead, in the tooling space, as we also believe we'll attract far more customers with new features than by slowing down to support the current scenarios.

What do you think?

Steve

VSLive 2015, Connected Services & Docker Tools for .NET Presentations

Last week, I had the opportunity to talk about Connected Services and Docker Tools for .NET.

Thanks to Brian Randal for chairing VSLive and for the chance to talk about these two cool topics.

The presentations are here:

TH19 Docker for .NET Developers – What You Need to Know

Docker has become one of the hottest topics for developer teams targeting either on-prem or in the cloud. In this session, we'll give a brief overview of why .NET developers should care about Docker, delve into how Docker, Docker Compose, and Docker Swarm work, and show how to build, test, diagnose, and deploy .NET apps to both Linux and Windows Server containers. You'll learn how Visual Studio makes it easy to deploy your app to a container and how to build an automated CI pipeline that deploys to a container using Visual Studio Online.

Since I'm new to Docker containers, and I sensed many others were as well, I built the presentation around what it is and why you should care. Since the session was pitched as "what you need to know as a .NET developer", I tried to make it fun and relevant to preparing .NET apps for Containerisms.

TH15 On The Shoulders of Giants, Building Apps That Consume Modern SaaS Endpoints with Visual Studio 2015

Enterprises are expected to build modern apps and ship them to their customers at an increasingly faster pace, with increasing expectations for the functionality they provide. In this session, we demonstrate how to easily build apps that consume services from SaaS vendors like Microsoft and Salesforce. We walk through how you can use Microsoft Visual Studio 2015 to build web and mobile apps, enabling your company to deliver increasing functionality with modern services while focusing on the unique differentiators of your business.

If you're using Containers or Connected Services, I'd love to hear about your experience overall.

Thanks,

Steve

Why doesn't Docker Tools for Visual Studio 2015 show the [New] button?

If you've attempted to install the Docker Tools for Visual Studio - August Release since Friday, August 21st, you may have fallen into the trap of not reading the descriptive text on the download page, which explains what we should have done for you.

Quick answer: Follow the detailed instructions for installing Microsoft ASP.NET and Web Tools 2015 (Beta6) – Visual Studio 2015

Longer Answer - what happened:

In addition to supporting Windows Containers, the August release of Docker Tools for Visual Studio was updated to support Visual Studio 2015 RTM. Some changes to Web Tools Essentials (WTE) between RC and RTM didn't get fully checked in and completed in time. As a result, to make the Docker Tools work with ASP.NET publishing, we had to take an updated version of WTE. Rather than ship one-off versions of WTE, and since Docker Tools for Visual Studio targets developers working with ASP.NET 5, we aligned our install experience with the Beta releases of WTE.

When we shipped the 0.6 release last Wednesday, we were chaining in WTE Beta 6. If you were on a full English version of Visual Studio, life was good: a nice, simple (albeit long) experience. Unfortunately, since the preview of Docker Tools for VS isn't yet localized, developers with non-English versions of VS would end up in a broken state, as we weren't chaining in the language packs for WTE. To keep others from falling into this broken state, last Friday we pulled WTE from the Docker Tools installer and placed a nice little ToDo link on the download page of the extension. It was a quick decision that we debated heavily, as there were concerns others would fall into this trap, and we apologize.

We are actively working to fix the install experience in two phases:

- Phase 1 - We will ship an update to the Docker Tools for Visual Studio that will block the install until you first install the proper beta version of WTE. This will also include a few bug fixes that we've found since last Wednesday. We hope to have this out soon.

- Phase 2 - Docker Tools got caught in this spot because the Web Publishing Experience doesn't have an extensibility model, which meant we needed to ship all of WTE just to get the Docker hook in. The current plan is to have this extensibility point in place for Visual Studio 2015 Update 1. This will allow us to easily rev the Docker Tools without having to redistribute updates to WTE.

Again, we really appreciate all the early adopters that are trying out our tools, and providing feedback. Your feedback is very important, and we are working hard to give the best install experience we can based on all the moving parts. These days, we are working more iteratively, giving you early access to work in progress, and sometimes portions are a bit rough, including the install experience.

Thanks for your patience,

Steve

Windows Container Video Series

The Windows Container Team has been working to provide some content, including a series of videos for how to use an early preview of Windows Containers.

To use the Visual Studio Tools for Docker, don't forget:

Thanks,

Steve

Working with ACR Geo-replication notifications

Azure Container Registry geo-replication provides a single control plane across Azure's global footprint. You can opt into the regions where you'd like your registry to offer a network-close, local experience.

However, there are some important aspects to consider.

ACR geo-replication is multi-master. You can push to any region, and ACR will replicate the manifests and layers to all other regions you've opted into. However, replication takes time, depending on the size of the image layers. Without understanding this latency, a pull that arrives before replication completes can look like a failure. We also recently realized the errors we report can add to the confusion.

Let's assume you have a registry that replicates to:

- West US

- East US

- Canada Central

- West Europe

You have developers in both West US and West Europe, with production deployments in all regions. ACR uses Azure Traffic Manager to route each pull to the registry replica closest to where the pull originates. The registry URL remains the same, which means it's important to understand the semantics.

Image flow

From West US, I push a new tag: stevelasdemos.azurecr.io/samples/helloworld:v1. I can immediately pull that tag from West US, as that's the local registry I pushed to.

However, if I immediately attempt to pull the same tag from West Europe, the pull will fail, as the tag doesn't exist there yet. The pull fails even if I issue the docker pull from West US... Hmm, why? Docker uses a client/server model, and the pull is executed by the server (the Docker host), not by the client.

I work in West US, but my docker client is connected to a docker host running in West Europe. When I issue a docker pull, my client just proxies the request to that host. When the host executes the pull, Traffic Manager routes it to the closest registry, which is in West Europe.

If I remote/ssh into a box in West Europe, or execute a helm deploy from West US to West Europe, the same flow happens: the pull is initiated from West Europe. That is actually what we want, as we want a local, fast, reliable, cheap pull. But how do I know when I can pull from West Europe?
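To make this flow concrete, here is a toy Python model. It is an illustration under simplifying assumptions, not how ACR is implemented. Each region holds its own copy of manifests. A push is visible only in the region it landed in until replication runs. A pull is resolved in the region of the Docker host that executes it. The class and method names are hypothetical.

```python
class ManifestUnknown(Exception):
    """Raised when a region has no manifest for the requested reference yet."""


class GeoRegistry:
    """Toy model of a geo-replicated registry (hypothetical, not ACR's implementation)."""

    def __init__(self, regions):
        # Each region keeps its own blobs and its own "repo:tag" -> layer digests map.
        self.replicas = {r: {"blobs": set(), "manifests": {}} for r in regions}
        self.pending = []  # (source_region, reference) pairs not yet replicated

    def push(self, region, reference, layers):
        """A push lands in the region it was routed to and is visible there at once."""
        replica = self.replicas[region]
        replica["blobs"].update(layers)
        replica["manifests"][reference] = list(layers)
        self.pending.append((region, reference))

    def replicate(self):
        """Copy pending pushes to every other region: layers first, then the manifest."""
        for source, reference in self.pending:
            layers = self.replicas[source]["manifests"][reference]
            for region, replica in self.replicas.items():
                if region != source:
                    replica["blobs"].update(layers)
                    replica["manifests"][reference] = list(layers)
        self.pending.clear()

    def pull(self, docker_host_region, reference):
        """Resolve a pull in the region of the Docker host that executes it."""
        manifest = self.replicas[docker_host_region]["manifests"].get(reference)
        if manifest is None:
            raise ManifestUnknown(f"{reference} is not in {docker_host_region} yet")
        return manifest


registry = GeoRegistry(["westus", "eastus", "canadacentral", "westeurope"])
ref = "samples/helloworld:v1"
registry.push("westus", ref, ["sha256:aaa", "sha256:bbb"])
print(registry.pull("westus", ref))  # the local region sees the tag immediately
try:
    # The client may sit in West US, but the host executing the pull is in West Europe.
    registry.pull("westeurope", ref)
except ManifestUnknown as err:
    print("manifest unknown:", err)
registry.replicate()
print(registry.pull("westeurope", ref))  # succeeds once replication has run
```

The key design point the model captures is that routing depends on where the pull *executes*, so the same `docker pull` typed in West US can succeed or fail depending on which host it is sent to.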

Image Notifications

ACR supports webhook notifications that fire when images are pushed or deleted. Webhooks are regionalized, so you can create a webhook for each region.

In a production deployment, you'll likely want to listen to these webhooks and deploy to each region as the images arrive there.
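A webhook listener can record which regions have reported a push before triggering a regional deployment. The sketch below is a minimal, hypothetical helper that makes two assumptions:

- The push payload carries `action`, `target.repository`, and `target.tag` fields. Verify the exact payload shape against the ACR webhook documentation.
- Your receiver knows which region fired each webhook, for example because you configured a separate endpoint URL for each regional webhook.

```python
class ReplicationTracker:
    """Hypothetical helper: tracks which regions have reported a push per repo:tag."""

    def __init__(self, regions):
        self.regions = set(regions)
        self.seen = {}  # "repo:tag" -> set of regions whose webhook fired

    def on_webhook(self, region, payload):
        """Record one regional webhook; return the repo:tag for pushes, else None."""
        if payload.get("action") != "push":
            return None
        target = payload.get("target", {})
        reference = f'{target.get("repository")}:{target.get("tag")}'
        self.seen.setdefault(reference, set()).add(region)
        return reference

    def ready_in(self, region, reference):
        """True once this region's webhook reported the push, so a local pull can succeed."""
        return region in self.seen.get(reference, set())

    def fully_replicated(self, reference):
        """True once every opted-in region has reported the push."""
        return self.regions <= self.seen.get(reference, set())


tracker = ReplicationTracker(["westus", "westeurope"])
event = {"action": "push", "target": {"repository": "samples/helloworld", "tag": "v1"}}
ref = tracker.on_webhook("westus", event)
print(tracker.ready_in("westeurope", ref))  # False: hold the West Europe deployment
tracker.on_webhook("westeurope", event)
print(tracker.fully_replicated(ref))        # True: safe to deploy everywhere
```

In a real service, `ready_in` would gate a per-region deployment queue, which is roughly the "queue a deployment until the replica arrives" behavior discussed later in this post.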

Tag Updates

This is a more interesting conversation, and deserves its own post on tagging schemes. Basically, base image owners should re-use tags as they service core versions. When a patch to version 2.0 of an image is made available, the 2.0 tag should point to the patched image.

However, production deployments should not use stable tags. Every new image to be deployed should use a unique tag. If you have 10 nodes deployed and one of those nodes fails, the cluster or PaaS platform will re-pull the tag it knows should be deployed.

If you re-use tags in production deployments, the new node will pull the most recent version of that tag, while the rest of the nodes are running an older version. If you always use unique tags for deployments, the new node will pull the same, consistent tag that's running on the other nodes.
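The node-replacement scenario can be illustrated with a small simulation. The registry here is just a dictionary from tag to image digest. The tag names (`stable`, `1.0.41`, `1.0.42`) and digests are hypothetical. The point is only to show that a re-pointed tag leaves a cluster running mixed images, while a unique tag does not.

```python
def simulate_node_replacement(tag_for_deploy, new_build_pushed):
    """Deploy 10 nodes, optionally push a new build, then replace one failed node.

    Returns the number of distinct image digests running afterwards.
    """
    registry = {
        "helloworld:stable": "sha256:build-41",  # stable tag, re-pointed on each build
        "helloworld:1.0.41": "sha256:build-41",  # unique tag, never re-pointed
    }
    nodes = [registry[tag_for_deploy] for _ in range(10)]
    if new_build_pushed:
        # The stable tag moves to the new build; the new build also gets its own unique tag.
        registry["helloworld:stable"] = "sha256:build-42"
        registry["helloworld:1.0.42"] = "sha256:build-42"
    nodes[3] = registry[tag_for_deploy]  # the failed node re-pulls its configured tag
    return len(set(nodes))


print(simulate_node_replacement("helloworld:stable", True))  # 2: mixed versions
print(simulate_node_replacement("helloworld:1.0.41", True))  # 1: consistent
```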

Tag Replication

ACR geo-replication will replicate tag updates. But, if you're not aware of the flow, you can also get "magical" results.

Using the example above, stevelasdemos.azurecr.io/samples/helloworld:v1 has been pushed, replicated to all regions, and is running in all regions.

If I push an update to :v1 from West US and issue a docker run/helm deploy to all regions, my local West US deployment will get the new version of :v1. However, none of the other regions will get the updated tag, as they already have that tag running.

If you push :v2 from West US and immediately attempt to deploy :v2 in the other regions, you'll get a "manifest unknown" error until replication completes.

Geo-replication and Webhook Notifications

When we initially designed the geo-replication feature, we recognized this gap and added the regionalized webhooks. We realize this could be easier; ideally, a deployment could be queued until the replicas have arrived. We have more work to do here, and we encourage feedback on what you'd like to see and what your scenarios are.

Better Errors

A customer recently raised a ticket with us, as they were receiving "blob unknown" errors. After digging into the issue, we realized we had a problem with how we cache manifest requests, and with the order in which we sync and cache blobs and manifests.

A while back, we added manifest caching. Customers were using OSS tools that continually polled the registry, asking for updates to a given tag. They were pinging the registry every second, from hundreds of machines. Each request went into the ACR storage infrastructure to recalculate the manifest, only to return the same result. Caching manifest requests sped up those requests and improved overall ACR performance across all customers. While a docker push invalidates the cached manifest (which otherwise has a 1-hour TTL), we realized a tag update replicated across a registry wasn't invalidating the cache. We missed this because we consider it a best practice to never re-use the same tag, but we also know to never say never. So, we're fixing cache invalidation for replicated updates.
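The caching bug is easier to see with a sketch of a TTL manifest cache. This is a hypothetical illustration, not ACR's code. Lookups are served from the cache until the entry expires. Every code path that changes what a tag points to must call `invalidate()`, whether it's a local `docker push` or a replicated tag update. Otherwise, readers keep seeing the old manifest for up to the TTL.

```python
import time


class ManifestCache:
    """Hypothetical TTL cache for "repo:tag" -> manifest lookups (not ACR's code)."""

    def __init__(self, ttl_seconds=3600, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested
        self.entries = {}   # reference -> (manifest, expires_at)

    def get(self, reference, load):
        """Serve from cache while fresh; otherwise call load(reference) and cache it."""
        now = self.clock()
        entry = self.entries.get(reference)
        if entry is not None and entry[1] > now:
            return entry[0]
        manifest = load(reference)
        self.entries[reference] = (manifest, now + self.ttl)
        return manifest

    def invalidate(self, reference):
        """Call on every write path: a local push AND a replicated tag update."""
        self.entries.pop(reference, None)


storage = {"samples/helloworld:v1": "manifest-A"}
loads = []


def load_from_storage(reference):
    loads.append(reference)  # each call stands for an expensive storage round trip
    return storage[reference]


cache = ManifestCache(ttl_seconds=3600)
cache.get("samples/helloworld:v1", load_from_storage)
cache.get("samples/helloworld:v1", load_from_storage)   # served from cache
storage["samples/helloworld:v1"] = "manifest-B"          # tag updated by replication
cache.invalidate("samples/helloworld:v1")                # the step that was missing
print(cache.get("samples/helloworld:v1", load_from_storage), len(loads))  # manifest-B 2
```

Without the `invalidate()` call after the replicated update, the third lookup would still return `manifest-A` until the hour-long TTL expired.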

We will also avoid reporting "blob unknown" when it's really a "manifest unknown" error. In the customer-reported problem, we hadn't yet replicated all the content when they did a pull. Because the cache wasn't invalidated, we found the updated manifest, but the blobs hadn't replicated yet. We will make replication more idempotent, fix the cache TTL, and more accurately report "manifest unknown" instead of "blob unknown".

Preview --> GA

These sorts of quirks are why we have a preview phase for a service. We can't possibly foresee every potential issue without very long private bake times, which can miss a market. Customers get early access to bits that hopefully help them understand what's coming, so they can focus elsewhere while we improve the service.

I just want to end with a thank you for those that help us improve our services, on your behalf. We value every bit of feedback.

Steve and the rest of the ACR team.

Azure Feature - SQL Data Sync

This article is pulled from my website, https://42base13.net/, and can also be read here: https://42base13.net/azure-feature-sql-data-sync/

Introduction

I have been using Azure for many years and have a cloud service that supports my global prayer app, Thoughts and Prayers (WP, W8, iOS, Android). I created it years ago, before Azure Mobile Services existed, and built my own WCF service to use across all platforms. With the new Universal apps, WCF support is not available in Windows Phone 8.1 apps, so I need to move over to Azure Mobile Services to support an update of the app on Windows Phone. The biggest issue is that my original service and database are located in the North Central US region of Azure. This region does not have Azure Mobile Services, so I have to move things to one of the other regions. I had to find a way to move my LIVE database without affecting the 22k+ users of the apps. The answer is the new SQL Data Sync feature that is in preview on Azure.

Azure SQL Data Sync

This new feature in Azure lets you define a Sync Group and have data sync to other SQL Azure instances, or even to on-premises SQL Server databases using a Sync Agent. Since I needed to move my database from the North Central to the East region, I did not need the Sync Agent and just created a Sync Group to do what was needed.

In the SQL Database section of the older Azure portal, you will see an Add Sync option at the bottom of the screen.

![syncgroup]()

In the Sync Group, you designate one database as the Hub (main) database, and the others that get added will sync with the Hub. Start creating the group by giving it a name and the region the Data Sync will live in.

![syncgroup1]()

The next step will be where you define the Hub or main database. You will need to add a username and password for the Hub database. You also have to select how conflicts will be resolved. You can choose one of the following:

Hub Wins - any change written to the Hub database is written to the reference databases, overwriting changes in the same reference database record.

Client Wins - changes written to the hub are overwritten by changes in reference databases, so the last change written to the hub is the one kept and propagated to the other databases.

![syncgroup2]()

The final page of the Data Sync wizard lets you add a reference database. This is the database that you will sync to or from. Pick the database from the drop-down. It needs to be a database on this account, or a Sync Agent for an on-premises database. The username and password for this database are stored, as well as the Sync Direction, which can be one of the following:

Bi-Directional - changes from either the Hub or this database are synced to the other one.

Sync to the Hub - all changes from this database will be synced to the Hub.

Sync from the Hub - all changes from the Hub will be synced to this database.

The Data Sync system uses the Conflict Resolution setting from the previous page to determine which data wins in a conflict. This means you need to be careful, as data can be lost.
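To see how the Sync Direction and Conflict Resolution settings interact, here is a toy Python model of one sync round between the Hub and a single member database. It is a simplified illustration, not how SQL Data Sync is implemented. Rows are dictionaries keyed by primary key, and a conflict means the same key changed on both sides since the last sync. The function and parameter names are hypothetical. The example also shows how the losing side's change is discarded.

```python
def sync_round(hub, member, hub_changes, member_changes,
               direction="bidirectional", policy="hub_wins"):
    """Apply one sync round between the Hub and one member database (toy model).

    hub, member: dicts of primary key -> row, updated in place.
    hub_changes, member_changes: rows changed on each side since the last sync.
    direction: "bidirectional", "to_hub", or "from_hub".
    policy: "hub_wins" or "client_wins"; decides rows changed on both sides.
    """
    send_up = direction in ("bidirectional", "to_hub")
    send_down = direction in ("bidirectional", "from_hub")
    conflicts = hub_changes.keys() & member_changes.keys()

    if send_up:
        for key, row in member_changes.items():
            if key in conflicts and policy == "hub_wins":
                continue  # the member's edit loses and is discarded
            hub[key] = row
    if send_down:
        for key, row in hub_changes.items():
            if key in conflicts and policy == "client_wins":
                continue  # the Hub's edit loses and is discarded
            member[key] = row


# Both sides start identical; row 1 is then edited on both sides, row 2 only on the member.
hub = {1: {"name": "Alice"}, 2: {"name": "Bob"}}
member = {1: {"name": "Alice"}, 2: {"name": "Bob"}}
hub_changes = {1: {"name": "Alicia"}}
member_changes = {1: {"name": "Ally"}, 2: {"name": "Robert"}}
hub.update(hub_changes)
member.update(member_changes)

sync_round(hub, member, hub_changes, member_changes, policy="hub_wins")
print(hub[1], member[1])  # {'name': 'Alicia'} {'name': 'Alicia'}: "Ally" is lost
print(hub[2], member[2])  # {'name': 'Robert'} {'name': 'Robert'}
```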

![syncgroup3]()

Now that the Data Sync is set up, the next step is configuring how often it should perform the sync operation and what should sync. At this point the service is set up, but it is not yet configured to run automatically or on a schedule.

Configuring the Sync

You will now see the Sync (preview) on the top menu of the SQL Database section in Azure. From this menu option, you can now configure the Sync feature to tell it how often and what fields to sync.

The first section is the references. This shows the main Hub database as well as all of the references. On the bottom you will find a button to add another reference database to this sync. For this example, I will keep it as a single database to sync with. This display also shows the direction of the data flow in the sync.

![syncconfig1]()

The next menu option for the Sync feature is Configure. This lets you enable Automatic Sync and set its frequency in minutes, hours, days, or months. Keep in mind that the sync operation is going to take some time, especially if the database is large. Setting the frequency to 1 minute might be too often, but this is a business decision for your data.

![syncconfig2]()

The next step is to set up the Sync Rules. This is where you determine which fields will and will not be synced. They appear as a tree structure with a checkbox for each field. I wanted to show my data fields because one field is not supported by the data sync: it seems the automatic timestamp field on the records is not available for syncing, since its value is generated automatically. This is a mobile services database, and the version field is part of how those are configured.

![syncconfig3]()

The last two menu items are the properties and logs for the data sync. These don't need to be set up for the sync to start working. At the bottom of the screen in this configuration, there are also two more buttons, Sync and Stop. Sync performs a sync operation right away, and Stop stops one in progress.

Conclusion

The new Data Sync feature is a nice way to make sure that copies of your database are updated on a schedule. Data is important for your business and for your customers. There are a few things to keep in mind. The databases that you see are the ones from your own account. It does look like it supports syncing to a different subscription under a single account, but you cannot sync to a totally different account. The data sync is not instant and takes time to perform. Even so, this new preview feature is a great way to move and back up databases and to keep them in sync with other Azure and on-premises databases. I am using it for my personal projects, and it has a lot of potential for many different databases.

Free Event in Buffalo on Becoming an Entrepreneur 101 for Startups and Technology Professionals

Are you a tech professional and always feel that you could do a better job or come up with better ideas than your management? Do you want to start your own business but can't afford the same development tools that you use at work? Are you a Startup and are worried about the costs of development tools and hosting?

Come to our free event in Buffalo, NY on Aug 14th for a full day of training and setting up your free BizSpark accounts as well as Azure for hosting, storage, and more. Dan Stolts and I are hosting this event to share our knowledge and expertise on this topic. And of course to give you great software and Azure cloud computing. Space is limited, so make sure to get your tickets today.

You'll also learn blogging and marketing tips that can help your startup be more successful.

Becoming an Entrepreneur 101 for Startups and Technology Professionals

Free Xamarin Cross-Platform Tools for Students

This article is pulled from my website, https://42base13.net/, and can also be read here: https://42base13.net/free-xamarin-cross-platform-tools-for-students/

I love using Xamarin tools for building cross-platform mobile apps, and they just announced that students can now get the tools for free.

"Today, we are pleased to announce a new student program to help make it easier than ever for students to start building the apps of their dreams with Xamarin."

They also announced programs for educators as well as a new Xamarin Student Ambassador program. Read all about it and apply for this great benefit for students, educators, and schools.

https://blog.xamarin.com/xamarin-for-students/

Installing the New UnityVS Plugin

This article is pulled from my website, https://42base13.net/, and can also be read here: https://42base13.net/installing-the-new-unityvs-plugin/

Introduction

In a previous post, I talked about Microsoft purchasing SyntaxTree, which means the plugin that lets Visual Studio be the editor and debugger for Unity is now available for free. These are instructions on how to set up and use the new plugin.

Installing

There were a few things that needed to be addressed in the plugin before it was released, but now it is available in the Visual Studio Gallery. In Visual Studio 2013, go to Tools -> Extensions and Updates. Go to the Online section and to Visual Studio Gallery. As I write this, the plugin is the top plugin, but if it is not, then just search for Visual Studio 2013 Tools for Unity.

![nuget_unity]()

On to Unity

So at this point, when you start Unity and add a new script file, you will find that the default editor is now... still MonoDevelop. If you had manually changed the editor to Visual Studio in Unity's Preferences, set it back to MonoDevelop. You might see a UnityVS.OpenFile option; that is the one that lets you use Visual Studio 2013, but if you do not see it, don't worry.

When you have a project open, right-click Assets in the Project pane. From this menu, click Import Package. In the Import Package menu, you will see the new option Visual Studio 2013 Tools. This adds a UnityVS folder to your project.

When you open a script file, Visual Studio is now the default editor. You get all of the IntelliSense and other VS features. One thing you will notice is that the button that normally runs your program in debug or release now shows something else. The options for the button are now "Attach to Unity" and "Attach to Unity and Play". The first one just attaches the debugger to Unity, and then you have to play your scene yourself. The second option does the same, but also plays the current scene. Execution will now stop at any breakpoint you set.

Conclusion

UnityVS is a great tool that lets people who use Unity 3D use Visual Studio for editing and debugging. This means developers can use the tool they normally use for all of their scripting work in Unity. MonoDevelop is not a bad tool, but now it is easy and free to use the best coding IDE for your game development: Visual Studio.

Unity 2D: Sprites and Animation

This article is pulled from my website, https://42base13.net/, and can also be read here: https://42base13.net/unity-2d-animation/

Introduction

People have asked me for a series of posts on Unity 2D to help them get started. This tutorial series is a starter's guide to Unity 2D for making simple games. Years ago, I wrote an article for CodeProject.com called Invasion C# Style, in which I took an article on DirectX and C++ and converted it to Managed DirectX. I am going to use the graphics from that game in this tutorial series, since they are pretty decent and work very well for a simple 2D game.

Unity is a cross-platform game engine with a built-in IDE for developing games. It uses C#, JavaScript, and Boo for scripts, and uses MonoDevelop as the built-in code editor. Unity supports publishing to iOS, Android, Windows, BlackBerry 10, OS X, Linux, web browsers, Flash, PlayStation 3, Xbox 360, Windows 8, Windows Phone 8 and Wii U. There has been talk of Unity supporting Xbox One and PlayStation 4 in the future as well. Unity also includes a physics engine and, as of version 4.3, supports making 2D games. I have seen people make 2D games with Unity in 3D mode, but now it can support 2D directly.

Setup / Requirements

Make sure to get the latest version of Unity installed. At the time that I am writing this, it is 4.3.2.

Visual Studio 2012/2013 is used for publishing only. MonoDevelop 4 is installed and set up as the default editor for scripts. You can change this in Unity by going to Edit -> Preferences and changing the External Script Editor under External Tools. You might have to browse for the devenv.exe file to get Visual Studio 2013 to show up, but it is not hard to find.

To publish to the Windows 8 Store or Windows Phone Store, you will need to sign up for a Developer account. You can get an account for only $19 total for access to both stores. If you have an MSDN account, BizSpark, or DreamSpark account, you can get into the store for free.

Let's Go...

Create 2D Project

When Unity is launched, a dialog pops up with two tabs Open Project and Create New Project. Select the Create New Project tab and enter in a name for the project. In the bottom left of the dialog, there is a dropdown for selecting 3D or 2D for the project. Select 2D and then hit the Create button to make the project.

![UnitysetupInvasion]()

The main Unity IDE will then come up with the default 2D layout. The lower left pane is the Project pane, which shows all of the folders and files for the project, including the prefabs, scenes, and scripts. The upper left pane is the Hierarchy pane for the current scene being viewed. The middle pane holds the Scene and Game views, where things can be moved and where the game runs when you are debugging. The far right pane is the Inspector pane, which shows the data for the item selected in the other panes. The panes can be rearranged, and selecting one of the options in the Layout dropdown in the upper right of the window changes their positions. To go back to this layout, select Default.

![unityInvasionPanes]()

A Bit of Organizing

In the Project pane, there is a folder named Assets. This is an actual folder named Assets in the project's folder. One thing I like to do is organize things a bit to make them easier to keep track of. By right-clicking the Assets folder, or the pane to the right that says "This folder is empty", you can use the Create -> Folder menu option to make folders to keep track of things. For this sample, I am going to make four folders: Artwork, Prefabs, Scenes, and Scripts. This creates these folders under the Assets folder in the project's folder.

![unityorganizing]()

Saving the Scene

One thing that helps at this point is to manually save the scene. Select the File -> Save Scene menu option and then select the Scenes folder and save the scene, for this tutorial I named it MainScene. This will create a MainScene.unity file in the Scenes folder.

![unitysavescene]()

One thing to keep in mind working with Unity is to make sure that you have the correct scene open. It is easy to have the wrong one open and you think there is something wrong with your project. Keep this in mind.

Importing Images

Importing images into the project can be done in a few ways. The first way is to find the folder under the project folder where you want the images and just copy them into that folder. For this project, the Artwork folder is being used for images. The images from the CodeProject project can be dropped into the Artwork folder, and when Unity regains focus, they are imported automatically. The second way is to right-click the Artwork folder in the Project pane and use the Import New Asset... menu option. A third way is to drag and drop the images onto the folder in the Project pane, and they will get copied into that folder.

For this part of the project, I copied over the background, the player ship, the three different ufo ships and the explosion images.

![invasionsprites]()

When an image is selected in the Project pane, the Inspector pane will get filled in with details on the image. Selecting the backdrop2 image will now give us the following information.

![invasionbginspector]()

From this information, you can see a few important things. The first is the Texture Type, which defaults to Sprite for all imported images. The Sprite Mode defaults to Single, and Pixels To Units defaults to 100. If you want the image to be larger, you can change the Pixels To Units value to a smaller number. The Sprite Mode will be used when we make animations.
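The relationship between Pixels To Units and on-screen size is a simple division. A sprite's size in world units is its pixel size divided by Pixels To Units, assuming the Transform scale is left at 1. The 70x70 size below matches the ship frames sliced later in this tutorial. The function name is only for illustration.

```python
def world_size(pixels_w, pixels_h, pixels_to_units=100):
    """Sprite size in Unity world units: pixel size divided by Pixels To Units."""
    return pixels_w / pixels_to_units, pixels_h / pixels_to_units


print(world_size(70, 70))      # (0.7, 0.7) at the default of 100
print(world_size(70, 70, 35))  # (2.0, 2.0): a smaller Pixels To Units makes the sprite larger
```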

Adding Images to the Scene

At this point, we have nine images imported into our project and have a project setup for 2D and a scene named MainScene, but we don't have anything being displayed at all. So the next step is to add our background to the scene so that we have something to see when we run the project.

From the Project pane, drag the backdrop2 image from the Artwork folder to the Scene pane, right over the image of the camera. This creates a GameObject named backdrop2 and adds a Sprite Renderer component to it. With the Transform component, the scale, position, and rotation of the background can be changed. On the Scene pane, the mouse scroll wheel zooms the scene in and out to make it easier to see things. The same can be done for anything that will be static in your game, such as a HUD, backgrounds, fixed buildings, etc.

![invasionbg]()

Creating Animations

Games can be made with just static images and no animation, but of course that would not look very professional. Good games have nice animations, transitions, and more. Obviously, we want to make a good game, so we should add some animations. Plus, the sample project I took the graphics from had sprite sheets for the player ship, all of the ufos, and the explosions. Sprite sheets are a classic way to do animation in 2D games, and Unity has built-in support for creating animations from them. For this example, the ship, ufo, and explosion sprites are all sprite sheets.

If a sprite is a sprite sheet, the sprite mode will have to be changed to Multiple and this will add a Sprite Editor button.

![invasionmulti]()

Going into the Sprite Editor, you will see the entire sprite sheet appear in a new window. In the upper left corner, there is a Slice button. Clicking it shows a popup for slicing the sprite into multiple images.

![invasionedit1]()

After changing the Type to Grid, the display will change to show the pixel size of each sprite in the sprite sheet. For the sprites included in the project, all frames are 70x70 pixels. From here, click the Slice button, and it will divide the image into sprites of the specified size.
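Grid slicing divides the sheet into fixed-size cells. The helper below is a hypothetical Python sketch of that arithmetic, not Unity's API. It returns one rectangle per full cell, measured from the bottom-left of the texture. That origin is assumed to match how Unity measures sprite rects. The sketch does not model any trimming or empty-cell handling the Sprite Editor may apply. The 280x70 sheet size is made up for the example.

```python
def grid_slice(sheet_w, sheet_h, cell_w, cell_h):
    """Return (x, y, w, h) rects for every full cell, reading rows from the top.

    y is measured from the bottom of the texture (assumed to match Unity's
    sprite rect convention), so the top row has the largest y.
    """
    cols, rows = sheet_w // cell_w, sheet_h // cell_h  # partial cells are ignored
    rects = []
    for row in range(rows):  # row 0 is the top row of the sheet
        y = sheet_h - (row + 1) * cell_h
        for col in range(cols):
            rects.append((col * cell_w, y, cell_w, cell_h))
    return rects


frames = grid_slice(280, 70, 70, 70)  # a hypothetical 4-frame horizontal strip
print(len(frames), frames[0], frames[-1])  # 4 (0, 0, 70, 70) (210, 0, 70, 70)
```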

![invasionedit2]()

Make sure to click the Apply button in the upper right of this window to apply the slicing to the Sprite itself. I have forgotten the Apply button a few times, which meant slicing the image again. Do this for all of the Sprites to create multiple-frame sprites that can be made into animations.

![invasionedit3]()

At this point, we have a background on the display with the backdrop2 sprite and all of the other sprites are setup as multiple frame sprites. If you expand the sprite in the Project pane, you will actually see all of the frames for the Sprite. These frames are what is going to be used to create the animation.

![invasionframes]()

Now to make the animations. For a given sprite, expand the sprite to see all of the separate frames. Select the frames and then drag them onto the Scene pane. This pops up a dialog to create a new animation for you. For the ship animation, I named it ShipAnimation. In the Hierarchy pane, a new object named ship_0 is created. This game object has the following properties when it is selected. Notice that the Transform and Sprite Renderer are similar to what was seen with the background for the game. A new component, the Animator, is added here; this is the component that animates through all of the frames. In the Scene pane, you will only see the first frame of the animation, but if you click the play button in the upper middle of the Unity IDE, you will see the animation run. In this tutorial, I am not going to go over all of the separate options in each component. This is left as an exercise for the reader.
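Conceptually, a sprite-sheet animation picks a frame based on elapsed time. The function below is an idealized model, not Unity's Animator. At a given samples-per-second rate, the frame index advances once per sample and wraps around for a looping clip. Unity's actual clip timing may differ, for example in how long the final keyframe is held. The 4-frame, 12-samples-per-second values are hypothetical.

```python
def frame_at(time_s, frame_count, samples_per_second):
    """Index of the frame shown at time_s for an idealized looping sprite animation."""
    step = int(time_s * samples_per_second)  # how many samples have elapsed
    return step % frame_count                # wrap around to loop the clip


# A hypothetical 4-frame ship animation sampled at 12 frames per second.
for t in (0.0, 0.1, 0.2, 0.3, 0.4):
    print(f"t={t:.1f}s -> frame {frame_at(t, 4, 12)}")
```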

![invasionbgplayer]()

This lets us create animations for each of the sprite sheets by selecting the frames, dropping them on the scene, and saving the animation file, so we can see all of the sprites animate when we run the project. Each of the sprites shows up in the Hierarchy pane with the name of its first frame. To organize things better, the names in the Hierarchy pane were changed at this point. You will notice that the Artwork folder now has a lot of new files; an Animation folder could have been created to organize this better.

![invasionall]()

Making a Prefab

Prefabs are pre-fabricated objects with a set of properties and components that define how the object should behave. Prefabs can be dynamically created in scripts. To create a Prefab, just drag the Sprite from the Hierarchy pane into the Prefabs folder in the Project pane, and it will create the prefab for you. As you do this, you will notice that game objects in the scene that are prefabs are colored blue in the Hierarchy pane instead of the standard black. For this example, all of the ufos and explosions have been made into prefabs.

![invasionprefabshier]()

![invasionprefabs]()

Since we have made prefabs from all of the ufos and explosions, we can actually remove the reference to them in the Hierarchy pane. Once this is done, you will still see the prefabs in the Prefab folder, but the scene will not show any of the explosions or the ufos. To get them on the screen, we will actually use a script for this.

Adding a C# Script

Scripting can be done in JavaScript, C#, or Boo (a Python-inspired language). This tutorial uses C#, since that is the language I am most familiar with. The first thing we need to do is add a new empty script. Right-click the script folder and choose Create -> C# Script to make a new C# script. I named it MainScript. When it is selected in the Project pane, the entire script is shown in the Inspector pane.

![invasionscript]()

Looking at the script, you will notice that it declares a public class with the same name as the file, and that this class derives from MonoBehaviour. The generated script contains two MonoBehaviour methods, Start and Update. Start is called once, before the first frame update, so it is the place for any setup code. Update is called once per frame, so it is where you display, move, or change whatever needs to change every frame.
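To show when each method runs, here is a minimal sketch of a hypothetical script, `DriftScript` (saved as DriftScript.cs, since Unity expects the file name to match the class name). It logs a message once from Start and nudges its game object to the right every frame in Update. The class name and the `Speed` value are assumptions for illustration only; they are not part of the Invasion project.

```csharp
using UnityEngine;

// Hypothetical example script (not part of the Invasion project).
// Attach it to any game object to see when Start and Update run.
public class DriftScript : MonoBehaviour {
    // Public field, so it can be tweaked in the Inspector (units per second).
    public float Speed = 0.5f;

    // Called once, before the first frame update: setup code goes here.
    void Start () {
        Debug.Log("Start called for " + gameObject.name);
    }

    // Called once per frame: per-frame changes go here.
    void Update () {
        // Multiplying by Time.deltaTime makes the speed per second, not per frame.
        transform.Translate(Speed * Time.deltaTime, 0f, 0f);
    }
}
```

In the Console you should see the Start message once, while the object keeps drifting for as long as the game runs.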

To get the script running in our scene, we need to add a new game object to the Hierarchy pane. To add a new empty game object, use the GameObject -> Create Empty menu option. I renamed it MainScriptGameObject. Now, to tie the script to this game object, drag MainScript from the Project pane onto MainScriptGameObject in the Hierarchy pane. This adds the script as a component of the game object. Another way to add the script is to select the game object, click the Add Component button, and choose Scripts -> MainScript. This second method lists all of the scripts in your project, so you can easily add any of them.

Dynamically Adding Animated Sprites

Up to now, we have a main C# script and just our player ship on the screen with our space background. All of the other animations were changed to prefabs and are not displayed yet. The next step is to edit the script and add the other animations back to the scene dynamically. This is a first step toward putting multiple enemies on the screen from the same prefab, setting up different levels, and generating enemies. To start editing the script, double-click it in the Project pane. This opens your default code editor, which is MonoDevelop unless you have changed it.

The first thing we need to do in the script is create some public member variables so the prefabs are available to the script. Once you save the script in the editor, you will see these variables in the Inspector pane when MainScriptGameObject is selected in the Hierarchy pane.

Many .NET developers now use properties for data binding, but for Unity scripts you have to use public member variables (fields) instead of properties, because properties do not show up in the Inspector at all. In the Project pane, switch to the Prefabs folder and then drag and drop each prefab you want to use onto the matching member variable of the script.
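To make the field-versus-property difference concrete, here is a sketch of a hypothetical script, `InspectorDemo`. Unity serializes fields rather than properties, so the Inspector shows the public field and the private field marked with `[SerializeField]` (an opt-in attribute that lets a private field be edited in the Inspector), but it shows no slot for the auto-property. The script and member names are assumptions made for this illustration.

```csharp
using UnityEngine;

// Hypothetical script illustrating what the Inspector shows.
public class InspectorDemo : MonoBehaviour {
    // Shown in the Inspector: public fields are serialized by Unity.
    public GameObject Ufo1;

    // Also shown: a private field opted in with [SerializeField].
    [SerializeField]
    private GameObject ufo2;

    // NOT shown: properties are not serialized, so there is nothing to drop a prefab on.
    public GameObject Ufo3 { get; set; }

    void Start () {
        // Fields report whatever was dropped on them in the Inspector;
        // the property stays null unless some code sets it.
        Debug.Log("Ufo1 assigned: " + (Ufo1 != null));
        Debug.Log("ufo2 assigned: " + (ufo2 != null));
        Debug.Log("Ufo3 assigned: " + (Ufo3 != null));
    }
}
```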

![invasionscript2]()

After this, whenever these member variables are used in the script, the assigned prefabs are used. This makes it easy to add the prefabs to the scene dynamically. The coordinates could come from an XML file, be hard-coded, or come from any other type of data storage. For this example, we will hard-code the position of each animation.
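As one way to move hard-coded coordinates out of the code, here is a sketch that reads spawn positions from a public `Vector3` array, which Unity shows as an editable list in the Inspector. The script name `UfoSpawner` and its fields are hypothetical, and loading the same values from an XML file is not shown here.

```csharp
using UnityEngine;

// Hypothetical spawner: the positions are data, not code.
public class UfoSpawner : MonoBehaviour {
    // Prefab to copy, assigned in the Inspector.
    public GameObject UfoPrefab;

    // Default positions; once the component exists, values edited in the Inspector take over.
    public Vector3[] SpawnPositions = {
        new Vector3(-1.0f, 1.65f, 0f),
        new Vector3(0f, 1.65f, 0f),
        new Vector3(1.0f, 1.65f, 0f)
    };

    void Start () {
        if (UfoPrefab == null) {
            Debug.LogWarning("UfoSpawner: no prefab assigned in the Inspector.");
            return;
        }
        // One copy of the prefab per listed position, with no rotation.
        foreach (Vector3 position in SpawnPositions) {
            Instantiate(UfoPrefab, position, Quaternion.identity);
        }
    }
}
```

Changing a level layout then means editing the list in the Inspector rather than the script.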

To create an instance of a prefab, use the Instantiate method. It takes three parameters: the prefab to copy, a Vector3 for the position, and a rotation, here Quaternion.identity (no rotation). The following code shows that multiple objects can be created from the same prefab at different locations.

```csharp
using UnityEngine;

public class MainScript : MonoBehaviour {
    // Prefabs, assigned in the Inspector by dragging them from the Prefabs folder
    public GameObject PlayerExplode;
    public GameObject Ufo1;
    public GameObject Ufo2;
    public GameObject Ufo3;
    public GameObject Ufo1Explode;
    public GameObject Ufo2Explode;
    public GameObject Ufo3Explode;

    // Use this for initialization
    void Start () {
        Instantiate(PlayerExplode, new Vector3(0.5f, -1f), Quaternion.identity);

        // One row of UFOs
        Instantiate(Ufo1, new Vector3(-1.0f, 1.65f), Quaternion.identity);
        Instantiate(Ufo2, new Vector3(0f, 1.65f), Quaternion.identity);
        Instantiate(Ufo3, new Vector3(1.0f, 1.65f), Quaternion.identity);

        // A row of UFO explosions below them
        Instantiate(Ufo1Explode, new Vector3(-1.0f, 0.65f), Quaternion.identity);
        Instantiate(Ufo2Explode, new Vector3(0f, 0.65f), Quaternion.identity);
        Instantiate(Ufo3Explode, new Vector3(1.0f, 0.65f), Quaternion.identity);

        // The same prefab can be instantiated more than once
        Instantiate(Ufo1, new Vector3(-2.0f, 1.65f), Quaternion.identity);
        Instantiate(Ufo1, new Vector3(-2.0f, 0.65f), Quaternion.identity);
    }

    // Update is called once per frame
    void Update () {
    }
}
```
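Because Instantiate returns the newly created copy, a script can also keep references to the objects it creates, for example to move or destroy them later. The sketch below assumes a hypothetical `UfoRow` script whose `Count`, `Spacing`, and `RowY` values are chosen only for illustration. The `as GameObject` cast is there because older Unity versions return a plain `Object` from Instantiate; in newer versions it is simply redundant.

```csharp
using System.Collections.Generic;
using UnityEngine;

// Hypothetical script: spawns a centered row of UFOs and remembers them.
public class UfoRow : MonoBehaviour {
    public GameObject UfoPrefab;   // assigned in the Inspector
    public int Count = 5;          // how many UFOs in the row
    public float Spacing = 1.0f;   // horizontal distance between UFOs
    public float RowY = 1.65f;     // height of the row

    // References to the spawned copies, for later use (moving, destroying, ...).
    private List<GameObject> ufos = new List<GameObject>();

    void Start () {
        if (UfoPrefab == null) {
            Debug.LogWarning("UfoRow: no prefab assigned in the Inspector.");
            return;
        }
        // Center the row around x = 0.
        float startX = -Spacing * (Count - 1) / 2f;
        for (int i = 0; i < Count; i++) {
            Vector3 position = new Vector3(startX + i * Spacing, RowY);
            GameObject ufo = Instantiate(UfoPrefab, position, Quaternion.identity) as GameObject;
            ufo.name = "Ufo_" + i;   // readable names in the Hierarchy pane
            ufos.Add(ufo);
        }
        Debug.Log("Spawned " + ufos.Count + " UFOs");
    }
}
```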

Keep in mind that you must save the script in the editor before switching back to Unity to test your changes. Forgetting this has happened to me and to some of my students multiple times; it is easy to do. When you switch back to Unity, it reloads the script, just as it loaded the files when you added them to the folders under the project's folder.

After exiting and restarting Unity, I found that the sprites were not showing up correctly. This was due to the order in which things are drawn. To fix it, the Sprite Renderer's Order in Layer value was changed to 1 for all of the prefabs and the PlayerShip game object, leaving the background at the default value of 0 so the sprites are drawn in front of it.
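The draw order described above corresponds to the Sprite Renderer's Order in Layer setting, which scripts can also set through the `sortingOrder` property. A minimal sketch, assuming a hypothetical helper script placed on each sprite object (or on the prefabs); the value 1 mirrors the choice of drawing sprites above the background's default of 0.

```csharp
using UnityEngine;

// Hypothetical helper: forces this sprite to draw above the background.
public class DrawAboveBackground : MonoBehaviour {
    // The background keeps the default order of 0; higher values draw on top.
    public int OrderInLayer = 1;

    void Awake () {
        SpriteRenderer spriteRenderer = GetComponent<SpriteRenderer>();
        if (spriteRenderer != null) {
            spriteRenderer.sortingOrder = OrderInLayer;
        }
    }
}
```

Setting the value in the Inspector, as done above, is simpler for a handful of objects; the script version mainly helps when many objects are spawned from code.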

Conclusion

At the end of all of these steps, we have a space background scene with some animations placed on the screen in the editor and others added dynamically by a C# script. This is the first step in getting your sprites and animations into Unity 2D to make a game.

This is the start of my tutorial series on Unity 2D. Future posts will add functionality to the game, documenting the steps as the Invasion game is developed in Unity 2D and published. For each article, the source code will be available for download. Feel free to use the code for your own research and as a basis for your own games, but please do not publish my game. Use your creativity to make your own.

Source Download

The original sprites are in the Original Sprites folder, and the full Unity project is in my GitHub project UnityInvasion. Have fun.

A Spirited Debate...

As everyone knows, there is no shortage of passion in the tech industry. Naturally, there are many viewpoints out there, which leads to another thing I always enjoy: a spirited debate. It just so happens that I'm having one right now as a result of the recent WebSphere Loves Windows campaign and benchmarking results. I'd like to take the time to thank those of you who have responded or posted about the work to date. Remember, this is all in the name of saving customers money and helping them achieve optimal ROI.

While I rely on Greg to answer some of the technical questions associated with his research, and he's done so via his blog, I also want to continue to emphasize two points:

· We stand behind the results and the methods used for this testing from end to end. In fact, I recently responded to IBM by offering to pay for independent benchmarking via a third party. I hope to hear back and get that going, yet my phone remains strangely silent.

· Apples-to-apples comparison? Well, we know that everyone's apples look a little different, so check out the tool and see for yourself. That way, you get to run what you consider the fairest possible comparison and see how it works.

Please keep the feedback coming! We look forward to seeing more results from others as they test new configurations.

...And we'll prove it!

Yesterday I blogged about some recent findings regarding both system cost and performance when comparing Windows Server 2008 on an HP Blade Server against AIX on a POWER 570/POWER6 based server. As I stated, the tests showed that WebSphere loved running on Windows…to the tune of 66% cost savings and with better performance.

We encourage customers, third parties and IBM to check out the findings, run the sample apps and let us know what they find. IBM had a thought, but not about the results, just the fine print. Let me be clear: we stand behind our testing methodology and the results it generated. To prove it, we'll pay for the bake-off. Here is our open letter to IBM:

Dear Ron,

I know you are just as interested in saving customers money and improving performance as we are, which is why our recent tests caught your eye. To demonstrate my confidence in the numbers we have produced, I'd like to propose having a third party re-run the benchmark tests and publish the results. To make it easy, I'll put my money where my mouth is and fund it. Are you in?

Hope to hear back soon!

Thanks,

steve

Another Milestone for the BizTalk Product Family

We are excited to announce two things today!

1—As promised, the first public beta of BizTalk Server 2009 is now available for download. This beta release is officially feature complete and on track for a final release in the first half of calendar year 2009. We encourage our customers and partners to check it out today and let us know what they think. BizTalk Server 2009 is a full-featured release and, as always, will be a no-cost upgrade for BizTalk Server customers with active Software Assurance. At a time when we are all trying to do more with less, we're very pleased to be able to offer additional value for existing IT investments in BizTalk. As mentioned before, BizTalk Server 2009 aligns with this year's 2008 wave of updates to Visual Studio, SQL Server and Windows Server. To get the beta and more information, go here. We look forward to your feedback!

We also know that many BizTalk customers are interested in doing more with BizTalk Server as an enterprise service bus (ESB). So today we also released a community technology preview of ESB Guidance 2.0, which delivers updated prescriptive guidance for applying ESB usage patterns, with improved itinerary processing, itinerary modeling using a visual domain-specific language (DSL) tools approach, a pluggable resolver-adapter pack and an enhanced ESB management portal. To get it, go here.

I'm thrilled to see the fourth major version of this product since 2004. Going forward, we will move to a rhythm of BizTalk Server updates every two years; our customers have told us that this is the right, consistent cadence for their infrastructure needs.

2—BizTalk RFID Mobile is officially available today. Previously announced at RFID Journal Live last spring, RFID Mobile extends the RFID capabilities built into the core BizTalk Server platform even farther to the 'edges' of the enterprise. Customers can now get a read on their inventory and orders in real time, enabling people to make better-informed, immediate decisions about the business. We also officially released the RFID Standards Pack today, which supports key RFID industry standards and will enable customers and partners to more seamlessly develop open and flexible solutions for their RFID systems. For more info, go here.

This year has inarguably been one of the most innovative I've seen at Microsoft. We updated the major parts of our core platform with Windows Server 2008, Visual Studio 2008 and SQL Server 2008, and introduced the Azure Services Platform. We think that using these technologies in conjunction with BizTalk Server 2009 will help our customers achieve significant productivity gains in mission-critical areas such as ALM, SOA, connectivity, interoperability and BI.

So, when taken together, what does this all mean? Over the last decade, BizTalk Server has become a comprehensive family of solutions focused on enterprise connectivity for over 10,000 customers and partners worldwide. As we continue to expand the platform, we keep developers' needs at the heart of everything we do, with the goal of making their hard problems simple to solve.
