
Boot Windows adding a local drive pointing to SharePoint Online Folder

Announcing Dev10 Beta 2

Visual Studio 2010 Beta 2 is now available here.

Please start pounding on it and let us know what you think.

Dynamically add commands to your VSIP Package


The set of commands that you can expose in a Visual Studio package is fixed and defined by the .ctc file that you compile and include in your satellite DLL. But what if you need to create more commands after installation? There is a solution to this problem. It requires distributing ctc.exe (the command table compiler) from the VSIP distribution with your VSIP package, but that is the only piece of the VSIP distribution that you will need.

Let's begin. At runtime your package will need to create a new CTC file and have ctc.exe compile it into a cto file. Notice that you are creating a dependency on the VSIP bits by having to invoke ctc.exe. However, with a little extra effort that will be the only dependency.

Normally a CTC file uses the C preprocessor from the Visual C++ compiler, cl.exe. You don't want to include the C++ compiler with your VSIP package, right? The trick is to fool the ctc compiler with a dummy preprocessor. This small batch script is enough:

    @echo off
    echo #line 1 %4
    type %4

Because we are not using the real preprocessor, the generated CTC file cannot use any symbols that are defined in an include file. For example, you cannot use the header file "vsshlids.h"; you need to generate your CTC file without the help of any macro replacement.

So instead of using replacement macros like this:

    CMDS_SECTION MyPackageGUID
      MENUS_BEGIN
      MENUS_END
      NEWGROUPS_BEGIN
        MyGroupGuid:0x0100, guidSHLMainMenu:IDM_VS_MENU_TOOLS,0x600;
      NEWGROUPS_END
      BUTTONS_BEGIN
        MyGroupGuid:0x0100,MyGroupGuid:0x0100,0x0400, OI_NOID,0x00000001,0x00000000,"&My New Command";
      BUTTONS_END
      BITMAPS_BEGIN
      BITMAPS_END
    CMDS_END
    CMDUSED_SECTION
    CMDUSED_END
    CMDPLACEMENT_SECTION
    CMDPLACEMENT_END
    VISIBILITY_SECTION
    VISIBILITY_END
    KEYBINDINGS_SECTION
    KEYBINDINGS_END

you will have to use the real values and generate this:

    CMDS_SECTION { 0x77D93A80, 0x73FC, 0x40F8, { 0x87, 0xDB, 0xAC, 0xD3, 0x48, 0x29, 0x64, 0xB2 } }
      MENUS_BEGIN
      MENUS_END
      NEWGROUPS_BEGIN
        { 0x96119584, 0x8C4B, 0x4910, { 0x92, 0x11, 0x71, 0xD4, 0x8F, 0xA5, 0x9F, 0xAF } }:0x0100, { 0xD309F791, 0x903F, 0x11D0, { 0x9E, 0xFC, 0x00, 0xA0, 0xC9, 0x11, 0x00, 0x4F } }:0x0413,0x600;
      NEWGROUPS_END
      BUTTONS_BEGIN
        { 0x96119584, 0x8C4B, 0x4910, { 0x92, 0x11, 0x71, 0xD4, 0x8F, 0xA5, 0x9F, 0xAF } }:0x0100,{ 0x96119584, 0x8C4B, 0x4910, { 0x92, 0x11, 0x71, 0xD4, 0x8F, 0xA5, 0x9F, 0xAF } }:0x0100,0x0400,{ 0xD309F794, 0x903F, 0x11D0, { 0x9E, 0xFC, 0x00, 0xA0, 0xC9, 0x11, 0x00, 0x4F } }:0x02EA,0x00000001,0x00000000,"&My New Command";
      BUTTONS_END
      BITMAPS_BEGIN
      BITMAPS_END
    CMDS_END
    CMDUSED_SECTION
    CMDUSED_END
    CMDPLACEMENT_SECTION
    CMDPLACEMENT_END
    VISIBILITY_SECTION
    VISIBILITY_END
    KEYBINDINGS_SECTION
    KEYBINDINGS_END

Yes, I know it looks ugly (actually both files look really ugly), but it will be auto-generated. Notice that the GUID of the CMDS_SECTION must match the GUID of your VSIP package. This is a very important detail: the GUID must match so that VS finds a loaded package with that GUID.

Now you are ready to invoke ctc and create your own cto file. Invoke it like this:

    ctc.exe MyCTCFile.ctc MyCTCFile.cto -Ccpp.bat

where MyCTCFile.ctc is your generated ctc file and cpp.bat is the dummy preprocessor.

OK, now you have a cto file, but how do you make VS load it? The answer:

1. Create a new satellite DLL.
2. Register it as the satellite DLL of a nonexistent (dummy) package.

1. To create a new satellite DLL, you can start from the existing satellite DLL of your VSIP package (if your package does not have a satellite DLL, you will have to create an empty one). Then you can use the BeginUpdateResource and UpdateResource functions to create your new satellite DLL from the original one.

    using System;
    using System.Globalization;
    using System.IO;
    using System.Runtime.InteropServices;

    internal sealed class NativeMethods
    {
        [DllImport("kernel32.dll", SetLastError = true)]
        public static extern IntPtr BeginUpdateResource(string pFileName, Int32 bDeleteExistingResources);

        [DllImport("kernel32.dll", SetLastError = true)]
        public static extern Int32 EndUpdateResource(IntPtr hUpdate, Int32 fDiscard);

        [DllImport("kernel32.dll", SetLastError = true)]
        public static extern Int32 UpdateResource(IntPtr hUpdate, string lpType, Int16 lpName, Int16 wLanguage, byte[] lpData, Int32 cbData);
    }

    // Inside a method of your package: start from a copy of the original satellite DLL.
    File.Copy("OriginalSatellite.dll", SatelliteDllFileName);
    IntPtr hUpdate = IntPtr.Zero;
    using (FileStream ctoFile = new FileStream(tempCTO, FileMode.Open, FileAccess.Read))
    {
        using (BinaryReader ctoData = new BinaryReader(ctoFile))
        {
            // Read the whole compiled command table into memory.
            byte[] ctoArray = ctoData.ReadBytes((int)ctoFile.Length);
            // Passing 1 discards the resources already present in the copied DLL.
            hUpdate = NativeMethods.BeginUpdateResource(this.SatelliteDllFileName, 1);
            try
            {
                if (hUpdate.Equals(IntPtr.Zero))
                {
                    Marshal.ThrowExceptionForHR(Marshal.GetHRForLastWin32Error());
                }
                // Store the cto bytes as a CTMENU resource under ResourceId.
                if (NativeMethods.UpdateResource(
                        hUpdate,
                        "CTMENU",
                        (Int16)ResourceId,
                        (short)CultureInfo.CurrentUICulture.LCID,
                        ctoArray,
                        (Int32)ctoArray.Length) != 1)
                {
                    Marshal.ThrowExceptionForHR(Marshal.GetHRForLastWin32Error());
                }
            }
            finally
            {
                if (!hUpdate.Equals(IntPtr.Zero))
                {
                    // Passing 0 writes the pending changes to the file.
                    NativeMethods.EndUpdateResource(hUpdate, 0);
                    hUpdate = IntPtr.Zero;
                }
            }
        }
    }

In this code, the string "OriginalSatellite.dll" represents the location of the satellite DLL of your package. The variable tempCTO is the file name of the cto file created by the invocation of ctc.exe.

2. OK, you have a new satellite DLL, but as I said before, we need a dummy package for it. Basically you need a new GUID. It can be borrowed from a GUID used in the generated ctc file: I am creating a new command group in my ctc file, so I am using that same GUID for the dummy package. Register the dummy package by creating a new key under HKLM\Software\Microsoft\VisualStudio\8.0\Packages:

    [HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\VisualStudio\8.0\Packages\{96119584-8C4B-4910-9211-71D48FA59FAF}]

    [HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\VisualStudio\8.0\Packages\{96119584-8C4B-4910-9211-71D48FA59FAF}\SatelliteDll]
    "DllName"="MyNewSatelliteDllName.dll"
    "Path"="C:\\Path\\To\\My\\New\\SatelliteDll"

As with any other satellite DLL with menu resources, we need to register it under HKLM\Software\Microsoft\VisualStudio\8.0\Menus. Just write a new string entry with the dummy package GUID as the name and ",ResourceId,1" as the value, where ResourceId must match the id that you used in the call to UpdateResource:

    [HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\VisualStudio\8.0\Menus]
    "{96119584-8C4B-4910-9211-71D48FA59FAF}"=",101,1"

Now you must exit devenv.exe and run devenv.exe /setup again so that VS rebuilds the command table.

That's it. Your VSIP package now has more commands attached to it.

Improvements:

- Remove the dependency on ctc.exe. This will require knowledge of the binary representation of a cto file. I suspect that the format is not complicated and is fairly straightforward.
- This procedure requires you to run devenv.exe /setup. Is there a way to update the command table of VS at runtime?
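Since the generated CTC file cannot use macros, every GUID has to be written out as a struct literal. As a rough illustration of that one step, here is a minimal sketch in Python (not the language of the package itself). The helper names `guid_to_ctc_literal` and `command_id` are hypothetical and are not part of any VSIP tool.

```python
import uuid


def guid_to_ctc_literal(guid_text):
    """Format a GUID string as the struct literal used in a macro-free CTC file."""
    guid = uuid.UUID(guid_text)  # accepts the value with or without braces
    data1, data2, data3 = guid.fields[0], guid.fields[1], guid.fields[2]
    # The last eight bytes are written out one by one, in order.
    data4 = ", ".join("0x%02X" % b for b in guid.bytes[8:])
    return "{ 0x%08X, 0x%04X, 0x%04X, { %s } }" % (data1, data2, data3, data4)


def command_id(guid_text, numeric_id):
    """Build a 'guid:id' pair such as the group id used in NEWGROUPS_BEGIN."""
    return "%s:0x%04X" % (guid_to_ctc_literal(guid_text), numeric_id)


if __name__ == "__main__":
    # Package GUID and group GUID taken from the example in this post.
    print("CMDS_SECTION " + guid_to_ctc_literal("77D93A80-73FC-40F8-87DB-ACD3482964B2"))
    print(command_id("{96119584-8C4B-4910-9211-71D48FA59FAF}", 0x0100))
```

The same GUID string can then be reused, in its usual registry form, for the dummy package key, which keeps the ctc file and the registration in sync.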

Going to TechEd

I'll be at TechEd, at the Visual Studio Team Architect demo station.

See you there.

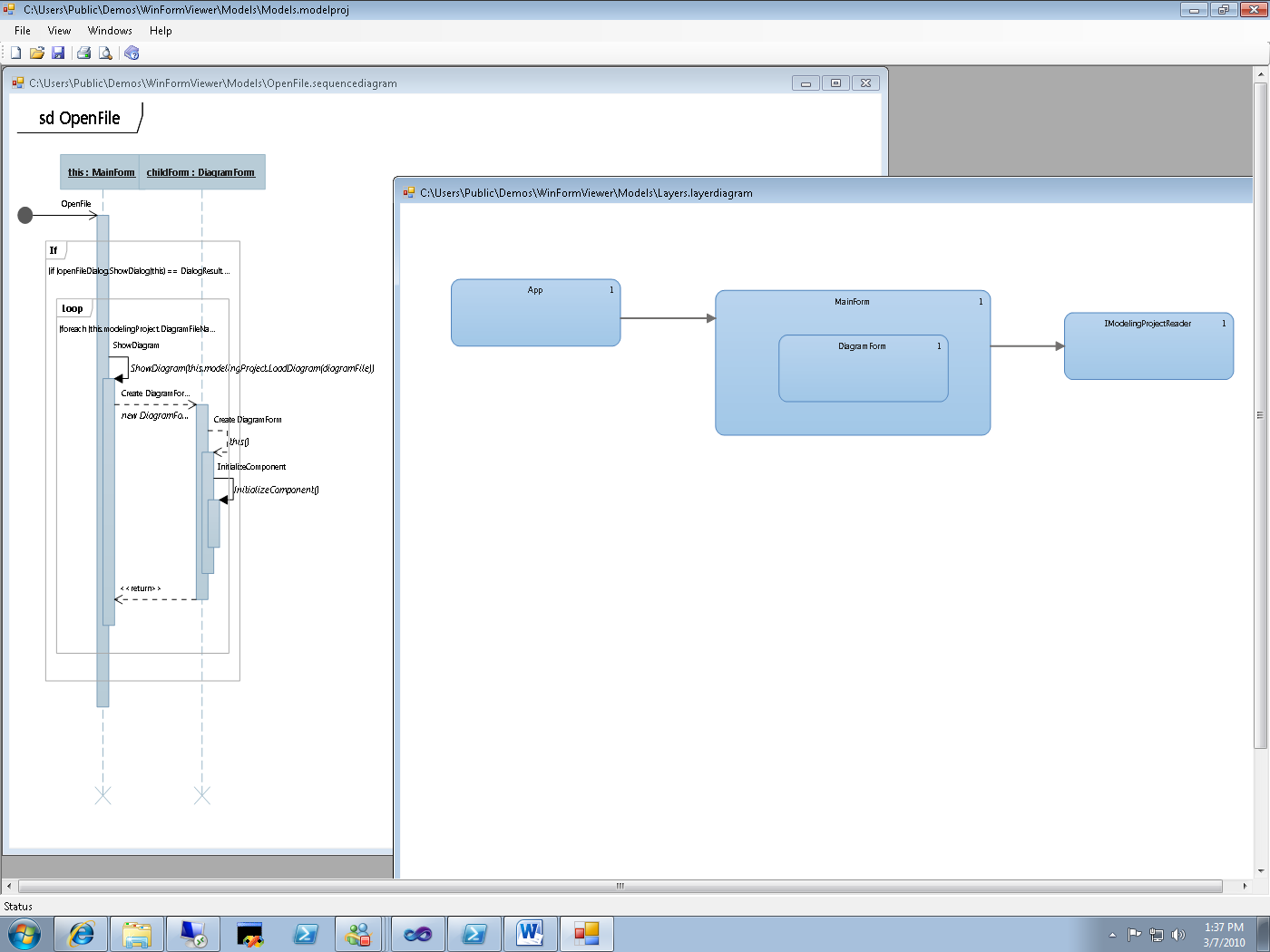

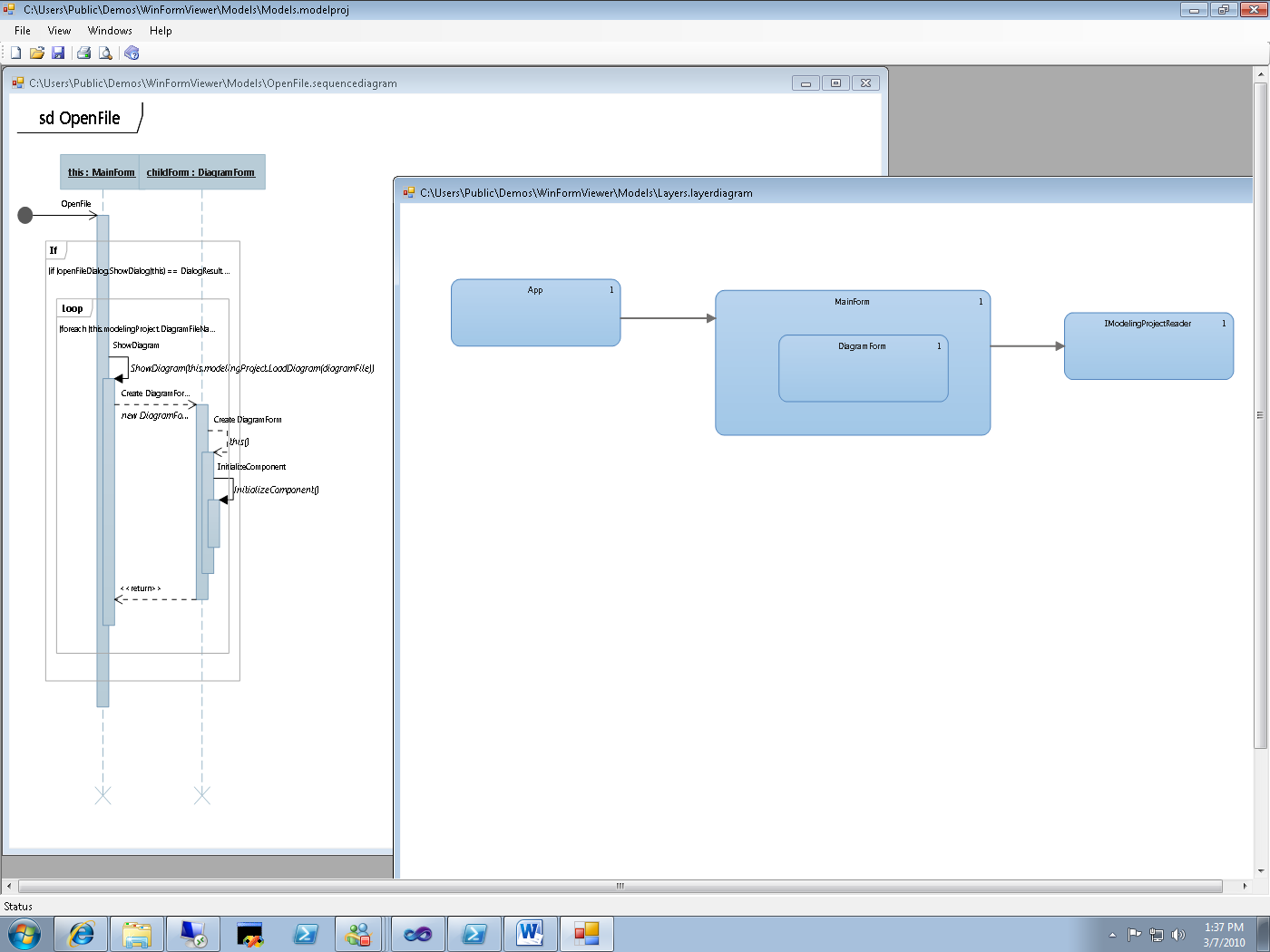

How to: Display modeling diagrams outside Visual Studio

One of Cameron's blog posts showed how to load and save a diagram from within Visual Studio. In this post we'll show how to use the modeling API to load a UML diagram outside Visual Studio, from a small Windows Forms application, and display the diagrams in it.

The modeling API uses the interface IModelingProjectReader to access data in the modeling project.

In order to use this API you need to add the following references to your project:

Microsoft.VisualStudio.ArchitectureTools.Extensibility

Microsoft.VisualStudio.Uml.Interfaces

To load a modeling project use the following code:

using Microsoft.VisualStudio.ArchitectureTools.Extensibility;

using Microsoft.VisualStudio.ArchitectureTools.Extensibility.Presentation;

this.modelingProject = ModelingProject.LoadReadOnly(openFileDialog.FileName);

Once the project is loaded, we need to get the list of diagrams in that project:

foreach(var diagramFile in this.modelingProject.DiagramFileNames)

{

ShowDiagram(this.modelingProject.LoadDiagram(diagramFile));

}

Now we are almost ready to get the diagram, but first we need to add the following references to our project:

Microsoft.VisualStudio.Modeling.Sdk.10.0

Microsoft.VisualStudio.Modeling.Sdk.Diagrams.10.0

LoadDiagram from IModelingProjectReader returns an IDiagram instance. From this instance you need to get the shape element for the DSL diagram. To do this, use GetObject:

using Microsoft.VisualStudio.ArchitectureTools.Extensibility.Presentation;

using DslDiagrams = Microsoft.VisualStudio.Modeling.Diagrams;

void ShowDiagram (IDiagram diagram)

{

var dslDiagram = diagram.GetObject<DslDiagrams::Diagram>();

Once we have the dslDiagram we can use the CreateMetafile from the DSL API to get the image out of the diagram:

// ToList() requires "using System.Linq;"

var selectedShapes = new []

{ diagram.GetObject<DslDiagrams::PresentationElement>() }.ToList();

var image = dslDiagram.CreateMetafile(selectedShapes);

}

That's it. I zipped the sample as WinFormsViewer.zip. The sample code includes a modeling project, and this is what the viewer looks like:

Hope you find this useful.

How to enable TV ratings under parental controls in MCE


If you don't have TV Ratings under your parental settings in Windows Media Center, it is most likely because the COM object that implements that functionality is not installed with your TV tuner driver. In the case of Hauppauge, if you install the WHQL driver you will lose TV Ratings; if you install the retail driver you will get TV Ratings. The reason is that the retail driver includes the file hcwxds.dll. For NVIDIA, that DLL is NVTVRAT.DLL, and for Emuzed it is EZRATING.DLL.

Regards,

Oscar Calvo

How to: Run Expression Encoder 3 under PowerShell remoting

James wanted me to explain the steps I use to enable Convert-Media under PowerShell remoting.

First a disclaimer: running these steps will lower the security settings of your PC. Use with caution.

Here they are:

On your encoding server (mine is called tv-server):

Enable-PSRemoting -force # Enables remoting

set-item WSMan:\localhost\Client\TrustedHosts * -force # I am not running a domain controller, so my server needs to trust everybody

set-item wsman:localhost\Shell\MaxMemoryPerShellMB 1024 -force # The default allowed memory is very low; we need to bump it so that Convert-Media has room to work.

Enable-WSManCredSSP -Role Server -force # I need the server to have access to my Windows Home Server, so it needs to authenticate using credential delegation

In your client:

function AllowDelegation($policyName)

{

$keyPath = "HKLM:\SOFTWARE\Policies\Microsoft\Windows\CredentialsDelegation\"+$policyName

if (test-path $keyPath)

{

rmdir $keyPath -force -rec

}

$key = mkdir $keyPath

$key.SetValue("1","WSMAN/*");

}

AllowDelegation "AllowDefaultCredentials"

AllowDelegation "AllowFreshCredentials"

Enable-WSManCredSSP -Role Client -DelegateComputer * -force

To remote into your server, run:

Enter-PSSession <ServerName> -cred <UserName>

I have used "*" (star) in two places. That's too broad; if you are already using a DNS suffix, I would recommend using it and changing "*" to "*.mydomain.com".

In that case you need to refer to your server as:

Enter-PSSession <ServerName>.mydomain.com -cred <UserName>

Once inside, load the Encoder module as usual and execute Convert-Media.

You can batch background encoding jobs using the instructions from the PowerShell team.

Enjoy,

Intro

Hello. After working with DevDiv and p&p as a vendor for the past 7 years (wow, I can't believe it's been so long), I finally joined Microsoft as a full-time employee. I joined the VS Team Architect team as an SDE two months ago, just in time for the Visual Studio 2008 release. I am really excited about the features that my team is delivering, but even more so about the ones to come.

Joining Microsoft has been a huge change for me, both professionally and personally (I relocated from Costa Rica), and it's been an incredibly busy year, so forgive me if I haven't answered your CAB and/or GAT questions (I know there are a couple here and there). Meanwhile, I moved the CAB and GAT articles from my previous employer's web site to the MSDN blog site.

Regards,

Oscar Calvo

Not really the last vsvars32 you will ever need...but close

I took Peter's advice, and for a while I've been using Chris's vsvars32. However, it had some drawbacks:

1. It did not work from a 64-bit PowerShell.

2. For some reason Get-Batchfile did not work for me; I had to modify it to call "echo" explicitly.

I also added a helper, VsInstallDir, and an alias for devenv.

Enjoy,

Oscar

function global:VsInstallDir($version="10.0")

{

$VSKey = $null

if (test-path HKLM:SOFTWARE\Wow6432Node\Microsoft\VisualStudio\$version)

{

$VSKey = get-itemproperty HKLM:SOFTWARE\Wow6432Node\Microsoft\VisualStudio\$version

}

else

{

if (test-path HKLM:SOFTWARE\Microsoft\VisualStudio\$version)

{

$VSKey = get-itemproperty HKLM:SOFTWARE\Microsoft\VisualStudio\$version

}

}

if ($VSKey -eq $null)

{

throw "Visual Studio not installed"

}

[System.IO.Path]::GetDirectoryName($VsKey.InstallDir)

}

function Get-Batchfile ($file) {

$cmd = "echo off & `"$file`" & set"

cmd /c $cmd | Foreach-Object {

$p, $v = $_.split('=', 2) # split at the first '=' only, so values that contain '=' stay intact

Set-Item -path env:$p -value $v

}

}

function global:VsVars32($version="10.0")

{

$VsInstallPath = VsInstallDir($version)

$VsToolsDir = [System.IO.Path]::GetDirectoryName($VsInstallPath)

$VsToolsDir = [System.IO.Path]::Combine($VsToolsDir, "Tools")

$BatchFile = [System.IO.Path]::Combine($VsToolsDir, "vsvars32.bat")

Get-Batchfile $BatchFile

[System.Console]::Title = "Visual Studio shell"

}

set-alias devenv ((VsInstallDir)+"\devenv.exe") -scope global
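The core of Get-Batchfile is turning the NAME=VALUE lines printed by `set` into environment variables. As a language-neutral illustration of that parsing step, here is a small Python sketch; it is not part of the original script, and `parse_set_output` is a hypothetical helper name. It splits each line at the first `=` only, so values that themselves contain `=` survive intact.

```python
def parse_set_output(lines):
    """Turn the NAME=VALUE lines printed by cmd's `set` into a dictionary."""
    env = {}
    for line in lines:
        # partition() splits at the first '=' only; the rest belongs to the value.
        name, sep, value = line.rstrip("\r\n").partition("=")
        if sep and name:  # skip lines without '=' or with an empty name
            env[name] = value
    return env


if __name__ == "__main__":
    sample = ["INCLUDE=C:\\VC\\include\r\n", "OPTS=/D=1 /W4\r\n", "not a variable\r\n"]
    for name, value in parse_set_output(sample).items():
        print(name, "->", value)
```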

Notes on the Synthesis of Form

I have been reading Christopher Alexander's "Notes on the Synthesis of Form". If you are into "Test Driven Design", software design and agile software development, you will find this book, written by a building architect 45 years ago, fascinating.

The author explains why some unselfconscious cultures can come up with better home designs than the developed (or self-conscious) cultures. He also explains why a design can only be created using tests or "proofs".

From there you can extrapolate to software development and see, with a completely new perspective, how the agile process approaches "design".

In my personal case, it allowed me to see why principles like YAGNI and KISS are fundamental to the process of design.

Even if you are not into agile, I definitely recommend this book; it's a short read.

Powershell

Like Peter, I have become infected with PowerShell, and to make it worse, there is console mode editor support. Sweet!!!

I have been using it for a week, and I am not going back to cmd.exe ever!!

Here is a great replacement for vsvars32.bat made by Brad Wilson.

The Evil EnvDTE namespace

If you have ever written an Add In or a Package for Visual Studio, you most likely recognize the interfaces EnvDTE.Solution, EnvDTE.Project, EnvDTE.ProjectItem, EnvDTE80.SolutionFolder and EnvDTE.DTE, and you may ask: what's so wrong with these interfaces?

To answer this question we must look at the history of Visual Studio and Visual Basic. One of the many features introduced with the release of Visual Basic 4.0 in 1995 (https://en.wikipedia.org/wiki/Visual_basic) was the ability to create "Add Ins" that ran inside the VB IDE or inside the Office applications.

The idea was that, using a set of COM interfaces, one could easily extend the running host with more functionality. Nowadays this sounds fairly easy to do, but that's because today we have Java, .NET and many design patterns that are specifically designed to do this (check out the Composite Application Block from P&P). But in those days, Microsoft had unmanaged C++ and VB4 or VB5.

I can understand that if Microsoft had chosen C++ as the default language for writing those Add Ins, the design would probably have been much better, but instead they went with VB for a number of reasons (the most important one being the cost of development). So if VB was to be the language for developing these Add Ins, the COM interfaces used for extending the host MUST be VB-compatible.

And being VB-compatible meant a lot of constraints right there. Remember, in those days VB did not have inheritance, it barely did encapsulation, and there was no interface concept in the language until VB6.

So VB lacked some pretty fundamental elements in the language. It also added some elements that we may call "detrimental" to the language; yes, you're right, I am talking about default properties. You know, the language feature that allows you to write Value = recordSet["Name"] instead of value = RecordSet.Columns["Name"].Value. I know that the first looks "shorter" than the latter, but the latter is explicit about the underlying data structure. However, that's another topic.

So, the first Add Ins for VB and Office (called VBA) were designed with these constraints, and in fact Microsoft was right (at that particular time). They were a success in terms of a community writing new Add Ins every day.

Then 2001 came and .NET was born. In 2002 Visual Studio 7.0 was released. With this, VB acquired new powers: suddenly it was a full object-oriented language, and we were also given its big brother, C#.

However, if you look at the way you extend Visual Studio 7.0, and for that matter Visual Studio 8.0 and probably Orcas also, we continue to use the same old interfaces that were great for a "detrimental" scripting language such as VB4, 5 and 6, but not so great for VB.NET, C# and C++.

Let's see an example of what I mean. Let's take the EnvDTE.Project interface and analyze it.

![Photobucket - Video and Image Hosting]()

The EnvDTE.Project interface is provided by a Project in the currently open Solution in Visual Studio (I am using the definition of Project broadly, because a Solution Folder is also a Project in Visual Studio; we'll see why later on, keep reading).

The figure shows elements in the Solution Explorer in Visual Studio. Every single object in the solution tree must implement the IVsHierarchy interface, and those nodes that are projects must also implement the IVsProject interface. One may think that most objects that are "Projects" in the solution tree provide an EnvDTE.Project interface. I say most because the reality is that it's up to the designer of the project system whether to provide EnvDTE.Project or not.

The reality is that EnvDTE.Project (and for that matter almost every interface in the EnvDTE namespace) is an Automation interface. That is, an interface designed to be used by a VB6 client. So, do you see where I am going with this?

![Photobucket - Video and Image Hosting]()

Maybe this picture helps to clear things up. When you have a Project node in the Solution Explorer, this project node must (yes, must) implement the IVsHierarchy and IVsProject interfaces. However, it is not the same story with EnvDTE.Project: this interface is provided by asking the IVsHierarchy for the "Extension Object". The designer of the project system may answer NULL to that request.

It is completely valid for a FooBarProject system not to have an automation object.

The reality is that with the languages and tools that we have today, there's little need for "automation" interfaces. I am not saying that we should not use them; on the contrary, they can be very useful in certain scenarios, but one needs to understand what's going on behind the scenes. I wish somebody had told me all of this when Daniel (https://weblogs.asp.net/cazzu/) and I were designing the concept of Bound References in GAX.

One example of when you should NOT use the EnvDTE interfaces is when you are trying to walk the Solution Tree. Using the IVsHierarchy interface is much easier and a lot more robust than trying to use a bunch of automation objects.

Another problem with automation objects is that not every project system implements the same interfaces in the same way. One example is the get_Files method: C# implements it using a zero-based index, but the VB project system implements it using a one-based index.

If you are trying to write a framework on top of Visual Studio, try to avoid going through the automation interfaces; see if there is a service in VS that can give you what you want. For example, instead of using DTE.Selection, use the IVsMonitorSelection service. If you need to store a Project pointer, persist the GUID of the project, not the path or canonical name.

All of this is to say that the EnvDTE interfaces are there mostly for historical reasons and to support old add-ins and extensions. One must realize that they are second-class citizens in the Visual Studio world of extensibility, and that when writing extensions to VS one must talk to the real underlying objects being extended.

I wish somebody had told me this before.


Unit testing and the Scientific Method

Has it ever happened to you that, after investing time in a bug fix, you realized that the fix does not actually fix the bug?

Or even worse, new bugs appear as a result of your change to the system in production.

This is similar to what happens when scientists assume a hypothesis to be true without actually running an experiment to confirm it. This is something that no good scientist would ever do; however, it happens in the software engineering field more often than not.

If you compare the Scientific Method to the process followed to perform a bug fix, you will find a lot of similarities. I will go as far as to say that they are the same process.

Let me elaborate. The Scientific Method defines the following major tasks:

1. Define the question

2. Gather information and resources (observe)

3. Form hypothesis

4. Perform experiment and collect data

5. Analyze data

6. Interpret data and draw conclusions that serve as a starting point for new hypothesis

7. Publish results

8. Retest (frequently done by other scientists)

Let's see how they apply to software development and in particular to the process of "bug fixing".

1. Define the question

For software development the question is simple: "Is the software under development done-done?"

By done-done, I mean: Does it comply with user requirements? Does it have bugs? Is it shippable to customers?

Most of the time (if not always), the answer is no. Software will always have bugs and new feature requests from customers.

2. Gather information and resources (observe)

This step is formalized in software development by testing and filing bugs against the software under development. A good bug report contains the right information and no more. Adding more data just creates noise and makes the bug harder to read and understand. The report helps the triage team decide whether the team wants to move to step 3.

3. Form hypothesis

This is the step in software development when a developer gets assigned a bug. The next step for the developer is to "guess" what's going on. I say "guess" because, by just reading the bug, most developers will not know for a fact what is going on (unless they were the ones who filed the bug).

The developer may use the debugger to understand and to gather more data about the bug; this could already be done during step 2.

A failing unit test is a great way to formulate a hypothesis that describes the bug being fixed. If you find yourself in a position where it is impossible to write a unit test that expresses the bug, then you need to step back and invest in building the right infrastructure to be able to formulate hypotheses against your system under development. This is a fancy way of saying that you need to build infrastructure that lets you write unit tests.

Another sign of a problem in the process is when extensive debugging sessions (lasting more than 10 minutes) are required to formulate a hypothesis about the problem. But this is an entire subject for a different blog post.

Let me emphasize the point about investing in test infrastructure. If you compare the kind of investment made by the scientific community in "experimentation infrastructure", we in the software development community fall short by a long shot. When has a software project ever needed to build a particle collider or put a huge telescope in orbit just to be able to "test" a hypothesis?
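To make the idea of "a failing unit test as a hypothesis" concrete, here is a minimal sketch in Python. Everything in it is hypothetical and invented for illustration: the bug (a word counter that miscounts consecutive spaces), the function names, and the `run_experiment` helper. The test states the hypothesis; running it against the current code and against the candidate fix is the experiment.

```python
import unittest


def buggy_word_count(text):
    # Hypothetical code under test: splitting on a single space
    # turns consecutive spaces into empty "words".
    return len(text.split(" "))


def fixed_word_count(text):
    # Candidate fix: split on any run of whitespace.
    return len(text.split())


def make_hypothesis(word_count):
    """Build a test case that states the hypothesis about the bug."""
    class WordCountHypothesis(unittest.TestCase):
        def test_consecutive_spaces_are_not_words(self):
            self.assertEqual(word_count("unit  testing"), 2)
    return WordCountHypothesis


def run_experiment(word_count):
    """Run the hypothesis against an implementation; True means the test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(make_hypothesis(word_count))
    result = unittest.TestResult()
    suite.run(result)
    return result.wasSuccessful()


if __name__ == "__main__":
    print("before the fix:", run_experiment(buggy_word_count))
    print("after the fix:", run_experiment(fixed_word_count))
```

The test failing before the fix is what confirms the hypothesis about the cause; the same test passing afterwards is the evidence that the fix addresses it.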

4. Perform experiment and collect data, and 5. Analyze data

These steps are together because, unlike scientists, developers have it easy. They always get to use computers to run unit tests and integration tests, and most tools collect enough data about the system under test.

I have to note that, just like scientists, sometimes developers also need to design and implement experiments. Typical examples of this are:

a. Design a stress test framework.

b. Implement your own testing framework from scratch, like XUnit or NUnit, if your development system lacks one.

6. Interpret data and draw conclusions that serve as a starting point for new hypothesis

This is the step where important questions need to be asked, like: Are there more cases that our hypothesis is not covering? Why was this bug not discovered earlier? Is this a regression?

Do we have invalid hypotheses (unit tests that do not actually test the system) in our system?

Once the developer confirms that the unit test is in fact valid, she must proceed to perform the actual fix for the bug, which in science is equivalent to formulating a new theory or model. In the case of software development we need to find "the simplest solution that could possibly work, but no simpler".

Scientists and developers both love finding a solution that is simple, small and complete. Both groups of professionals are also very suspicious of a solution that is complex and large. Some scientists will go as far as to say that these kinds of solutions are really an intermediate step toward the final and elegant solution. I tend to agree with that.

But developers are also engineers, and we need to balance cost and time to be able to ship. Shipping is something that does not concern scientists (although there have been some famous races between scientists to see who could publish first).

7. Publish results

This is simple; just hit the button to do the submission in your source control system.

8. Retest.

In the scientific community a new theory, model or result is published with the express intent of being re-validated by other members of the community. It is enough for a single person in the community to find the result "invalid" to restart the process back at step 2. Software development is the same: once a bug is declared fixed, another member of the team (not the same developer) must retest the bug and validate the fix.

In software development, part of this step is automated if you have a gated check-in system.

Developers hate it when a bug fix is re-opened. It means that the formulated hypothesis was incorrect and that the unit test created (if any) is not testing the right aspect of the system under development.

If the bug is actually fixed, then the process goes back to step 1, which is to ask: Are we done-done?

Conclusion

I focused the comparison on the process of bug fixing, but it also applies to the process of developing user stories. And with that, almost the entire software development process is covered.

I would like to emphasize step 3. Speaking as a software developer: when someone asks you why a bug happens, do not guess; instead, formulate a hypothesis and run an experiment, then answer the question. That's what a sane scientist would do. Even worse, do not check in code "hoping" that it will fix a bug. That's like a scientist publishing a new theory without experimentation. Any scientist who does that will not only be ridiculed by her peers, she can forget about making a career as a scientist.

In short, engineers who do software development for a living should act more like scientists, because, like it or not, we are doing the same job scientists do.

Visual Studio 10 CTP

There are tons of new cool features in Visual Studio Team Architect 10.

- New UML Designers

- Architecture Explorer

- Layer Designer

Please download it and give us feedback at the Dev10 CTP Feedback page.

If you have questions about the UML designers use the forums, we will be watching.

A new beginning....

Well, as you know, I left Microsoft at the beginning of October 2017. Although I could say many things, under a "non-aggression" agreement I am obliged to keep quiet. Since I have my own opinions, and Spain is still a country with a certain freedom of expression, I will keep telling you how this "new model" thing goes. Very soon you will know where I am starting over; it promises to be very interesting and "challenging", and I will no doubt be able to work at ease. I plan to be very active on this blog, so stay tuned. Who knows, maybe now I can become an MVP :-D

Let's get this straight... How to buy and activate your Microsoft Online BPOS subscription

Since there are still partners and customers asking me how to buy and how to activate BPOS, here are some slides to make it clear.... I hope :-D

BPOS Purchase Example + Screenshots.pdf

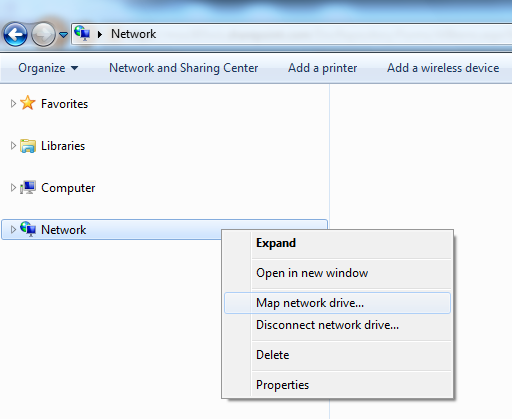

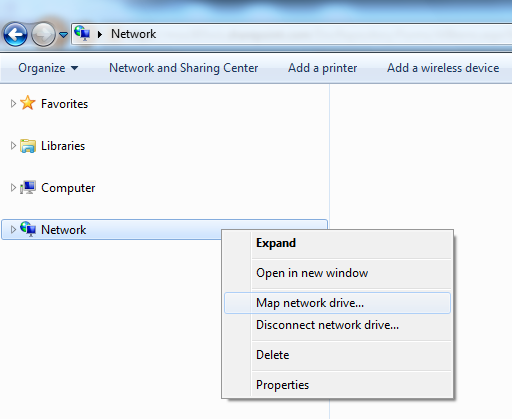

Access Office 365 Document Library from Windows Explorer

In order to map the files in this Document Library in Windows Explorer the trick is to:

- Copy the URL of the Document Library to the clipboard

- Open Windows Explorer

- Right-click "Network" and click "Map Network Drive" to display the dialog "Map Network Drive"

- In the dialog click "Connect to a Web site that you can use to store your documents and pictures" to display the dialog "Add Network Location"

- In the dialog click "Next" and then "Next" again to display a new dialog

- In the textbox "Internet or network address" paste the copied URL of the Document Library. In my example the URL is https://microsoft-my.sharepoint.com/personal/oscarmh_microsoft_com/

- Finish the dialogs

You should now be able to access the Document Library from your Windows Explorer.

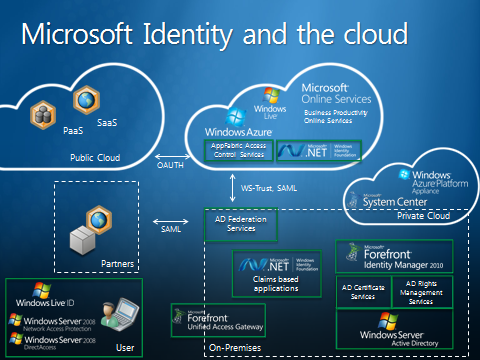

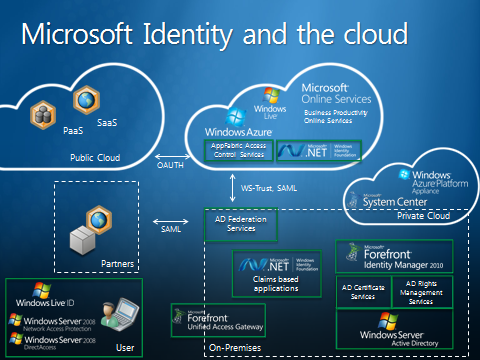

ADFS HELPPPPP!!!

One of the critical and important points in Office 365 is ADFS, just as rich coexistence with Exchange 2010 is going to be. I am trying to find information on how to do a correct installation; here is something I found:

https://blogs.msdn.com/b/plankytronixx/archive/2011/01/24/video-screencast-complete-setup-details-for-federated-identity-access-from-on-premise-ad-to-office-365.aspx

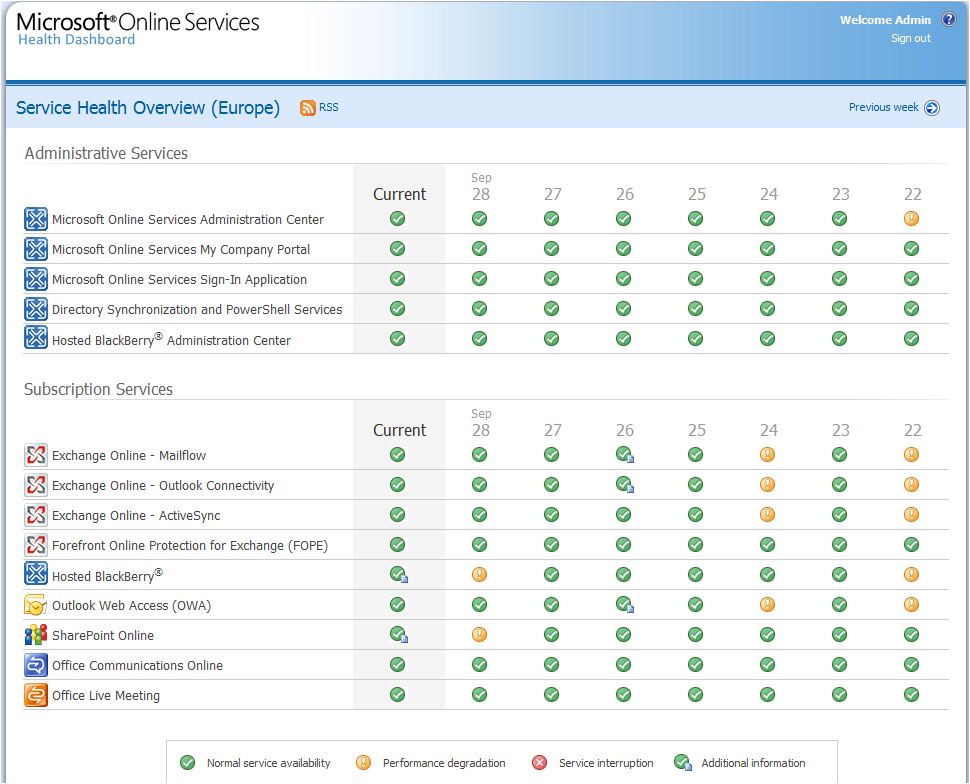

Welcome to the MOSHD...

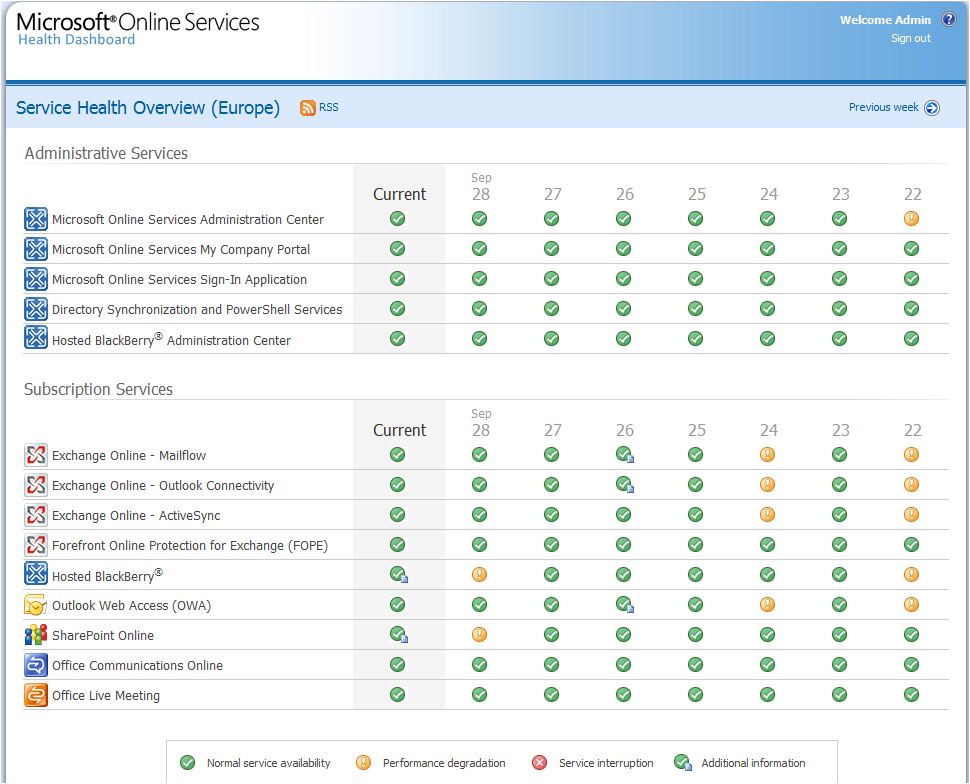

We do love our acronyms :-) MOSHD is the brand-new Microsoft Online Services Health Dashboard.... Under this suggestive title we get the real-time status of the Online services plus 35 days of history. To access it, we just go to our page, which depends on where our datacenter is located:

It requires a login/password, which is the same as the admin account we have in our MOAC.

This is what it looks like.

Ahem, if we click on the little yellow icons we will see the explanation of the degradation (!), if there has been one... one more step toward transparency. It has a lot more information; let's wait and see how it evolves.

Well, I'm going to copy Chema: evil regards.

Boot Windows adding a local drive pointing to SharePoint Online Folder

So, in my case I need to create a .bat file with the following command:

net use x: \\microsoft-my.sharepoint.com@SSL\DavWWWRoot\personal\oscarmh_microsoft_com /persistent:yes
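The command above follows a regular pattern: the host of the Document Library URL, then @SSL\DavWWWRoot, then the URL path with backslashes. As a rough sketch of that pattern (the function names are made up for illustration, and it assumes an https URL on the default port with no spaces in the path), the conversion could look like this in Python:

```python
from urllib.parse import urlsplit, unquote


def webdav_unc_path(url: str) -> str:
    """Turn an https Document Library URL into a WebDAV UNC path.

    Illustrative helper, not part of any Microsoft tool. Assumes the
    default HTTPS port; a custom port is not handled here.
    """
    parts = urlsplit(url)
    if parts.scheme != "https" or not parts.hostname:
        raise ValueError("expected an https:// URL with a host name")
    # Drop empty segments (leading/trailing slashes) and decode %XX escapes.
    segments = [unquote(s) for s in parts.path.split("/") if s]
    unc = "\\\\" + parts.hostname + "@SSL\\DavWWWRoot"
    for segment in segments:
        unc += "\\" + segment
    return unc


def net_use_command(drive_letter: str, url: str) -> str:
    """Build the net use line from the post for a given drive letter."""
    return f"net use {drive_letter}: {webdav_unc_path(url)} /persistent:yes"


if __name__ == "__main__":
    example = "https://microsoft-my.sharepoint.com/personal/oscarmh_microsoft_com/"
    print(net_use_command("x", example))
```

If the path contains spaces, the UNC path would need to be wrapped in double quotes before it is passed to net use; the sketch leaves that out.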

To avoid hard-coding the alias, we can use the %username% variable (this assumes your Windows user name matches your alias), as in net use x: \\microsoft-my.sharepoint.com@SSL\DavWWWRoot\personal\%username%_microsoft_com /persistent:yes

The beauty of this is that I can access the hidden part of my OneDrive.

Then open regedit.

Navigate to the "HKEY_LOCAL_MACHINE\Software\Microsoft\Windows\CurrentVersion\Policies\Explorer\Run" key. Right-click the whitespace in the center details pane and select "New" then "String Value." Enter "map drive" for the name and enter "mapdrive.bat" for the value. Click "OK" to save your settings.

Read more : https://www.ehow.com/how_6835491_map-drive-windows-startup.html
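As an alternative to clicking through regedit, the same value could be written as a .reg file and imported. The sketch below only builds the text of such a file; the function names and the C:\Scripts\mapdrive.bat path are assumptions for illustration (the post gives just "mapdrive.bat", which would have to be findable on the PATH).

```python
# Key named in the post; values under it are run when a user logs on.
RUN_KEY = r"HKEY_LOCAL_MACHINE\Software\Microsoft\Windows\CurrentVersion\Policies\Explorer\Run"


def reg_escape(value: str) -> str:
    """Escape backslashes and double quotes as .reg string values require."""
    return value.replace("\\", "\\\\").replace('"', '\\"')


def run_entry_reg(name: str, command: str) -> str:
    """Return the text of a .reg file that adds one string value to RUN_KEY."""
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        f"[{RUN_KEY}]",
        f'"{reg_escape(name)}"="{reg_escape(command)}"',
        "",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical location of the batch file; adjust to where it really lives.
    print(run_entry_reg("map drive", r"C:\Scripts\mapdrive.bat"))
```

Saving the printed text as a .reg file and importing it requires administrator rights, since the key lives under HKEY_LOCAL_MACHINE.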

