
Identifying Cell by Cell Computation Mode in MDX queries

Password writeback from Azure AD to Active Directory and Self-Service Password Reset

Until now, the DirSync synchronization tool made it possible to synchronize passwords from the on-premises Active Directory to Azure AD. (I described that process in the article "Password synchronization to Office 365 deep dive".) But what if a user forgot their password? An administrator had to step in, because the user had no way to reset the password. The administrator reset it, and DirSync synchronized it to Office 365. Password writeback and the Self-Service Password Reset portal solve this problem. I will try to introduce both features in this article.

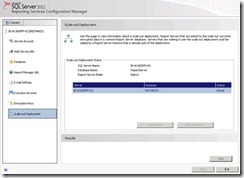

How does it work?

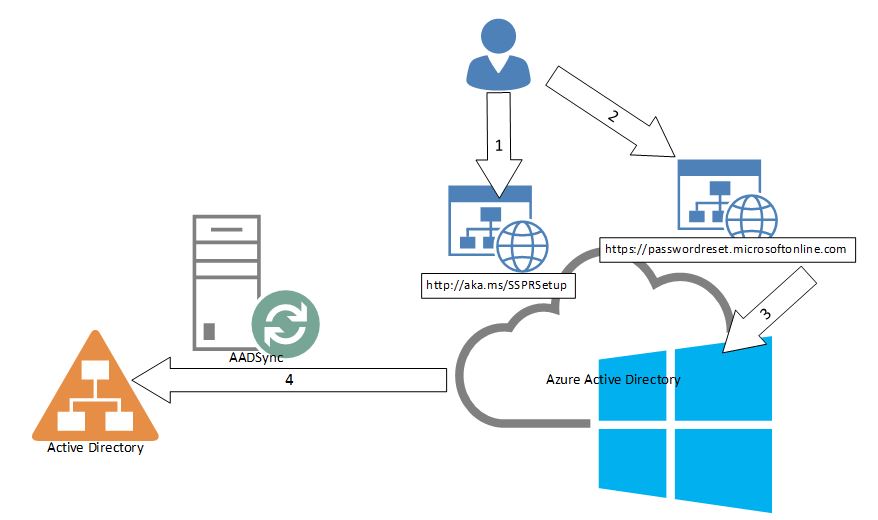

To use the password reset option, a user must have an Azure AD Premium license assigned. Once the license is assigned, the user can go to (1) https://aka.ms/SSPRSetup and configure how they want to confirm their identity when resetting the password. The available options are:

- answering a phone call (also available in Polish),

- entering a code from a text message,

- entering a code from an email sent to an alternate email address.

After opening the password reset page (2) (https://passwordreset.microsoftonline.com/), the user has to provide their login and authenticate with one of the previously configured methods. After successful authentication, the user can enter a new password (3), which is saved in Azure AD. Then the Azure AD Sync tool, configured for password writeback, replicates (4) the password to the on-premises AD.

The new password entered by the user must comply with the complexity rules enforced in the on-premises Active Directory.
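To get a feel for what "complying with the complexity rules" can mean, here is a minimal Python sketch of a check in the spirit of the standard Active Directory complexity setting (characters from at least three categories, account name not contained in the password). It is only an approximation: the real rules are whatever your domain policy enforces, and the `min_length` default and the sample account name are assumptions made for illustration.

```python
import string


def meets_basic_complexity(password: str, account_name: str, min_length: int = 8) -> bool:
    """Simplified approximation of an AD-style complexity check (not the real policy engine)."""
    # min_length is a hypothetical default; the real value comes from the domain policy.
    if len(password) < min_length:
        return False
    # Reject passwords that contain the account name (case-insensitive).
    if len(account_name) >= 3 and account_name.lower() in password.lower():
        return False
    # Count how many character categories are present.
    categories = [
        any(c.isupper() for c in password),
        any(c.islower() for c in password),
        any(c.isdigit() for c in password),
        any(c in string.punctuation for c in password),
    ]
    return sum(categories) >= 3
```

A check like this could be used to warn users before they submit a password that the on-premises AD is likely to reject; the authoritative decision is still made by the domain controller.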

What does the administrator need to do to deploy this feature?

The process of enabling Self-Service Password Reset is described very well on the following page: https://msdn.microsoft.com/en-us/library/azure/dn683881.aspx

It is important that the account of the administrator who enables the Self-Service Password Reset portal:

- has an Azure AD Premium license assigned,

- is a cloud account (created in Azure AD) rather than an account federated from the on-premises AD (a Microsoft Account used to manage the Azure tenant is not suitable either).

Keep in mind that Azure AD Sync also synchronizes passwords from the on-premises AD to Azure AD, so it provides the functionality previously available through DirSync.

How does the password writeback process work?

Password writeback relies on Azure Service Bus. The synchronization engine built into Azure AD Sync maintains communication with Azure AD. From the firewall's point of view, the connection is initiated from the Azure AD Sync server to Azure AD. Ports 9350-9353 are used for communication; when they are closed, synchronization falls back to port 80. After the user resets the password, the password itself is sent in plain-text form to the on-premises AD so that it can be checked against the complexity requirements. If it meets them, it is saved in the on-premises AD. Even though the communication may take place over port 80, it is encrypted by the mechanisms that Azure Service Bus provides.
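The port behaviour described above can be turned into a quick outbound connectivity check run from the synchronization server. The sketch below is only an illustration of the "9350-9353 first, then 80" order stated in the article; it is not how Azure AD Sync itself selects a port, and the host name you pass in is up to you (none is given in the article).

```python
import socket
from typing import Callable, Optional

SERVICE_BUS_PORTS = range(9350, 9354)  # 9350-9353, as stated in the article
FALLBACK_PORT = 80


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if an outbound TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def pick_port(host: str, probe: Callable[[str, int], bool] = tcp_reachable) -> Optional[int]:
    """Return the first reachable preferred port, else the fallback port, else None."""
    for port in SERVICE_BUS_PORTS:
        if probe(host, port):
            return port
    return FALLBACK_PORT if probe(host, FALLBACK_PORT) else None
```

The `probe` parameter exists so the selection logic can be exercised without touching the network, for example `pick_port("any-host", lambda h, p: p == 80)`.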

After running a few tests, I can say that a reset password is synchronized to the on-premises AD within about 1 minute.

Summary

Azure AD Premium opens up new possibilities for managing AD "in the cloud". In addition, thanks to the new synchronization tool, Azure AD Sync, the integration between the on-premises AD infrastructure and Azure AD is even more complete. Azure AD Sync will soon deliver more new features. Stay tuned!

Verifying your environment before migrating to Windows Azure

A few weeks ago Microsoft released a free service, Windows Azure Virtual Machine Readiness Assessment. It lets you verify your private infrastructure (currently Active Directory, SQL Server and SharePoint) for readiness to migrate servers to Windows Azure. The tool tests the selected environment and produces a detailed report. The report lists any areas where changes are required before migration (for example, in the configuration or architecture of a service). Data about the environment is collected both from a manually completed questionnaire and (perhaps above all) from automatically executed tests.

You can download the tool here: Windows Azure Virtual Machine Readiness Assessment (https://www.microsoft.com/en-us/download/details.aspx?id=40898)

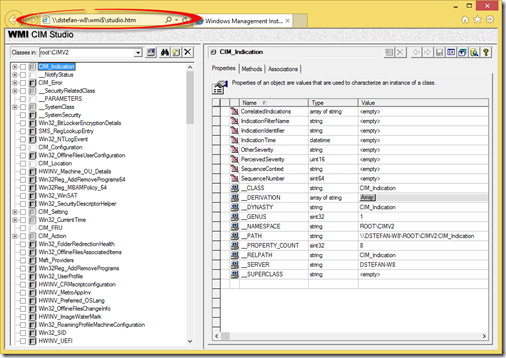

WMI CIM Studio on Windows 8.1

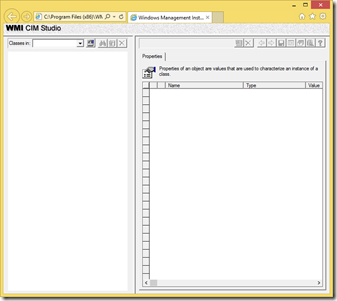

Anyone who works with Windows Management Instrumentation on a daily basis should get to know one particular tool: WMI CIM Studio (download it here: https://www.microsoft.com/en-us/download/details.aspx?id=24045). Unfortunately, with the arrival of new operating system versions, and with them new versions of Internet Explorer, CIM Studio stopped working. The ActiveX controls it is built on are considered unsafe, which results in empty dialog windows:

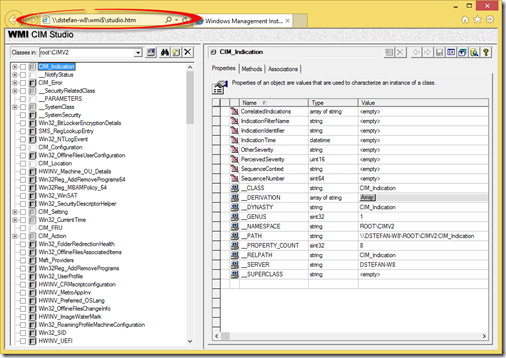

Fortunately, there is a workaround that also works on Windows 8.1/2012 R2 and is relatively simple: open studio.htm from a trusted network path. That network path can even be our own workstation :) So, let's get to work:

After installing WMI Tools, go to the installation directory (by default C:\Program Files (x86)\WMI Tools). Then share it under some name. In my case it is WMI$:

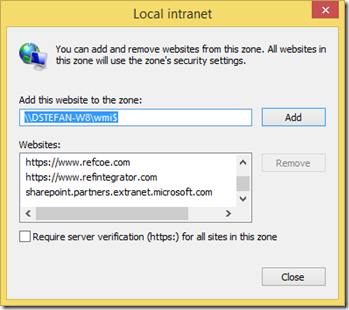

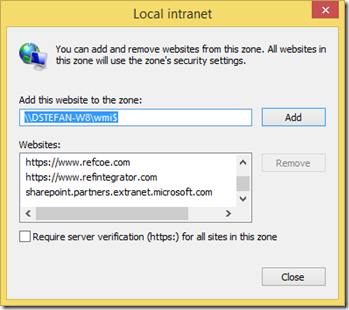

After applying the changes, go to the network share and open studio.htm with Internet Explorer. The first attempt has the same effect as launching CIM Studio directly from the Start menu: empty windows. In the advanced settings of the Local Intranet zone properties, add our path to the list of sites (note: it cannot be localhost, it must be the computer's NetBIOS name):

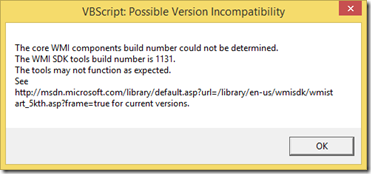

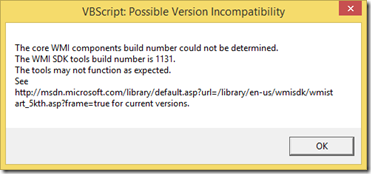
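The path you open in Internet Explorer has to use the computer name rather than localhost. A small Python helper like the one below can build that UNC path and guard against the localhost mistake. The function name is hypothetical, and the `WMI$` share name is simply the one used in this article.

```python
def studio_unc_path(computer_name: str, share: str = "WMI$", page: str = "studio.htm") -> str:
    """Build the UNC path to studio.htm on the shared WMI Tools folder."""
    # The article notes that localhost does not work; the NetBIOS name is required.
    if computer_name.lower() in ("localhost", "127.0.0.1"):
        raise ValueError("use the computer's NetBIOS name, not localhost")
    return "\\\\{}\\{}\\{}".format(computer_name, share, page)
```

For a workstation named PC01 (an example name), `studio_unc_path("PC01")` gives the path to paste into the Internet Explorer address bar.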

After confirming the changes, close IE. Open studio.htm again and you are greeted with an error:

Fortunately, this is a false positive. After clicking "OK" a few times we can work with CIM Studio!

For those interested in WMI, I recommend my series of articles on GeekClub.pl:

https://wss.geekclub.pl/baza-wiedzy/wmi-jako-obiektowa-baza-danych-wprowadzenie-16,1444

Recommended hotfix rollup for Windows 7 SP1 / Windows Server 2008 R2 SP1

In the middle of the month, Microsoft released a hotfix rollup that addresses known performance problems with system startup and logon.

The rollup also contains improvements to operating system stability.

For more information, I invite you to... (surprise) my blog: Recommended hotfix rollup for Windows 7 with SP1 / Windows Server 2008 R2 SP1.

My name is Piotr Gardy and I am one of the PFE engineers working at the Polish branch of Microsoft. I specialize in System Center Configuration Manager and in topics related to the deployment, performance and stability of workstations.

My blog has existed for a long time and I post to it regularly. However, I promise to link to those posts from this blog.

Please stay in touch. Best regards!

Join us at MTS 2013

This year we once again have the honor of presenting several sessions at the Microsoft Technology Summit conference. If you are not yet sure whether you are going, take a look at this year's program at www.mtskonferencja.pl.

If you will be at the conference, you are of course welcome at our sessions and to get in touch with us. We promise to keep the bar high!

| Topic | Description | Speaker | Time |
| --- | --- | --- | --- |
| Web developer - Performance Troubleshooter Toolbox | During requirements gathering, design or implementation of an application, we very often focus solely on the functionality of the solution, "forgetting" about quality attributes as important as security or performance, the subject of this session. In this session you will learn about: • aspects of application architecture worth paying attention to when measuring performance, • basic tools used to analyze performance problems, both for applications already deployed and for systems still being implemented, • approaching application performance from the perspective of its entire lifecycle (Application Lifecycle Management). | Bartosz Kierun | Day 1: 12:30 - 14:00 |
| Exchange Server 2013 - Tips & Tricks | Come and learn more about the most interesting tips and tricks for Exchange 2013. You will not find these on TechNet! | Pawel Partyka | Day 1: 12:30 - 14:00 |
| Automated deployment of Windows 8/8.1 in my company? Sure, it can be done! | Learn how to approach deploying Windows Client in your company. Can it be done as early as tomorrow? What about preparation? Where do you get the deployment tools? Perhaps they are already present in your company? These questions will be answered in the session, in theory but also in practice: there has to be a live demo! | Piotr Gardy | Day 2: 10:30 - 11:30 |
| Solution architecture on the SharePoint platform | SharePoint 2013 and Office 365 support several models for building and hosting solutions. SharePoint solution authors face the dilemma of which architecture to choose. The session demonstrates different approaches to extending the standard functionality of SharePoint products, along with selected recommendations and proven good practices for planning and building various types of solutions. | Pawel Królak | Day 2: 12:00 - 13:00 |
| Tame your cloud: monitoring and visualizing the state of the cloud with System Center Operations Manager 2012 R2 | Taming the cloud is not easy, given that systems often have no fixed home and wander between many hosts. Operations Manager can handle this automatically and simply, using many add-ons that not only monitor the operating system, services, processes and the virtualization platform, but also show the state of the infrastructure in a completely different way from the simple console view. In the session we will try to show best practices for monitoring a private cloud, how to approach monitoring itself, and how best to present cloud data in a way that is clear and understandable for IT operators and administrators. | Lukasz Rutkowski | Day 2: 13:00 - 14:30 |
| A trace is not that scary: how to investigate network problems with Microsoft Network Monitor | Most specialists struggle daily with all kinds of network and infrastructure problems. The usual response is to comb through gigabytes of logs and turn to internet search engines. Only as a last resort do we analyze network traffic, "because it's not a network problem". This expert session is a set of tips showing how to efficiently troubleshoot problems, not only network ones, with a typically network-oriented tool: Microsoft Network Monitor. | Daniel Stefaniak | Day 2: 13:00 - 14:30 |

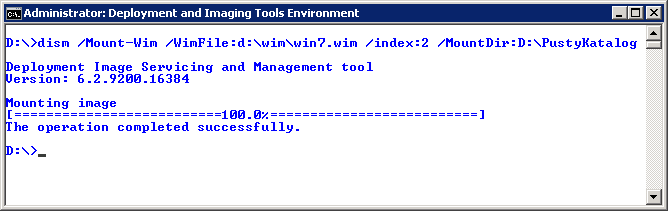

Managing operating system images offline

Because I very often get questions about managing operating system images (WIM files) offline, I have put together a simple scenario below in which we will:

- enable the Telnet client,

- remove the image with index 1 from the WIM file,

- rename the image.

We will perform all of these tasks without having to restart Windows. Offline servicing means modifying a Windows image without booting it first. It is a convenient way to manage existing operating system images (WIM files), because it removes the need to boot or reinstall the operating systems and then capture updated images.

The dism.exe and imagex.exe tools are part of the Windows ADK - https://www.microsoft.com/en-us/download/details.aspx?id=30652

More information about the image servicing tools - https://technet.microsoft.com/pl-PL/library/hh825039.aspx

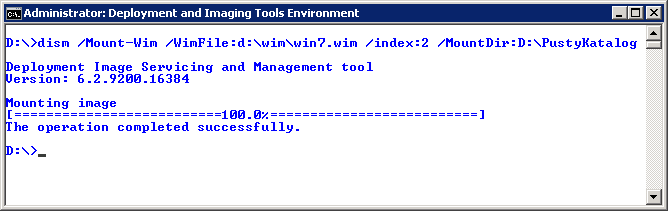

Enabling the Telnet client

1. Create an empty directory and then run the command:

dism /Mount-Wim /WimFile:d:\wim\win7.wim /index:2 /MountDir:D:\PustyKatalog

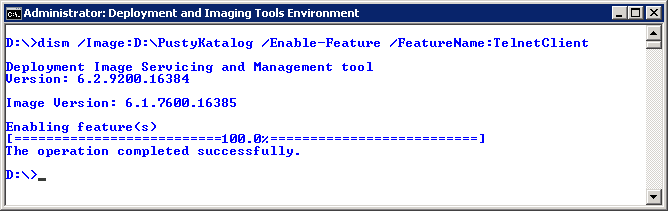

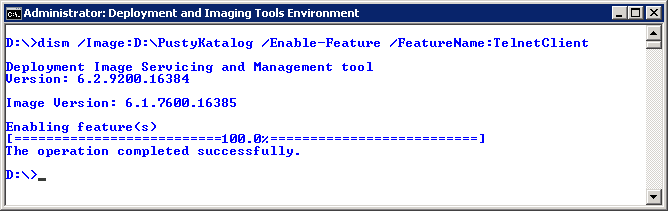

2. Next, run the command (note that letter case matters here):

dism /Image:D:\PustyKatalog /Enable-Feature /FeatureName:TelnetClient

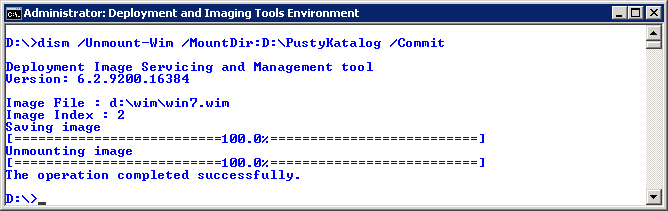

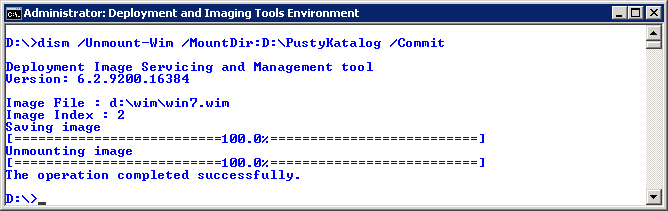

3. Then run the command:

dism /Unmount-Wim /MountDir:D:\PustyKatalog /Commit
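If you repeat these three steps often, they can be scripted. The Python sketch below only assembles the same three DISM command lines shown above and optionally runs them in order; the function names are hypothetical, and the paths, index and feature name are the ones from this scenario.

```python
import subprocess
from typing import List


def offline_servicing_commands(wim: str, mount_dir: str, index: int, feature: str) -> List[List[str]]:
    """Build the mount / enable-feature / commit sequence as argument lists."""
    return [
        ["dism", "/Mount-Wim", f"/WimFile:{wim}", f"/index:{index}", f"/MountDir:{mount_dir}"],
        ["dism", f"/Image:{mount_dir}", "/Enable-Feature", f"/FeatureName:{feature}"],
        ["dism", "/Unmount-Wim", f"/MountDir:{mount_dir}", "/Commit"],
    ]


def run_all(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it as well when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the sequence at the first failing DISM call.
            subprocess.run(cmd, check=True)
```

Calling `run_all(offline_servicing_commands(r"d:\wim\win7.wim", r"D:\PustyKatalog", 2, "TelnetClient"))` prints the commands without running them. If a real run fails halfway, the image stays mounted; `dism /Unmount-Wim /MountDir:D:\PustyKatalog /Discard` releases it without saving changes.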

Removing the image with index 1 from the WIM file

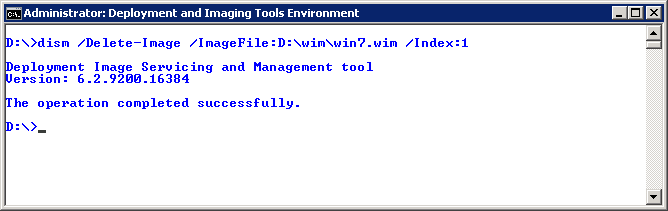

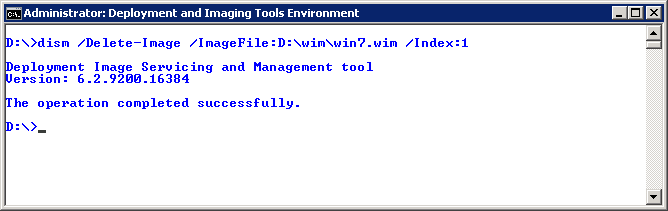

1. To delete the image with index 1, which in our case contains the Windows 7 system partition that the management system will create automatically during installation, run the command:

dism /Delete-Image /ImageFile:D:\wim\win7.wim /Index:1

Renaming the image

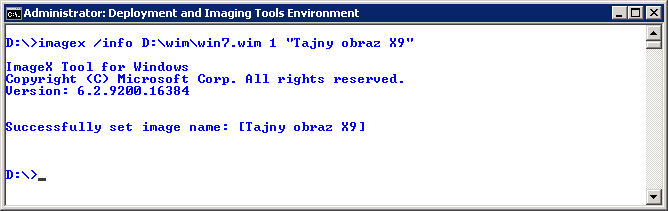

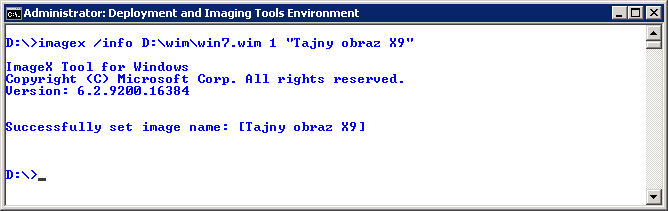

1. To do this, run the command:

imagex /info D:\wim\win7.wim 1 "Tajny obraz X9"

Managing operating system images offline (continued)

In the next example we will look at software updates. The goal of the exercise is to update an operating system image using System Center Configuration Manager 2012.

To use this feature, we first have to install and configure the Configuration Manager role called Software Update Point (SUP).

Updating the software

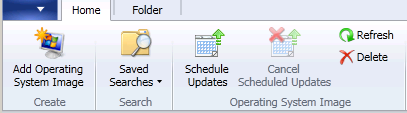

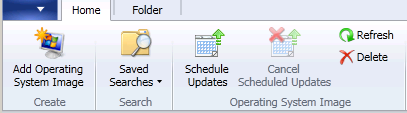

1. In the Configuration Manager console, select the Software Library\Overview\Operating Systems\Operating System Images node.

2. Select the operating system image you want to update.

3. Then choose Schedule Updates.

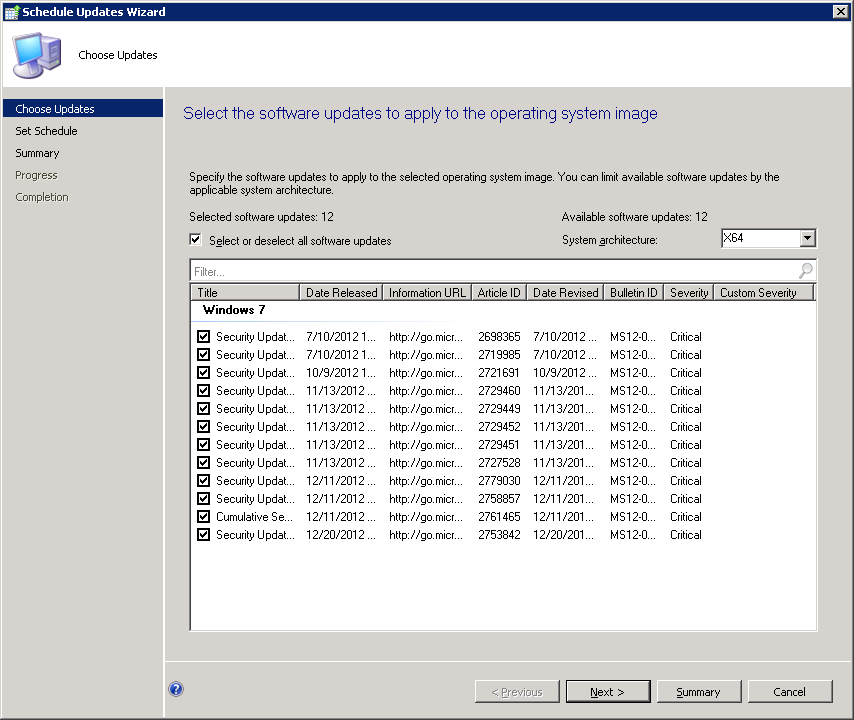

4. In the Schedule Updates Wizard, select the updates you want to install.

5. On the following pages, choose the options you need (for example, configure the schedule) and close the wizard.

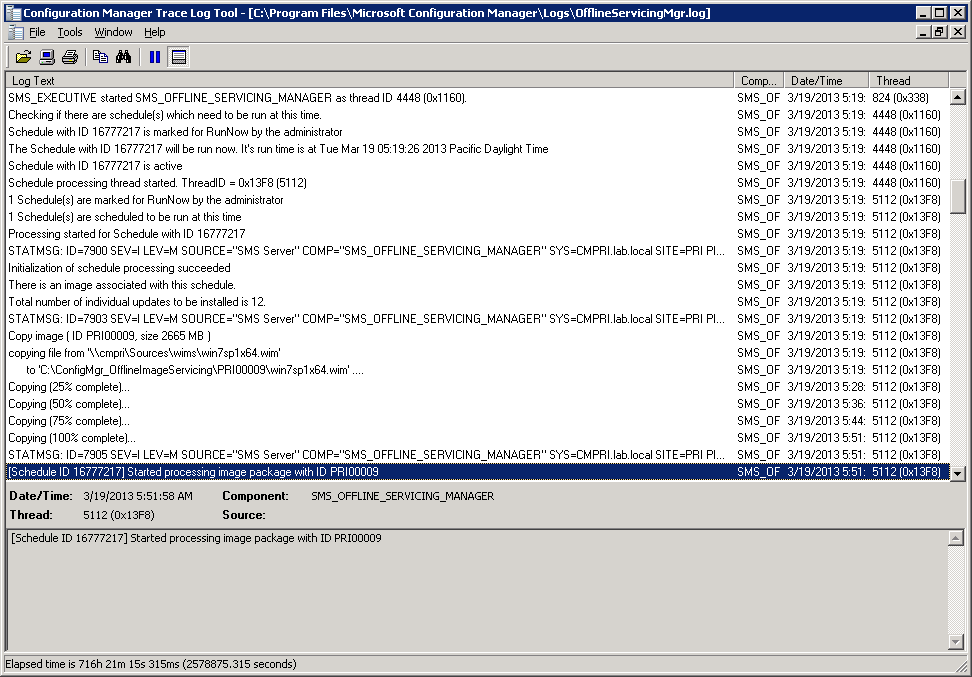

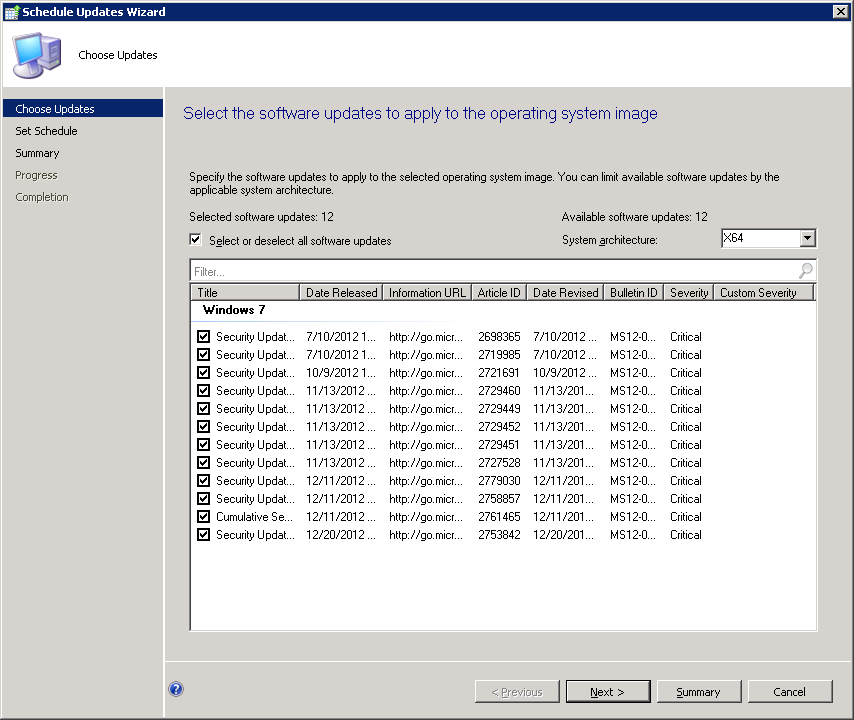

6. You can follow the installation progress in the log:

<server installation directory>\Logs\OfflineServicingMgr.log

Change management in Office 365

Moving to Software as a Service offerings such as Office 365 may require customers to adapt their change management process. The goal of this article is to describe the types of changes that occur in Office 365 and how we inform our customers about planned changes. With this information, customers can integrate, where needed, their own change control process with the changes made to the platform.

Office 365 principles

"Office 365 is Evergreen" is a phrase you will often find on English-language pages about Office 365. What does it mean in practice? When we work with Office 365, we always use the latest version of the platform. Office 365 is updated every day with improvements or new features.

Office 365 is version-agnostic. When working with Exchange Online, for example, we can retrieve the organization's version, but we do not publish which improvements and new features a given version introduces. Moreover, Exchange Online organization versions can differ between Office 365 instances (tenants).

Types of changes:

Changes introduced in Office 365 fall into 5 categories:

- New features

- Changes to existing features

- Disruptive changes (changes that disrupt the service)

- Configuration changes

- Platform improvements

New features are changes that add new capabilities to the service, for example Data Loss Prevention in SharePoint Online or MAPI/HTTP in Exchange Online.

Changes to existing features extend existing service parameters, for example increasing the quota for OneDrive for Business or for shared mailboxes in Exchange Online.

Disruptive changes are more significant changes that require longer planning and action on the customer's side. An example is reduced support for products, such as supporting only the latest browser version available for a given operating system from January 12, 2016.

Configuration changes require the customer's involvement but do not require longer planning. They usually concern improvements in how the platform works, for example changes to the IP address ranges used by Office 365.

Platform improvements make the platform work better, increase security, improve performance or fix known problems in Office 365. Changes of this type are usually invisible to customers.

How we communicate changes

Information about changes in Office 365 is available in the following places:

Office 365 system requirements - https://technet.microsoft.com/pl-pl/library/office-365-system-requirements.aspx. Describes the requirements for the Office 365 platform, including long-term plans related to those requirements. For example, mainstream support for Office 2010 ends on October 13, 2015, which in the context of Office 365 means that new features appearing on the cloud platform may not be available in Office 2010 after that date.

Office 365 blog - https://blogs.office.com/. Updated daily, it contains information about newly introduced features and changes to existing platform features.

The Service Health and Planned Maintenance pages in the Office 365 portal. The Service Health page reports incidents and the work being done to restore service components after a detected failure. The Planned Maintenance page reports scheduled maintenance work and its impact on service availability.

Message Center in the Office 365 portal. Contains, among other things, notifications about planned changes that may disrupt the service, for example disabling support for the SSL 3.0 protocol.

Public roadmap - https://office.com/roadmap. This page presents high-level information about feature changes and new features. We do not publish on this page all information about the new features or configuration changes that we plan to introduce in Office 365.

Change types and information channels

Where should you look for information about a given type of change? The table below should make the search easier.

| Change | Source | When does the information appear? |
| --- | --- | --- |
| New features | Public roadmap | Up to 12 months in advance |
| New features | Message Center, Office 365 blog | At release |
| Changes to existing features | Public roadmap | 1-3 months in advance |
| Changes to existing features | Office 365 blog | At release |
| Disruptive changes | Office 365 system requirements | At least 12 months in advance |
| Disruptive changes | Message Center | 12 months |
| Configuration changes | Message Center | 1-12 months in advance |
| Platform improvements | Service Health pages, if the change concerns restoring the service after a failure | 5 days minimum |
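If you want to wire this table into your own change control tooling, it can be kept as a small lookup structure. The Python sketch below is just one possible encoding of the table above; the English labels and the function names are my own choices for illustration, not official Office 365 terms.

```python
from typing import Dict, List, Tuple

# change type -> list of (source, lead time), taken from the table above
CHANNELS: Dict[str, List[Tuple[str, str]]] = {
    "new features": [
        ("Public roadmap", "up to 12 months in advance"),
        ("Message Center, Office 365 blog", "at release"),
    ],
    "changes to existing features": [
        ("Public roadmap", "1-3 months in advance"),
        ("Office 365 blog", "at release"),
    ],
    "disruptive changes": [
        ("Office 365 system requirements", "at least 12 months in advance"),
        ("Message Center", "12 months"),
    ],
    "configuration changes": [("Message Center", "1-12 months in advance")],
    "platform improvements": [("Service Health pages", "5 days minimum")],
}


def sources_for(change_type: str) -> List[str]:
    """Return the channels that announce the given type of change."""
    return [source for source, _ in CHANNELS[change_type.lower()]]


def types_announced_in(source_keyword: str) -> List[str]:
    """Return the change types announced in a channel whose name contains the keyword."""
    keyword = source_keyword.lower()
    return [
        change_type
        for change_type, rows in CHANNELS.items()
        if any(keyword in source.lower() for source, _ in rows)
    ]
```

For example, `types_announced_in("message center")` lists the change types an administrator can expect to see in the Message Center.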

Can I hold back a change in Office 365?

A Software as a Service platform such as Office 365 does not allow a change to be held back at a customer's request. Office 365 is a global service. Many Office 365 instances use the same physical servers at the same time. In addition, because of our data privacy commitments, we do not record which servers host a customer's user mailboxes or SharePoint sites at any given moment. As a result, it is not technically possible to hold back a change at a customer's request.

First Release

First Release is a program that gives customers access to some significant new features before they are deployed to environments on the standard release cycle. Opting in applies to the entire Office 365 instance, so it covers both Exchange Online and SharePoint Online.

First Release is not a "beta" program. Customers using First Release receive the same support as those on the standard cycle.

First Release is not a tool for delaying the installation of new features. It is a program for receiving new service features first.

Changes in First Release are introduced at least 2 weeks earlier than in standard environments. If the customer turns off First Release in an Office 365 instance, features that are not yet available in standard tenants will be rolled back.

Summary

There are several places where we publish information about changes planned for Office 365. I recommend following the Message Center in the Office 365 portal regularly to stay up to date with changes, especially those that affect service availability or require administrator action. The Message Center itself will be improved further; look for information about changes to it on the Office 365 Roadmap.

An Error when attempting to Preview a Report using SQL Server Data Tools in a Remote Session

A customer with whom I work recently encountered an error message when attempting to preview a report using SQL Server Data Tools (SSDT) in a remote session via Citrix.

The error message that was being returned was:

An error prevented the view from loading. (Microsoft Visual Studio)

===================================

An error occurred while attempting to start the report preview worker process. (Microsoft.ReportingServices.Designer)

Given that this scenario worked with previous editions of Reporting Services and BI Development Studio, the error was unexpected. On further investigation, the same behavior and error could be reproduced by launching a Remote Desktop session in Seamless or Remote Applications Integrated Locally (RAIL) mode.

RAIL extends the RDP protocol to present a remote application running on a RAIL server as a local user application running on the client machine. This causes the remote application to appear as if it is running on the user's local computer rather than being presented in the desktop of the remote server.

In this scenario, the behavior and the error message are both by design.

SSDT is a multi-targeted assembly that is built around the .NET 4.0 Framework. Business Intelligence Development Studio (BIDS) is built around the .NET 3.5 Framework, and there are differences in the way that the .NET 4.0 Framework deals with child processes when the parent process dies. As a result, there was a deliberate decision to change the behavior of the report viewer in preview mode. This is largely because under the .NET 4.0 Code Access Security policy, executing under the current AppDomain is no longer supported, necessitating use of a sandbox. You may wish to read Brian Hartman's blog posting "Expression Evaluation in Local Mode" for a more detailed discussion of Visual Studio 2010 defaulting to the .NET 4.0 CAS policy. The net result of the change is that when a report is previewed, SSDT spawns a child process to allow previewing of the report. In an RDP session using Seamless or RAIL mode, it would still be necessary for SSDT to spin up a new instance of the Reporting Services Preview Processing Host (PreviewProcessingService.exe) separate from the SSDT process. If the user were to close SSDT, or SSDT were to crash, orphaned instances of the Reporting Services Preview Processing Host could remain in memory and continue executing after SSDT was terminated.

The change makes it cleaner to close SSDT and eliminates the possibility of orphaned child processes in the event that the SSDT environment is terminated for some reason. Had the change not been made, it would have been possible to allow orphaned child applications/processes to continue to execute, using CPU cycles and consuming server memory, until the user logged off the machine. If the user didn't log off and rather elected to simply disconnect without logging off, any orphaned applications would have remained in memory for an indefinite period of time. In the scenario where a machine is being remotely accessed by multiple users, that could potentially mean thousands of orphaned applications would be in memory and actively executing at any given time.

Analysis Services: Removing Instance Level Admin Rights from Developers….

One of the popular questions these days: is it possible to remove admin rights from developers while still letting them run backup/restore operations and Profiler traces? I know that, from a developer's perspective, this is like taking away their freedom over things they developed, but from a management perspective, admin rights should belong only to the Database/BI operations team. It is a very long-running debate.

Short answer: YES

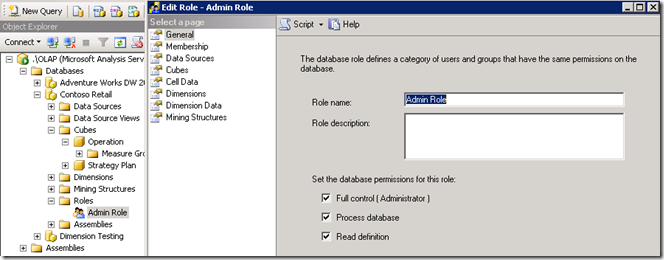

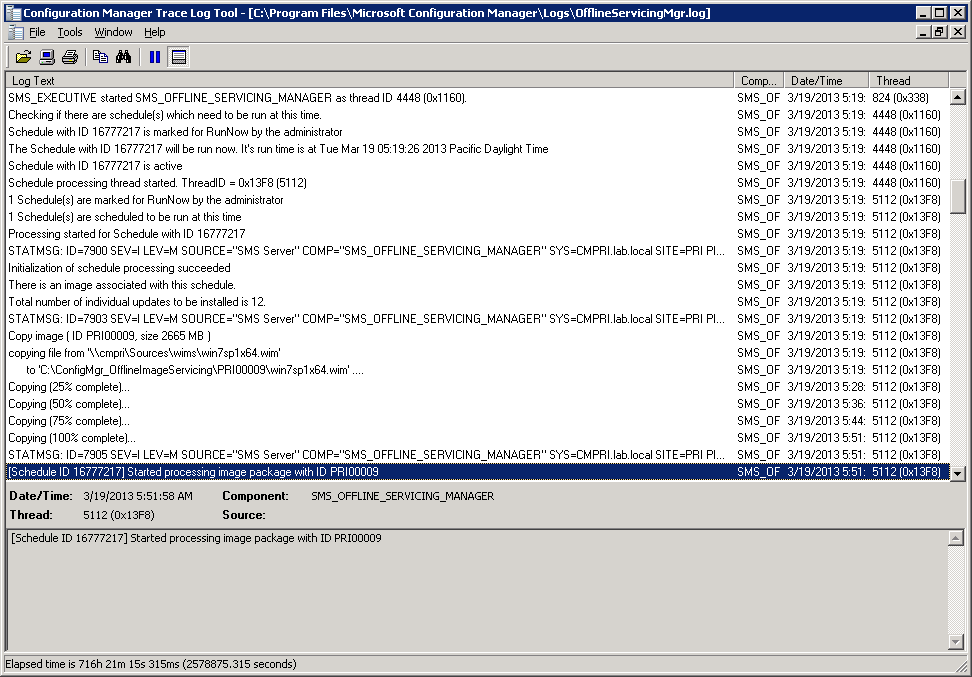

Here is my scenario and the steps to limit permissions:

In this scenario, we have two users: UserA and UserC

UserA: Instance admin (represents DBA permissions)

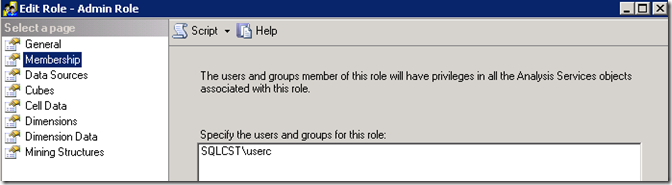

UserC: Database-level admin (represents a developer who has admin rights for a specific database)

Two databases: Adventure Works and Contoso Database

UserC has Full Control over Contoso Database

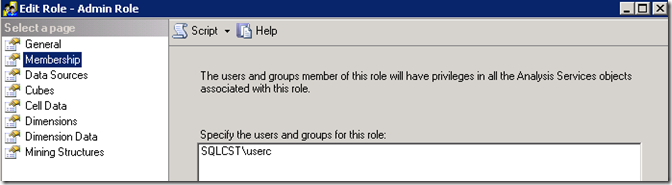

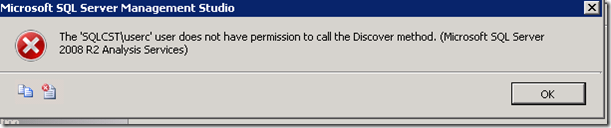

Now UserC can run backup/restore commands against Contoso Database. However, we have identified one issue. Backup through the GUI works without problems, but if UserC tries to restore a database through the GUI, the following error message appears: "The 'DOMAIN\username' does not have permission to call the Discover method"

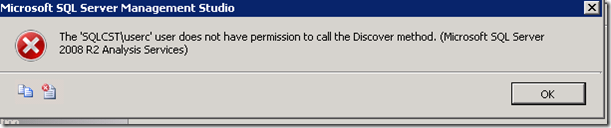

I suspect this might be a defect in the SSMS GUI, which sends extra Discover commands to Analysis Services. However, you can still restore the database by using an XMLA script:

<Restore xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<File>C:\Program Files\Microsoft SQL Server\MSAS10_50.OLAP\OLAP\Backup\Contoso Retail.abf</File>

<DatabaseName>Contoso Retail</DatabaseName>

<AllowOverwrite>true</AllowOverwrite>

</Restore>

This works perfectly well.
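If developers need to restore different databases regularly, the script above can be generated rather than edited by hand. The Python sketch below builds the same Restore command with the three elements used in this post (File, DatabaseName, AllowOverwrite); the function name is hypothetical, and sending the result to the server (for example from an XMLA query window in SSMS) is left to you. The namespace constant uses the `http://` form, which is the URI the Analysis Services engine schema defines.

```python
import xml.etree.ElementTree as ET

AS_ENGINE_NS = "http://schemas.microsoft.com/analysisservices/2003/engine"


def build_restore_xmla(backup_file: str, database_name: str, allow_overwrite: bool = True) -> str:
    """Return an XMLA Restore command as a string."""
    # Serialize the engine namespace as the default namespace (no prefix).
    ET.register_namespace("", AS_ENGINE_NS)
    restore = ET.Element(f"{{{AS_ENGINE_NS}}}Restore")
    fields = (
        ("File", backup_file),
        ("DatabaseName", database_name),
        ("AllowOverwrite", "true" if allow_overwrite else "false"),
    )
    for tag, text in fields:
        child = ET.SubElement(restore, f"{{{AS_ENGINE_NS}}}{tag}")
        child.text = text
    return ET.tostring(restore, encoding="unicode")
```

Because the elements are built through ElementTree, special characters in the file path or database name are escaped automatically, which is easy to get wrong when concatenating strings.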

You can find the permissions required for backup/restore operations at the following link:

https://msdn.microsoft.com/en-us/library/ms174874(v=sql.105).aspx

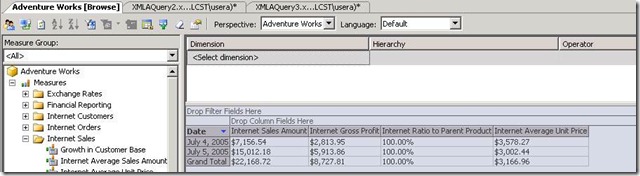

Another feature your developers may want to use is Profiler trace. Developers can run a Profiler trace, but they can only see trace events for the databases they have admin rights on.

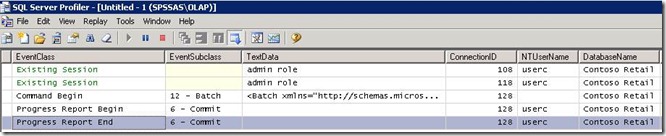

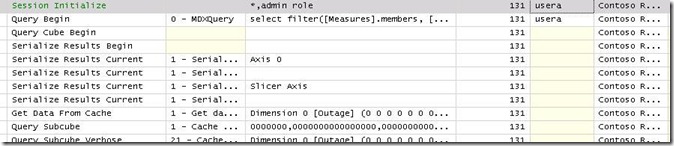

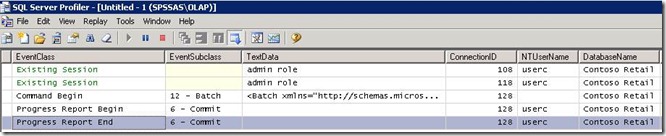

If you give a user full permission on a specific database, the user can run a Profiler trace and see only the events related to that database. For instance, UserC has admin rights on Contoso Database. UserC can run a Profiler trace against the AS instance, but can only see trace events for Contoso Database. If UserA connects to Contoso, this appears in UserC's trace. But if UserA connects to another database, say Adventure Works, that session does not appear in UserC's trace.

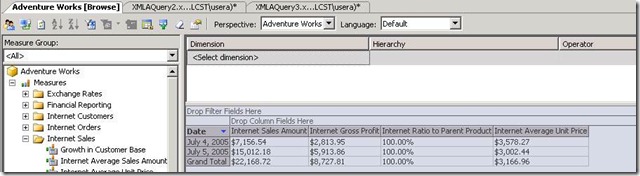

This screenshot shows UserA accessing Adventure Works.

This screenshot shows the trace that UserC is running.

Now if UserA accesses the Contoso Retail database, this appears in the trace that UserC is running (screenshot attached).

If you have any questions, feel free to contact me.

Analysis Services Tabular Mode Performance Tuning Guide is available!

We recently published performance guidelines for Analysis Services Tabular models. You can access this white paper on our MSDN site:

https://msdn.microsoft.com/en-us/library/dn393915.aspx

Feel free to post your questions!

Thanks

Kagan


Business Intelligence Academy for you…

It is a pleasure to announce another great offering in our Premier catalogue. BI Academy targets any technical contributor to a Business Intelligence solution who wants to skill up on Microsoft Business Intelligence components. BI Academy is not only a set of classroom trainings: we regularly catch up with attendees to give them the opportunity to ask questions. BI Academy is a mentoring program that involves classroom and virtual trainings as well as mentoring sessions. If you are interested in the mentoring program, please contact Premier Services or your Technical Account Manager.

BI Academy Training

BI Academy is divided into seven modules, which provide different levels of expertise and knowledge. Each module is a two-day class with hands-on training. The program follows a roadmap from the fundamentals to deeper areas of the BI stack, such as performance and tuning techniques. Learn how to integrate SQL Server™ BI technologies with SharePoint® Server so that you can provide one central location for all your enterprise-wide BI content and tools. Technologies covered:

- SQL Server Engine

- Analysis Services (SSAS) Tabular and Multi-Dimensional

- Reporting Services (SSRS)

- Integration Services (SSIS)

- Data Quality Services

- Master Data Services

- Excel tools and PowerPivot

- SharePoint Server 2010

- PerformancePoint and Excel Services

Module 1

BI Fundamentals

Overview of the fundamental concepts in a BI solution by introducing the different multi-dimensional models.

Module 2

Tabular Modelling with SQL Server 2012

Learn how to install, develop and configure SSAS Tabular Solutions with SQL Server 2012 and take advantage of xVelocity technology.

Module 3

SSAS Performance, Tuning & Optimization

Review and improve the performance of an SSAS solution and learn how to troubleshoot the most common scenarios.

Module 4

Building and loading data warehouses with SSIS & T-SQL

Review how to improve the performance of a BI solution and how to troubleshoot the most common scenarios, including DW design, SSIS development and loading data into a data warehouse.

Module 5

Enterprise Information Management

Take advantage of Data Quality Services (DQS) and Master Data Services (MDS) to build a knowledge-driven solution. Learn how to deal with data quality issues, using real case scenarios.

Module 6

Data Consumption & Visualization with SharePoint Integration

After session 3, where we saw how to create different ways to explore data or aggregate it in a custom view, the main purpose of this session is to show how to make those visualizations available in SharePoint. In summary, this session shows how a BI solution can be integrated with a SharePoint infrastructure.

Module 7

Data Consumption & Visualization with SSRS, PowerPivot and Power View

Presentation of the capabilities of the Data Visualization & Data Consumption Layers, Reporting Services Features & Development, Self-Service BI with Report Builder and Self-Service BI with PowerPivot for Excel.

Can I use SSISDB without installing SSIS?

Recently a customer asked me a question about SSISDB. As you may know, when you install only the SQL Server Engine and open Management Studio, you still see the Integration Services Catalogs node.

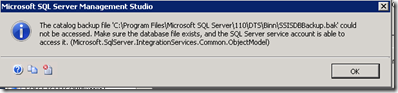

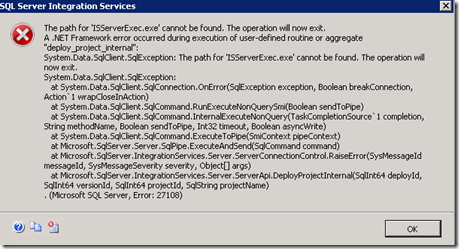

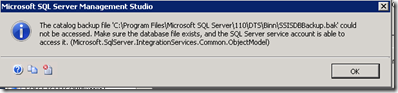

If you try to create a catalog without installing SSIS, you get the following error message about SSISDBBackup.bak:

The catalog backup file 'C:\Program Files\Microsoft SQL Server\110\DTS\Binn\SSISDBBackup.bak' could not be accessed. Make sure the database file exists, and the SQL Server service account is able to access it. (Microsoft.SqlServer.IntegrationServices.Common.ObjectModel)

Some sites suggest restoring SSISDBBackup.bak as a workaround so that you can use SSISDB. It is true that you can restore the .bak file from a machine that has SSIS 2012 installed; however, you cannot use SSISDB even if the database restores successfully.

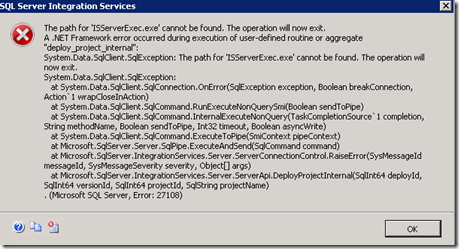

Some users say they only want SSISDB to store packages, but this is not possible either. When you deploy a package to SSISDB, the deployment fails with an "ISServer.exe not found" error message.

In conclusion, to use SSISDB functionality such as storing, deploying, and running packages through the SSISDB catalog, you have to install SQL Server Integration Services 2012.

Let me know if you have any questions.

Kagan


Excel 2013 PowerPivot workbook with PowerPivot for SharePoint 2010?

Last week, one of my customers had an interesting issue with PowerPivot workbooks. Here is the scenario:

My customer created a PowerPivot model in Excel 2013. As you may know, PowerPivot now ships with Excel 2013 as one of its built-in add-ins.

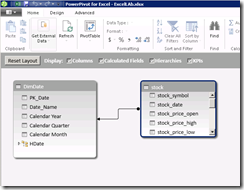

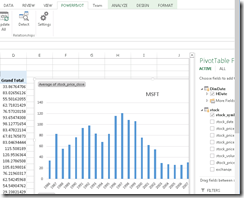

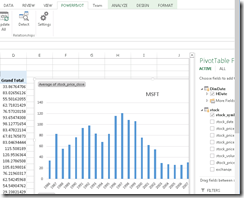

Please note that the images in this post are illustrative.

So the customer created a very simple model and also created a PivotTable in a workbook. Everything worked fine on the Excel side.

As the next step, we deployed the workbook to the SharePoint PowerPivot Gallery and tried to render it through SharePoint. When you first open the workbook, Excel Services manages to render it properly. There is no problem so far, because the workbook does not yet need to read data from the source or refresh data.

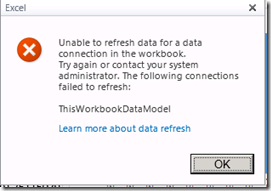

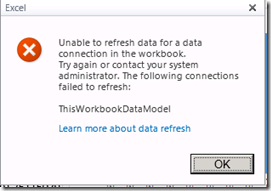

When you click one of the drill-down or filter options, however, the workbook needs to refresh its data and has to be loaded into your PowerPivot for SharePoint instance. At this point we got the following error message:

"Unable to refresh data for a data connection in the workbook. Try again or contact your system administrator. The following connections failed to refresh: ThisWorkbookDataModel."

We couldn't find anything useful in the ULS logs. After further investigation, we found that the issue occurs because Excel 2013 maintains data models in workbooks differently from SQL Server 2008 R2 or SQL Server 2012 PowerPivot for Excel 2010. Excel 2013 uses an internal connection for communication between the workbook and the embedded PowerPivot data. This internal connection does not reference a Microsoft OLE DB provider for Analysis Services (MSOLAP), whereas PowerPivot for Excel 2010 uses an MSOLAP connection to load the embedded PowerPivot data model from a custom XML part in the workbook.

Excel Services and PowerPivot for SharePoint 2010 load PowerPivot data models from a custom XML part in the workbook. Therefore, this technology is incompatible with internal connections that do not reference an MSOLAP provider (such as the connections that are used by Excel 2013).

Note: PowerPivot functionality is not supported for Excel 2013 workbooks that use data models in SharePoint Server 2010 environments.

It seems the only options here are to upgrade your SharePoint farm to SharePoint 2013 or to use Excel 2010 to create your data models.

To work around this issue, upload the Excel 2013 workbook to a SharePoint Server 2013 farm.

Excel Services and PowerPivot for SharePoint 2013 load Excel 2013 workbooks that use advanced data models and PowerPivot workbooks that are created in PowerPivot for Excel 2010.

If you want to read more about it, here is the KB article:

https://support.microsoft.com/kb/2755126

Thanks

Kagan Arca


Exciting news...

It is a pleasure to announce our new blog site, which will cover technical information as well as news, updates, and events from Business Intelligence Premier Field Engineering. Our expert team comes from all around the world, and this is a great opportunity for us to share our field experience with you. In this blog you will find content on best practices, recommendations, customer learnings, workshops, and events. You will also have a chance to ask our experts questions. Here are some of the technologies we plan to cover:

- SharePoint

- Reporting Services (SSRS)

- Integration Services (SSIS)

- Analysis Services (SSAS) / PowerPivot

- Data Quality Services (DQS)

- Master Data Services (MDS)

- SQL Server

- Data Warehousing

- StreamInsight

In addition to exciting technical topics, we will also give you updates about:

- Microsoft Premier Catalog Update For Business Intelligence

- Microsoft SQL/BI Operational Days

- Community Events

We also want to talk about:

- Recommendations

- Customer Scenarios / Lessons Learned From Large Implementations

- Performance Tuning and Optimisation Techniques

- Operational Implementations

- Troubleshooting / Monitoring Techniques

I know it all sounds interesting, and we are looking forward to sharing our experience with you, but we also want to hear from you! Your feedback is always valuable and helps us address your needs.

Stay tuned! We will soon have some exciting news about a Readiness Program model we are working on for you. More news to come!

Funny Case in Reporting Services

Hello everyone,

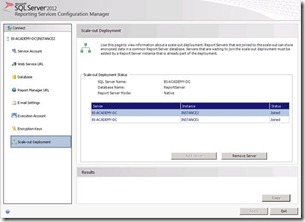

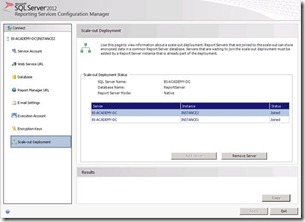

This week I had a funny case involving a Reporting Services scale-out deployment. If you are familiar with this subject, you know that multiple instances of SQL Server Reporting Services can share the same Report Server database.

I had two report servers on different machines and a remote Report Server database on a SQL Server cluster:

· Server A – Instance1

· Server B – Instance2

· Server Z – SQL Server Cluster

So, the goal was to remove SSRS Instance2 from the scale-out deployment, keeping only SSRS Instance1.

If we look at Reporting Services Configuration Manager, we can see the two instances in the scale-out configuration section:

The first step would be to remove Instance2 from the scale-out deployment and then uninstall the Reporting Services bits from Server B.

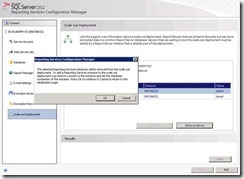

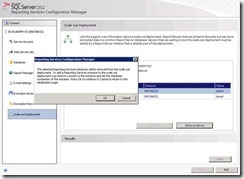

But something weird happened. First, the person responsible for this operation removed Instance2 using Reporting Services Configuration Manager, which showed the following warning before removing the instance from the scale-out deployment.

This warning is normal. After we click OK, we can see that Instance2 was removed from the scale-out deployment, together with the installation ID that was removed.

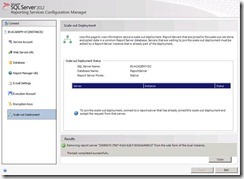

Now comes the strange part. If you look at the Keys table in the Report Server database, or at Reporting Services Configuration Manager on Instance1, you can see that the Instance2 record still exists!

If we check the status column in the scale-out section, we see that Instance2 is in the "Waiting to Join" state. Basically, the operation performed by the user simply removed the symmetric key information for Instance2 but did not remove the instance from the scale-out deployment.

So what can we do? If you try to add Instance2 again (an option that does not make any sense!), it complains that the SSRS instance no longer exists on the server.

The next logical step would be to use the rskeymgmt.exe tool with the option "-r <installationID>" to remove the symmetric key information for a specific report server instance, thereby removing the report server from a scale-out deployment. The <installationID> is a GUID value that can be found in the RSReportServer.config file or in the Keys table in the Report Server database.

But something went wrong. The tool returned an error indicating that the Report Server Windows service for instance MSSQLServer was not found. If we look closely, MSSQLServer is the name of the default instance in SQL Server.

How can we solve this issue? Let's recap our environment: one server with SSRS Instance1 and another server with SSRS Instance2. Since we are running the tool on the server with Instance1 (and Instance2 was part of Instance1's scale-out deployment), we need to specify the "-i <Instance ID>" option when running rskeymgmt.exe.

So we ran rskeymgmt.exe with the -r and -i options, as in the following picture, specifying the Instance2 installation ID and Instance1 as the scale-out instance.

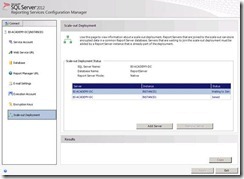
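As a rough sketch of the command described above, the following Python helper only assembles the argument list for rskeymgmt.exe; it does not run the tool. The -r and -i options come from the text. The helper name, the example GUID, and the instance name are placeholders, and the exact value that -i expects on your server is an assumption to verify against the rskeymgmt documentation.

```python
import uuid


def build_rskeymgmt_remove_args(installation_id, local_instance):
    """Build the rskeymgmt argument list that removes one server from a scale-out.

    installation_id: GUID of the instance to remove (from the Keys table or
                     the RSReportServer.config file).
    local_instance:  the local report server instance the tool should use (-i).
    """
    # Fail early if the installation ID is not a well-formed GUID.
    normalized = str(uuid.UUID(installation_id))
    if not local_instance.strip():
        raise ValueError("local_instance must not be empty")
    return ["rskeymgmt.exe", "-r", normalized, "-i", local_instance]


# Hypothetical values, for illustration only.
args = build_rskeymgmt_remove_args(
    "12345678-1234-1234-1234-1234567890AB", "Instance1"
)
print(" ".join(args))
```

Validating the GUID before building the command is a small safeguard: a mistyped installation ID is caught before anything is passed to the tool.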

And here you go! Now everything is in place and Instance2 is finally off the scale-out topology.

Hope this solution helps you!

Alexandre Mendeiros Premier Field Engineer – Portugal

Reference

rskeymgmt Utility (SSRS)

https://msdn.microsoft.com/en-us/library/ms162822.aspx

Scale-out Deployment (Native Mode Report Server)

https://msdn.microsoft.com/en-us/library/ms181357.aspx

Add and Remove Encryption Keys for Scale-Out Deployment

https://msdn.microsoft.com/en-us/library/ms155931(v=sql.110).aspx

Hardware Sizing a Tabular Solution (SQL Server Analysis Services)

Hello everyone,

This month we released a new document about hardware sizing in SQL Server 2012 Analysis Services Tabular mode. This document provides guidance for estimating the hardware requirements needed to support processing and query workloads for an Analysis Services tabular solution.

Check more information here.

See you next time!

How can I learn Business Intelligence? Part-1


How can I learn Business Intelligence? My colleagues and customers have asked me this question several times. It is great to see that people in the market now realize that Business Intelligence doesn't simply mean SQL Server or databases. In other words, you shouldn't expect a person with deep SQL skills to be able to deliver Business Intelligence automatically. It took the market some time to realize this, but we are finally there! We can tell because the question "How can I learn Business Intelligence?" now comes from people who have been in the SQL market for ages.

I generally answer this question with another question: which Business Intelligence layer are you interested in? Yes, I divide BI into layers:

- Data Warehousing Layer

- ETL Layer (Extract, Transform, Load)

- Data Processing Layer

- Data Management Information Layer

- Data Visualisation & Content Management Layer

I recommend that my customers and colleagues start with data warehousing concepts, because it is not easy to switch from the stressful OLTP mindset (transactions/sec, high batch requests, concurrency, locking/blocking, etc.) to the more relaxed data warehousing and OLAP mindset (bulk loads, staging, transforming data, etc.).

Here are a few recommendations about data warehousing:

It is very useful to understand the Enterprise DWH:

https://msdn.microsoft.com/en-us/library/hh146875.aspx

There is also another useful link which provides wide range of resources about Data Warehousing:

https://technet.microsoft.com/en-us/sqlserver/dd421879.aspx

I also recommend reading the book The Definitive Guide to Dimensional Modeling.

For our Premier customers, we have two modules in the BI Academy. These are:

Module 1

BI Fundamentals

Overview of the fundamental concepts in a BI solution by introducing the different multi-dimensional models.

Module 2

Building and loading data warehouses with SSIS & T-SQL

Review how to improve the performance of a BI solution and review how to troubleshoot the most common scenarios, including DW Design, SSIS Development and how to load data into a Data Warehouse.

Covering these topics with some hands-on experience should give you a good starting point for the Data Warehousing Layer.

I will cover the rest of the layers in the following posts.

How to use SSIS to migrate data to Azure database from on-premise database

Today I read an MSDN article stating that Azure database supports ODBC, so I ran a test migrating data from an on-premises database to an Azure database.

General Guidelines and Limitations (Windows Azure SQL Database)

https://msdn.microsoft.com/en-us/library/windowsazure/ee336245.aspx#dlaps

Let's see how to use SSIS to migrate data from an on-premises database to Azure.

- Create a DSN via ODBC. Most steps are the same as in a traditional ODBC configuration; just pay attention to the server name format, SQL authentication, and the default database.

- I now had a DSN named "AzureDB" whose default database is the destination Azure database.

- Use SQL Server Data Tools to create an Integration Services project.

- Drag a "Data Flow Task" onto the Control Flow.

- In Connection Managers, create a new "OLE DB Connection Manager" and choose the on-premises database; then create a new "ODBC Connection Manager" and choose the Azure database.

- In the Data Flow, drag in an "OLE DB Source" and an "ODBC Destination". Point the OLE DB Source at the "OLE DB Connection Manager" and choose the table you want to migrate; point the ODBC Destination at the "ODBC Connection Manager" and choose the table you created in the Azure database.

- Run the SSIS package; the data is now migrated to the Azure database.

Enjoy it!

Identifying Cell by Cell Computation Mode in MDX queries

Some weeks ago I was asked to help with a performance issue in some MDX queries.

I was shown what the customer wanted to achieve: they needed to calculate some metrics, including sampling errors, using the "Conglomerados Últimos" method (which can be translated as the "Conglomerates Last" method). This method was created by a Spanish researcher and published in a Spanish publication to calculate sampling errors a posteriori when the sample is stratified. The method is described in Spanish (sorry, I don't have an English version of this document) here: https://dialnet.unirioja.es/descarga/articulo/250808.pdf

Just trying to understand the method and formulas was hard, but the customer already had an elegant solution using an MDX script and the SCOPE statement. So I focused on the performance issue instead of trying to understand the formulas.

After a couple of hours I found that the formula was using cell-by-cell calculation instead of block calculation. I then found the operator at fault (the "^" operator), and after a small change (multiplying the measure by itself) the query improved from around 30 seconds to 1 second.

You can find some details about the operators that support block computation in "Identifying and Resolving MDX Query Performance Bottlenecks in SQL Server 2005 Analysis Services" at https://www.microsoft.com/en-us/download/details.aspx?id=661. You can also find some guidelines for improving query performance at https://msdn.microsoft.com/en-us/library/bb934106.aspx

What I want to focus on in this post is how you can detect that the issue is cell-by-cell calculation.

We have two main tools to troubleshoot this kind of problem: SQL Server Profiler and Performance Monitor. The recommended order is to first use Profiler to establish that the problem is in the Formula Engine, and then use Performance Monitor to confirm that the query is using cell-by-cell calculation instead of block calculation (or computation).

Here is the recommended methodology. It is not new, but I haven't found a good reference that goes through these steps.

Step 1. Identify whether the bottleneck is the Formula Engine

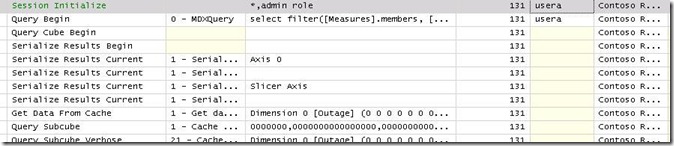

Use Profiler to capture the execution of your query. This is easiest in an isolated environment, but if that is not possible you can still filter the events from your query using the ConnectionId or some other column.

You need to add up the durations of all Query Subcube events and then subtract that total from the duration shown in the Query End event. In this example most subcubes take 0 ms, some take 16 ms, and one that is not shown in the image took 110 ms. The total for the query was 3,953 ms, which means that Analysis Services spent 142 ms in the Storage Engine and the remaining 3,811 ms in the Formula Engine.
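The subtraction above is simple enough to express as a small helper. This is only an illustrative sketch: the function name is made up, and the list of subcube durations is invented so that it adds up to the 142 ms reported in the text (the real trace contains more events).

```python
def split_query_time(query_end_ms, subcube_durations_ms):
    """Split a query's duration into Storage Engine and Formula Engine time.

    query_end_ms:         duration reported by the Query End event.
    subcube_durations_ms: durations of the Query Subcube events of that query.
    """
    storage_engine_ms = sum(subcube_durations_ms)
    # Whatever was not spent reading subcubes is attributed to the Formula Engine.
    formula_engine_ms = query_end_ms - storage_engine_ms
    return storage_engine_ms, formula_engine_ms


# Invented durations that sum to the 142 ms mentioned in the text.
subcubes = [0, 0, 0, 16, 16, 110]
se_ms, fe_ms = split_query_time(3953, subcubes)
print(f"Storage Engine: {se_ms} ms, Formula Engine: {fe_ms} ms")
```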

In conclusion, we have a Formula Engine bottleneck. Let's check whether that bottleneck is caused by cell-by-cell calculation.

The previous procedure may not scale well when you have hundreds of subcube events, or many queries potentially using cell-by-cell calculation in the same trace. In that situation you can use a procedure like this one:

- Save the Profiler trace to a SQL Server table.

- Execute the following SQL query to obtain a list of the longest-running queries:

SELECT ROWNUMBER, EVENTCLASS, EVENTSUBCLASS, TEXTDATA, DURATION, CONNECTIONID FROM <TABLE_NAME> WHERE EVENTCLASS=10 ORDER BY DURATION DESC

- Execute the following SQL query to get the beginning and ending points of the query in the trace:

SELECT TOP 2 ROWNUMBER, EVENTCLASS, EVENTSUBCLASS, TEXTDATA, DURATION, CONNECTIONID FROM <TABLE_NAME> WHERE EVENTCLASS IN (9,10) AND CONNECTIONID=<TARGET_CONNECTIONID> AND ROWNUMBER <= <ROWNUMBER_OF_TARGET_QUERY> ORDER BY ROWNUMBER DESC

- Execute the following SQL query to determine the amount of time spent in the Storage Engine:

SELECT SUM(DURATION) FROM <TABLE_NAME> WHERE EVENTCLASS=11 AND CONNECTIONID=<TARGET_CONNECTIONID> AND ROWNUMBER BETWEEN <QUERY_BEGIN_ROWNUMBER> AND <QUERY_END_ROWNUMBER>
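The three queries above can be chained together. The sketch below does so in Python against an in-memory SQLite table that stands in for the saved trace. The table name `trace`, the sample rows, and the helper names are made up for illustration, while the event class numbers (9, 10, 11) are the ones used in the queries above. SQLite uses LIMIT where T-SQL uses TOP; against a real SQL Server trace table you would run the original T-SQL.

```python
import sqlite3


def build_demo_trace():
    """Create an in-memory stand-in for a saved Profiler trace (made-up rows)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE trace (ROWNUMBER INTEGER, EVENTCLASS INTEGER, "
        "DURATION INTEGER, CONNECTIONID INTEGER)"
    )
    rows = [
        (1, 9, None, 42),   # Query Begin, connection 42
        (2, 9, None, 7),    # Query Begin, connection 7
        (3, 11, 16, 42),    # Query Subcube, connection 42
        (4, 11, 50, 7),     # Query Subcube, connection 7
        (5, 11, 110, 42),   # Query Subcube, connection 42
        (6, 10, 60, 7),     # Query End, connection 7
        (7, 11, 16, 42),    # Query Subcube, connection 42
        (8, 10, 3953, 42),  # Query End, connection 42
    ]
    conn.executemany("INSERT INTO trace VALUES (?, ?, ?, ?)", rows)
    return conn


def longest_query_breakdown(conn):
    """Return (total_ms, storage_engine_ms, formula_engine_ms) for the longest query."""
    cur = conn.cursor()
    # 1. Longest-running Query End event (EVENTCLASS = 10).
    end_row, total_ms, conn_id = cur.execute(
        "SELECT ROWNUMBER, DURATION, CONNECTIONID FROM trace "
        "WHERE EVENTCLASS = 10 ORDER BY DURATION DESC LIMIT 1"
    ).fetchone()
    # 2. Closest preceding Query Begin event (EVENTCLASS = 9) on that connection.
    (begin_row,) = cur.execute(
        "SELECT MAX(ROWNUMBER) FROM trace "
        "WHERE EVENTCLASS = 9 AND CONNECTIONID = ? AND ROWNUMBER <= ?",
        (conn_id, end_row),
    ).fetchone()
    # 3. Storage Engine time: Query Subcube events (EVENTCLASS = 11) in between.
    (storage_ms,) = cur.execute(
        "SELECT COALESCE(SUM(DURATION), 0) FROM trace "
        "WHERE EVENTCLASS = 11 AND CONNECTIONID = ? AND ROWNUMBER BETWEEN ? AND ?",
        (conn_id, begin_row, end_row),
    ).fetchone()
    return total_ms, storage_ms, total_ms - storage_ms


total, storage, formula = longest_query_breakdown(build_demo_trace())
print(f"total={total} ms, storage engine={storage} ms, formula engine={formula} ms")
```

Filtering on the connection ID keeps subcube events from other sessions out of the sum, which is what makes the approach usable on a trace captured from a shared server.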

Step 2. Identify if the issue is Cell by Cell Calculation

You can use Performance Monitor to identify whether the calculations are being done cell by cell. To succeed, you need to run your query in an isolated environment: there is a single counter for all queries, so to be sure that your query is the one causing the problem you must execute it alone, with no other concurrent queries. If your query is using cell-by-cell calculation, you will see something like the following image.

In the image you can see that the counter being used is "MSOLAP$InstanceName:MDX \ Total cells calculated"; its initial value is 0, and its value after executing the query is a little above 1 million.

This was measured after restarting the instance, so we can see that a single execution of the query had to calculate more than 1 million cells. If this is the first time you do this, you won't know whether 1 million is a lot or a little. I can tell you that it is very high for this specific query.

Step 3. Modify your query

Modify the query using the guidelines in the links referenced above until you stop seeing big increments in cells calculated for your query.

In this particular case, the script was using a couple of expressions like:

Measures.Measure1 ^ 2

We changed the formula to something like:

Measures.Measure1 * Measures.Measure1
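If a script contains many such expressions, a small text rewrite can help locate and replace them. The following Python sketch is an assumption-laden illustration, not an MDX parser: the function name is made up, and it only handles a plain member or measure reference (optionally in square brackets) raised to the literal power 2. Anything more complex is left untouched and should be reviewed by hand.

```python
import re

# A dotted reference such as Measures.Measure1 or [Measures].[Sales Amount],
# followed by "^ 2" (but not "^ 20" or "^ 2.5").
_SQUARE = re.compile(
    r"((?:\[[^\]]+\]|\w+)(?:\.(?:\[[^\]]+\]|\w+))*)\s*\^\s*2(?![\w.])"
)


def expand_squares(mdx_expr):
    """Rewrite 'X ^ 2' as '(X * X)' for simple references X."""
    # Parentheses keep the original precedence, e.g. in 'A / B ^ 2'.
    return _SQUARE.sub(lambda m: f"({m.group(1)} * {m.group(1)})", mdx_expr)


print(expand_squares("Measures.Measure1 ^ 2"))
```

The rewritten expression is wrapped in parentheses because "^" binds more tightly than "*" and "/", so a bare substitution could change the result of a surrounding expression.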

After the formula was changed, we got the following results.

You can see that the total for the query is now 156 ms. Considering that the Storage Engine must have used about the same time, 132 ms, the Formula Engine is now taking only 24 ms.

In Performance Monitor we can see that the total number of cells calculated was 41. The query was executed after another restart of the instance.

Final Comments

This is just a basic procedure to find out what is causing the problem in your query. Modifying the query to avoid cell-by-cell calculation will sometimes be easy, as in this real case, and will be hard in other scenarios.

I hope this is helpful to start troubleshooting your own cases.
